Decoding Development: A Comprehensive Guide to Gene Regulatory Network Analysis from Single-Cell to Clinical Applications

This article provides a comprehensive overview of gene regulatory network (GRN) analysis in developmental biology, tailored for researchers, scientists, and drug development professionals.

Abstract

This article provides a comprehensive overview of gene regulatory network (GRN) analysis in developmental biology, tailored for researchers, scientists, and drug development professionals. It covers foundational principles, exploring how GRNs act as a crucial bottleneck between genotype and phenotype, defining cell fate and morphological changes. The scope extends to cutting-edge methodological approaches for GRN inference from single-cell and multi-omics data, including strategies to overcome technical challenges like data sparsity. The article further details rigorous validation frameworks and comparative analysis techniques for assessing network quality and identifying condition-specific regulatory differences. Finally, it explores the translation of these insights into clinical applications, including drug repurposing and the development of personalized therapeutic strategies for complex diseases.

The Blueprint of Life: Unraveling Core Principles of Gene Regulatory Networks in Development

Gene regulatory networks (GRNs) represent the complex, interwoven relationships between genes, their regulators, and the cellular processes they control. Understanding GRN architecture is fundamental to unraveling the mechanisms of development, cell identity, and disease pathogenesis. This article provides a structured overview of the methodological foundations for GRN inference, focusing on the evolution from statistical modeling to the integration of multi-omic single-cell data. We present standardized protocols for contemporary inference tools, detail essential research reagents, and benchmark performance of leading algorithms. Framed within developmental biology research, this guide aims to equip scientists with the practical knowledge to transition from computational predictions to biologically meaningful insights, thereby accelerating discovery in functional genomics and therapeutic development.

In eukaryotes, gene expression is tightly regulated by transcription factors, proteins that determine cell identity and control cellular states by activating or repressing the expression of specific target genes [1]. The ensemble of these interactions forms a gene regulatory network (GRN), which coordinates gene expression and controls the behavior of cellular systems [2]. The genomic program for development operates primarily through the regulated expression of genes encoding transcription factors and components of cell signaling pathways, executed by cis-regulatory DNA elements such as enhancers and silencers [3].

The study of GRNs provides an integrative approach to fundamental research questions, bridging systems biology, developmental and evolutionary biology, and functional genomics [4]. Solved developmental GRNs from model organisms like sea urchins, flies, and mice have illuminated the structural organization of hierarchical networks and the developmental functions of GRN circuit modules [4] [3]. Modern sequencing technologies, particularly single-cell and single-nuclei RNA-sequencing, have revolutionized this field by enabling the resolution of regulatory heterogeneity across individual cells, opening new avenues for understanding the mechanistic alterations that lead to diseased phenotypes [1] [5].

Methodological Foundations for GRN Inference

GRN inference relies on diverse statistical and algorithmic principles to uncover regulatory connections. The choice of method depends on the research question, data type, and available prior knowledge [6] [5]. The table below summarizes the core methodological approaches, their underlying principles, and key considerations for use.

Table 1: Foundational Methodologies for Gene Regulatory Network Inference

| Method Category | Core Principle | Representative Algorithms | Best-Suited Data | Key Assumptions & Considerations |
|---|---|---|---|---|
| Correlation-Based | Measures association (e.g., Pearson, Spearman, Mutual Information) between expression of TFs and potential target genes. | WGCNA, PIDC [1] [5] | Steady-state transcriptomic data (bulk or single-cell). | Identifies co-expression but cannot distinguish direct vs. indirect regulation or infer causality. |
| Regression Models | Models a gene's expression as a function of multiple predictor TFs/CREs. Coefficients indicate interaction strength/direction. | LASSO, PLS [5] [2] | Data with a sufficient number of observations per variable. | Penalized regression (e.g., LASSO) introduces sparsity to prevent overfitting. More interpretable than deep learning. |
| Probabilistic Models | Uses graphical models to represent dependence between variables, estimating the most probable regulatory relationships. | (Various Bayesian approaches) [5] | Data where prior knowledge of network structure can be incorporated. | Often assumes gene expression follows a specific distribution (e.g., Gaussian), which may not hold true. |
| Dynamical Systems | Models gene expression as a system evolving over time using differential equations. | SCODE, SINGE, SSIO [7] [5] [2] | Time-series or pseudo-time-ordered gene expression data. | Captures kinetic parameters but is complex, less scalable, and often depends on prior knowledge. |
| Deep Learning | Uses neural networks (e.g., Autoencoders, GNNs) to learn complex, non-linear relationships from data. | DeepSEM, DAZZLE, DAG-GNN [7] [5] | Large-scale single-cell multi-omic datasets. | Highly flexible but requires large amounts of data and computational resources; less interpretable. |
| Message-Passing | Integrates multiple data sources (motif, PPI, expression) by iteratively passing information between networks. | PANDA, SCORPION [1] | Integrated multi-omic data (e.g., expression, motif, protein-protein interaction). | Generates directed, weighted networks. Effective but computationally intensive for large networks. |
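
As a rough illustration of the correlation-based row in Table 1, the sketch below scores candidate TF-target pairs by Pearson correlation on a toy expression matrix. The gene names and values are invented for illustration, and the code is not the WGCNA or PIDC implementation; it only shows why such scores are symmetric and therefore carry no direction.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length expression vectors."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    # A constant gene has no variance, so its correlation is reported as 0.
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0

# Hypothetical log-normalized expression across six cells.
expression = {
    "TF_A":  [0.0, 1.0, 2.0, 3.0, 4.0, 5.0],
    "gene1": [0.1, 1.1, 2.1, 2.9, 4.2, 5.0],  # tracks TF_A
    "gene2": [5.0, 4.1, 3.0, 2.2, 0.9, 0.0],  # anti-correlated with TF_A
    "gene3": [2.0, 2.0, 2.0, 2.0, 2.0, 2.0],  # constant, uninformative
}

def score_edges(expr, tfs):
    """Return (TF, gene, correlation) triples ranked by absolute correlation."""
    edges = []
    for tf in tfs:
        for gene in expr:
            if gene != tf:
                edges.append((tf, gene, pearson(expr[tf], expr[gene])))
    return sorted(edges, key=lambda e: abs(e[2]), reverse=True)

edges = score_edges(expression, ["TF_A"])
for tf, gene, r in edges:
    print(f"{tf} -- {gene}: r = {r:+.3f}")
```

Because `pearson(x, y)` equals `pearson(y, x)`, the ranking alone cannot say whether TF_A regulates gene1 or the reverse, which is the limitation noted in the table.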

Application Notes: From Single-Cell Data to Biological Insight

The advent of single-cell RNA-sequencing (scRNA-seq) has provided unprecedented resolution but also introduces challenges like data sparsity and "dropout" events [7]. The following protocols address these challenges using two contemporary methods, SCORPION and DAZZLE, that performed well in their published benchmarks [1] [7].

Protocol 1: GRN Inference with SCORPION for Population-Level Comparisons

SCORPION (Single-Cell Oriented Reconstruction of PANDA Individually Optimized gene regulatory Networks) is an R package that reconstructs comparable, fully connected, weighted, and directed transcriptome-wide GRNs suitable for population-level studies [1].

Detailed Methodology

- Input Data Preprocessing: Begin with a high-throughput scRNA-seq count matrix (cells x genes). Normalize and log-transform the data (e.g., log(x+1)) [7] [1].

- Data Coarse-graining (Desparsification): To mitigate sparsity, collapse a user-defined number (k) of the most transcriptionally similar cells into "SuperCells" or "MetaCells." This step reduces technical noise and enables more robust correlation estimates [1].

- Construct Initial Networks: Build three unrefined networks as per the PANDA algorithm:

  - Co-regulatory Network: Calculate pairwise gene-gene correlation from the coarse-grained expression matrix.

  - Cooperativity Network: Download protein-protein interaction data for transcription factors from the STRING database.

  - Regulatory Network: Compile a prior network of TF-to-gene interactions based on the presence of transcription factor binding motifs in gene promoters [1].

- Iterative Message Passing:

  - Calculate the Responsibility Network (Rij), which represents information flowing from TF i to gene j, by computing the similarity between the cooperativity and regulatory networks.

  - Calculate the Availability Network (Aij), which represents information flowing from gene j to TF i, by computing the similarity between the co-regulatory and regulatory networks.

  - Update the Regulatory Network by taking the average of the Responsibility and Availability networks and incorporating a small proportion (default α=0.1) of information from the other two initial networks.

  - Update the co-regulatory and cooperativity networks based on the new regulatory network [1].

- Convergence Check: Repeat the iterative message-passing step until the Hamming distance between successive regulatory networks falls below a defined threshold (default 0.001). The final output is a refined, sample-specific regulatory network matrix [1].
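
The preprocessing and coarse-graining steps above can be sketched on a toy count matrix. This is a minimal illustration, not SCORPION's own code: the greedy nearest-neighbour grouping, the Euclidean distance, and the helper names `coarse_grain` and `zero_fraction` are assumptions made for the example, and the package's actual grouping procedure may differ.

```python
import math

def log_transform(counts):
    """Apply log(x + 1) to every entry of a cells x genes count matrix."""
    return [[math.log1p(v) for v in cell] for cell in counts]

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def coarse_grain(cells, k):
    """Greedily merge each unassigned cell with its k-1 nearest unassigned cells.

    Simplified stand-in for SuperCell/MetaCell construction (an assumption of
    this sketch): each group is replaced by its mean expression profile.
    """
    unassigned = list(range(len(cells)))
    supercells = []
    while unassigned:
        seed = unassigned.pop(0)
        unassigned.sort(key=lambda idx: euclidean(cells[seed], cells[idx]))
        members = [seed] + unassigned[:k - 1]
        unassigned = unassigned[k - 1:]
        n_genes = len(cells[seed])
        supercells.append(
            [sum(cells[m][g] for m in members) / len(members) for g in range(n_genes)]
        )
    return supercells

def zero_fraction(matrix):
    """Fraction of entries that are exactly zero (a simple sparsity measure)."""
    values = [v for row in matrix for v in row]
    return sum(1 for v in values if v == 0) / len(values)

# Hypothetical counts: two cell populations with dropout-like zeros.
counts = [
    [5, 0, 0, 0], [0, 4, 0, 0], [6, 3, 0, 0],  # population expressing genes 0-1
    [0, 0, 7, 0], [0, 0, 0, 6], [0, 0, 5, 3],  # population expressing genes 2-3
]
log_counts = log_transform(counts)
supercells = coarse_grain(log_counts, k=3)
print(f"zeros before: {zero_fraction(log_counts):.2f}, after: {zero_fraction(supercells):.2f}")
```

In this toy case the averaged profiles contain fewer zeros than the single cells, which is the effect the coarse-graining step relies on before gene-gene correlations are computed.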

Protocol 2: GRN Inference with DAZZLE for Handling Dropout Noise

DAZZLE (Dropout Augmentation for Zero-inflated Learning Enhancement) is a neural network-based method that addresses the zero-inflation problem in scRNA-seq data using a novel regularization strategy called Dropout Augmentation (DA) [7].

Detailed Methodology

- Input Transformation: Start with a single-cell gene expression count matrix. Transform it using log(x+1) to stabilize variance and avoid taking the logarithm of zero [7].

- Dropout Augmentation (DA): At each training iteration, randomly select a small proportion of non-zero expression values and artificially set them to zero. This simulates additional dropout noise, effectively regularizing the model and forcing it to become robust to missing data [7].

- Model Architecture and Training:

  - DAZZLE uses a variational autoencoder (VAE) structure. The encoder processes the augmented input data to generate a latent representation Z.

  - A noise classifier is trained concurrently to identify which zeros in the data are likely to be technical artifacts (the augmented zeros). This helps the decoder learn to rely less on these noisy data points.

  - The decoder reconstructs the input expression data using the latent representation Z and a learned, parameterized adjacency matrix A, which represents the regulatory interactions.

  - The model is trained to minimize reconstruction error. A sparsity constraint is applied to the adjacency matrix A to reflect the biological fact that GRNs are sparse [7] [8].

- Output: After training, the weights of the adjacency matrix A are extracted as the inferred GRN. The matrix is weighted and directed, indicating the strength and direction of regulation [7].
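
The Dropout Augmentation step can be sketched independently of the neural network. The example below is a minimal illustration under stated assumptions: the function name `dropout_augment`, the `rate` parameter, and the toy counts are hypothetical, and the real DAZZLE implementation operates on tensors inside its training loop.

```python
import math
import random

def dropout_augment(matrix, rate=0.1, rng=None):
    """Zero out a random proportion of the non-zero entries.

    Returns the augmented matrix and a 0/1 mask marking the augmented zeros.
    The mask is the kind of label a noise classifier could be trained on.
    """
    rng = rng or random.Random()
    nonzero = [(i, j) for i, row in enumerate(matrix)
               for j, v in enumerate(row) if v != 0]
    n_drop = int(round(rate * len(nonzero)))
    dropped = set(rng.sample(nonzero, n_drop))
    augmented = [[0.0 if (i, j) in dropped else v for j, v in enumerate(row)]
                 for i, row in enumerate(matrix)]
    mask = [[1 if (i, j) in dropped else 0 for j in range(len(row))]
            for i, row in enumerate(matrix)]
    return augmented, mask

# Hypothetical count matrix (cells x genes) with ten non-zero entries.
counts = [
    [3, 0, 1, 0],
    [0, 2, 0, 5],
    [4, 1, 0, 0],
    [0, 0, 6, 2],
    [1, 3, 0, 0],
]
# Input transformation: log(x + 1) keeps zeros at zero.
log_counts = [[math.log1p(v) for v in row] for row in counts]

# A fresh mask would be drawn at every training iteration; one draw is shown.
augmented, mask = dropout_augment(log_counts, rate=0.2, rng=random.Random(0))
print("augmented zeros:", sum(map(sum, mask)))
```

Drawing a new mask at each iteration means the model never sees the same set of artificial zeros twice, which is what makes the augmentation act as a regularizer rather than as a fixed corruption of the data.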

The Scientist's Toolkit: Research Reagent Solutions

The following table catalogues essential materials and computational tools referenced in the protocols for reconstructing and validating GRNs.

Table 2: Essential Research Reagents and Resources for GRN Analysis

| Item Name | Function/Application | Specifications & Notes |
|---|---|---|
| 10x Genomics Multiome | Simultaneously profiles single-cell gene expression (RNA) and chromatin accessibility (ATAC) within the same cell. | Provides matched multi-omic data, crucial for inferring causal TF-gene links by linking open chromatin to target genes [5]. |
| CRISPR Perturb-seq | Enables large-scale screening of gene function by coupling CRISPR knockouts with single-cell RNA sequencing. | Generates causal data for GRN validation by revealing transcriptome-wide effects of knocking out specific regulators [8]. |
| STRING Database | A database of known and predicted protein-protein interactions (PPIs). | Used in SCORPION to build the cooperativity network, informing on which TFs are likely to interact [1]. |
| Motif Databases (e.g., JASPAR) | Collections of transcription factor binding site profiles. | Used to construct the prior regulatory network by identifying potential TF-binding sites in gene promoters [1] [9]. |
| BEELINE | A computational framework and benchmark suite for systematically evaluating GRN inference algorithms. | Used to benchmark new methods against ground-truth synthetic and curated real networks [1]. |
| Augusta | An open-source Python package for GRN and Boolean Network inference from high-throughput gene expression data. | Useful for generating genome-wide models suitable for both static and dynamic analysis, even for non-model organisms [9]. |

Benchmarking Performance and Validation

Validating inferred GRNs remains a significant challenge. Benchmarking against synthetic data where the ground truth is known provides one objective measure of performance.

Table 3: Benchmarking Performance of GRN Inference Methods on Synthetic Data

| Method | Key Advantage | Precision | Recall | Stability/Robustness | Scalability |
|---|---|---|---|---|---|
| SCORPION | Integrates multiple data priors via message passing; excellent for population-level comparison. | High (18.75% higher than benchmark average) [1] | High (18.75% higher than benchmark average) [1] | High; robust to sparsity via coarse-graining. [1] | High; suitable for transcriptome-wide networks. [1] |
| DAZZLE | Specifically designed to handle zero-inflation in single-cell data via Dropout Augmentation. | High (superior to DeepSEM in benchmarks) [7] | High (superior to DeepSEM in benchmarks) [7] | High; shows increased training stability and robustness. [7] | High; reduced model size and computation time vs. DeepSEM. [7] |
| DeepSEM | Pioneering VAE-based approach for GRN inference. | Moderate | Moderate | Moderate; prone to overfitting dropout noise. [7] | High |
| PPCOR & PIDC | Correlation and information-theoretic approaches. | Moderate (similar to SCORPION on small nets) [1] | Moderate (similar to SCORPION on small nets) [1] | N/A | Limited in transcriptome-wide scenarios. [1] |

Biological Validation: Computational benchmarks must be supplemented with biological validation. A powerful approach is to use perturbation data. For example, after inferring a GRN, researchers can experimentally perturb key transcription factors (e.g., via CRISPR) and measure whether the expression changes in predicted target genes align with the model's predictions [1] [8]. Furthermore, comparing networks across conditions, such as wild-type versus mutant cells or healthy versus diseased tissue, can reveal differentially active regulatory pathways that provide mechanistic insights into phenotypes [1].

The journey from statistical inference to biological meaning in GRN analysis is complex but increasingly tractable. The methods detailed here, such as SCORPION and DAZZLE, exemplify the sophisticated approaches being developed to overcome the challenges of single-cell data sparsity and cellular heterogeneity. By following standardized protocols, leveraging appropriate reagent solutions, and employing rigorous benchmarking and validation, researchers can confidently extract biologically meaningful insights from GRN models. As these tools continue to evolve and integrate more diverse data types, they will profoundly deepen our understanding of developmental biology and provide a robust foundation for identifying novel therapeutic targets in human disease.

A fundamental objective in developmental biology is to elucidate the mechanisms that translate static genomic information into dynamic, complex organisms. This genotype-to-phenotype mapping represents one of the most significant challenges in modern biology. Gene Regulatory Networks (GRNs) have emerged as the crucial conceptual and mechanistic framework that occupies the phenotypic bottleneck—the strategic interface where genomic information is processed and filtered to execute developmental programs. A GRN is a graph-level representation comprising genes (nodes) and their regulatory interactions (edges), primarily governed by transcription factors (TFs) that bind to cis-regulatory elements to control target gene expression [10]. These networks are not merely collections of independent gene interactions but are instead complex, hierarchical systems that exhibit emergent properties such as robustness and adaptability [11] [12].

The architecture of GRNs enables them to function as computational devices that interpret genomic sequences and environmental cues to direct developmental outcomes. During development, the expression of specific genes in distinct cells leads to cellular differentiation and tissue patterning, processes that are remarkably robust against genetic and environmental perturbations [11]. This robustness is exemplified by developmental genes, such as the Hox genes in Drosophila, which are expressed in precise patterns that provide positional information and segment identity to the developing embryo [11]. The GRN topology evolves through processes of duplication, mutation, and selection, giving rise to novel regulatory mechanisms that drive evolutionary change [12]. The characterization of GRNs therefore provides not only insights into developmental processes but also a window into evolutionary dynamics, including how phenotypic plasticity can facilitate genetic accommodation and assimilation [13].

Theoretical Framework: GRN Architecture and Phenotypic Control

Network Topology and Information Processing

GRNs possess distinct architectural features that determine their functional capabilities and phenotypic influence. These networks are bipartite and directional, consisting of two types of nodes—transcription factors and their target genes—connected by directed edges representing regulatory relationships [11]. The topology of GRNs is non-random, characterized by specific connectivity patterns including hubs (highly connected nodes) and modular organization [11]. Key topological metrics include:

- Node Degree: The number of relationships a node engages in, differentiated as:

  - In-degree: Number of TFs regulating a gene

  - Out-degree: Number of genes regulated by a TF

- Flux Capacity: The product of a regulator's in-degree and out-degree, representing its potential information flow

- Betweenness: The number of shortest paths passing through a node, indicating its centrality in connecting network modules [11]
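
The metrics listed above can be computed directly from an edge list. The sketch below uses a hypothetical five-node network and a plain-Python version of the standard Brandes algorithm for betweenness on a directed, unweighted graph; node names and edges are invented for illustration.

```python
from collections import deque

# Hypothetical toy GRN: directed edges from regulator to target.
edges = [("TF_A", "TF_B"), ("TF_A", "gene3"), ("TF_B", "gene1"), ("TF_B", "gene2")]

nodes = sorted({n for edge in edges for n in edge})
targets = {n: [] for n in nodes}
for src, dst in edges:
    targets[src].append(dst)

out_degree = {n: len(targets[n]) for n in nodes}
in_degree = {n: sum(1 for _, dst in edges if dst == n) for n in nodes}
flux_capacity = {n: in_degree[n] * out_degree[n] for n in nodes}

def betweenness(nodes, targets):
    """Brandes betweenness: shortest paths through each node, summed over all
    source-target pairs (fractional when several shortest paths tie)."""
    score = {v: 0.0 for v in nodes}
    for s in nodes:
        order = []                         # nodes in order of discovery
        preds = {v: [] for v in nodes}     # predecessors on shortest paths
        n_paths = {v: 0 for v in nodes}
        dist = {v: -1 for v in nodes}
        n_paths[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:                       # breadth-first search from s
            v = queue.popleft()
            order.append(v)
            for w in targets[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    n_paths[w] += n_paths[v]
                    preds[w].append(v)
        dependency = {v: 0.0 for v in nodes}
        for w in reversed(order):          # accumulate from the farthest nodes
            for v in preds[w]:
                dependency[v] += n_paths[v] / n_paths[w] * (1 + dependency[w])
            if w != s:
                score[w] += dependency[w]
    return score

centrality = betweenness(nodes, targets)
for n in nodes:
    print(n, in_degree[n], out_degree[n], flux_capacity[n], centrality[n])
```

Here TF_B is the only node with non-zero flux capacity and betweenness, because it is the only node that both receives and relays regulatory input; TF_A has the same out-degree but no incoming edges.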

The regulatory logic embedded within GRN architecture enables them to perform sophisticated information processing. Networks can exhibit both combinatorial control (multiple TFs regulating a single target gene) and pleiotropic regulation (single TF regulating multiple targets) [14]. This architecture allows GRNs to function as biological computational devices that integrate diverse inputs and generate coordinated transcriptional outputs, ultimately determining cellular states and developmental trajectories.

Mechanisms of Phenotypic Robustness and Variability

The interplay between robustness and variability in developmental outcomes is directly governed by GRN properties. Biological processes can be deterministic and robust, as seen in developmental patterning, or stochastic and variable, as observed in stress responses [11]. This balance is mediated at the gene expression level through several mechanisms:

- Mutational Robustness: The ability of GRNs to buffer against genetic perturbations, thereby maintaining phenotypic stability despite genetic variation [15]

- Gene Expression Noise: Stochastic fluctuations in gene expression that can generate phenotypic variability within cell populations, serving as a substrate for adaptation [15]

- Environmental Responsiveness: Network capacity to reconfigure gene expression in response to external cues, enabling phenotypic plasticity [13]

The binary GRN model developed by Wagner has been used to show that both mutational robustness and gene expression noise can promote phenotypic heterogeneity under certain conditions. In these simulations, population bottlenecks increase the number of potential "generator" genes, that is, genes that can substantially increase population fitness when stimulated by mutations [15]. This illustrates how GRN properties directly shape evolutionary potential by modulating phenotypic variability.

Table 1: Key Properties of GRNs Influencing Developmental Outcomes

| GRN Property | Functional Role | Impact on Phenotype |
|---|---|---|
| Modularity | Groups of highly interconnected nodes performing specific functions | Enables coordinated execution of developmental programs |
| Robustness | Buffer against genetic and environmental perturbations | Ensures reproducible developmental outcomes |
| Adaptability | Capacity for network reconfiguration | Facilitates evolutionary change and environmental response |
| Hierarchy | Multi-layered control architecture | Establishes developmental progression and timing |
| Stochasticity | Controlled noise in gene expression | Generates phenotypic diversity within populations |

Methodological Approaches: Inferring and Analyzing GRNs

Computational Framework for GRN Inference

The reconstruction of GRNs from experimental data represents a significant computational challenge that has evolved substantially with advances in sequencing technologies and machine learning. Modern GRN inference methods can be broadly categorized based on their learning paradigms and data requirements:

- Supervised Learning: Trained on labeled datasets with known regulatory interactions to predict novel relationships (e.g., GENIE3, DeepSEM) [12]

- Unsupervised Learning: Identifies regulatory patterns from unlabeled gene expression data (e.g., ARACNE, LASSO) [14] [12]

- Semi-Supervised Learning: Combines limited labeled data with larger unlabeled datasets (e.g., GRGNN) [12]

- Contrastive Learning: Leverages similarities and differences in data representations (e.g., GCLink, DeepMCL) [12]

Recent advances have increasingly incorporated deep learning architectures including convolutional neural networks (CNNs), graph neural networks (GNNs), and transformer models to capture the complex, non-linear relationships within regulatory networks [12] [10]. The selection of appropriate inference methods depends on data availability, biological context, and specific research questions, with integration of multiple approaches often yielding the most robust results.

Experimental Protocol: GRN Inference from Single-Cell Multiome Data

The following protocol outlines the procedure for inferring cell type-specific GRNs from single-cell multiome data using the LINGER framework, which is reported to improve inference accuracy by integrating atlas-scale external data [16].

Protocol 1: GRN Inference Using LINGER

Input Requirements:

- Single-cell multiome data (paired gene expression and chromatin accessibility)

- Cell type annotations

- External bulk reference data (e.g., from ENCODE)

- Transcription factor motif database

Procedure:

Data Preprocessing

- Quality control of single-cell multiome data

- Normalization of gene expression and chromatin accessibility matrices

- Cell type identification and annotation

- Feature selection for highly variable genes and accessible regions

Model Pre-training with External Data

- Initialize neural network architecture with:

  - Input layer: TF expression and RE accessibility

  - Hidden layer: Regulatory modules guided by TF-RE motif matching

  - Output layer: Target gene expression

- Pre-train model on external bulk data (BulkNN) to learn general regulatory principles

Model Refinement with Single-Cell Data

- Apply elastic weight consolidation (EWC) loss to retain knowledge from bulk data

- Fine-tune model parameters using single-cell multiome data

- Incorporate manifold regularization using TF motif information

GRN Extraction and Validation

- Calculate regulatory strengths using Shapley values to estimate feature contributions

- Extract three interaction types:

  - trans-regulation (TF-TG interactions)

  - cis-regulation (RE-TG interactions)

  - TF-binding (TF-RE interactions)

- Validate inferences against orthogonal data (ChIP-seq, eQTL studies)

Cell Type-Specific Network Construction

- Generate population-level GRN from general model

- Derive cell type-specific GRNs using cell type expression profiles

- Construct cell-level GRNs for high-resolution analysis [16]
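
The refinement step relies on elastic weight consolidation, which can be sketched in its generic form: a quadratic penalty keeps each parameter close to its bulk-pretrained value, weighted by an importance estimate. The function name `ewc_loss`, the weight `lam`, and the toy numbers are assumptions of this example; LINGER's exact loss terms and weighting are not specified in the protocol above.

```python
def ewc_loss(task_loss, params, bulk_params, importance, lam=1.0):
    """Generic elastic weight consolidation objective.

    task_loss   -- loss on the single-cell data for the current parameters
    params      -- current parameter values (flat list)
    bulk_params -- parameter values after pre-training on bulk data
    importance  -- per-parameter importance (e.g., a Fisher information estimate)
    lam         -- strength of the penalty (hypothetical default)
    """
    penalty = sum(f * (p - p0) ** 2
                  for p, p0, f in zip(params, bulk_params, importance))
    return task_loss + 0.5 * lam * penalty

# Toy example: the first parameter is important for the bulk model and has not
# moved; the second is unimportant and has drifted by 1.0.
bulk_params = [1.0, 1.0]
params = [1.0, 2.0]
importance = [10.0, 0.5]

total = ewc_loss(task_loss=0.3, params=params, bulk_params=bulk_params,
                 importance=importance, lam=2.0)
print(f"total loss: {total:.2f}")
```

Parameters with high importance are expensive to change, so fine-tuning on single-cell data mostly adjusts parameters the bulk model did not depend on. This is the sense in which the refinement step retains knowledge from the bulk data.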

Troubleshooting Tips:

- Low prediction accuracy may indicate insufficient external data representation

- Poor cell type specification may require refinement of annotation

- Regulatory edge validation should prioritize high-confidence experimental datasets

Experimental Protocol: GRN Inference from scRNA-seq Data Using Graph Representation Learning

For studies with only single-cell RNA-seq data, the following protocol implements GRLGRN (Graph Representation Learning for Gene Regulatory Networks), which leverages graph transformer networks to infer regulatory relationships.

Protocol 2: GRN Inference from scRNA-seq Data Using GRLGRN

Input Requirements:

- scRNA-seq count matrix

- Prior GRN knowledge (optional but recommended)

- Ground truth network for validation (e.g., from STRING, ChIP-seq)

Procedure:

Data Preparation

- Process scRNA-seq data using standard normalization methods

- Handle technical noise and data dropout appropriately

- Select variable genes and relevant transcription factors

Graph Construction and Feature Extraction

- Construct prior GRN graph \(\mathcal{G} = (\mathcal{V},\mathcal{E})\) from available knowledge

- Formulate five directed subgraphs representing different regulatory relationships:

  - \(\mathcal{G}_1\): TF to target gene regulations

  - \(\mathcal{G}_2\): Reverse directions of \(\mathcal{G}_1\)

  - \(\mathcal{G}_3\): TF-TF regulatory relationships

  - \(\mathcal{G}_4\): Reverse directions of \(\mathcal{G}_3\)

  - \(\mathcal{G}_5\): Self-connected gene graph

- Concatenate the adjacency matrices into \(\mathbf{A}_{s}\in \{0, 1\}^{5\times N\times N}\), where \(N\) is the gene count

Graph Transformer Processing

- Extract implicit links using graph transformer network

- Generate tensors \(\mathbf{Q}^{(1)}\) and \(\mathbf{Q}^{(2)}\in \mathbb{R}^{B\times N\times N}\) through parameterized layers

- Apply multi-channel processing to capture diverse regulatory relationships

Feature Enhancement and Model Training

- Implement Convolutional Block Attention Module (CBAM) to refine gene features

- Incorporate graph contrastive learning regularization to prevent over-smoothing

- Train model using automatic weighted loss function

- Validate using ground truth networks and benchmark against established methods

Network Visualization and Interpretation

- Generate GRN visualizations highlighting hub genes and key regulatory modules

- Identify implicit links not present in prior knowledge

- Perform functional enrichment analysis of regulatory modules [10]
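
The graph construction step can be made concrete with a small example that builds the five stacked adjacency matrices from a prior edge list. This is a sketch under stated assumptions, not the GRLGRN code: the function name `build_subgraph_tensor` and the gene names are hypothetical, and the rule that an edge goes into the TF-TF subgraph whenever its target is itself a TF (and into the TF-to-target subgraph otherwise) is one reading of the description above.

```python
def build_subgraph_tensor(genes, tfs, prior_edges):
    """Return a 5 x N x N nested list of 0/1 adjacency matrices.

    Channel 0: TF -> target gene edges      (G1)
    Channel 1: reverse of channel 0         (G2)
    Channel 2: TF -> TF edges               (G3)
    Channel 3: reverse of channel 2         (G4)
    Channel 4: self-connections (identity)  (G5)
    """
    index = {g: i for i, g in enumerate(genes)}
    n = len(genes)
    tf_set = set(tfs)
    tensor = [[[0] * n for _ in range(n)] for _ in range(5)]
    for src, dst in prior_edges:
        i, j = index[src], index[dst]
        if dst in tf_set:          # assumption: TF targets go to the TF-TF graph
            tensor[2][i][j] = 1
            tensor[3][j][i] = 1
        else:
            tensor[0][i][j] = 1
            tensor[1][j][i] = 1
    for i in range(n):
        tensor[4][i][i] = 1
    return tensor

# Hypothetical prior network with two TFs and two non-TF target genes.
genes = ["TF1", "TF2", "g1", "g2"]
tfs = ["TF1", "TF2"]
prior_edges = [("TF1", "TF2"), ("TF1", "g1"), ("TF2", "g1"), ("TF2", "g2")]

A_s = build_subgraph_tensor(genes, tfs, prior_edges)
print(len(A_s), len(A_s[0]), len(A_s[0][0]))
```

Keeping the reversed and self-connected graphs as separate channels lets a multi-channel model weight each relationship type independently when it combines them into implicit links.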

Validation Metrics:

- Calculate AUROC (Area Under Receiver Operating Characteristic) and AUPRC (Area Under Precision-Recall Curve) against ground truth

- Compare performance with established benchmarks (e.g., GENIE3, GRNBoost2)

- Assess biological relevance through functional enrichment and literature validation
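
Both metrics can be computed from a list of predicted edge scores and matching ground-truth labels. The sketch below is a minimal plain-Python version: AUROC is computed as the probability that a true edge outscores a false one, and AUPRC is estimated by average precision, which is one common estimator among several. The scores and labels are invented for illustration.

```python
def auroc(scores, labels):
    """Probability that a random true edge outscores a random false edge.

    Ties count as half. Quadratic in the number of edges, which is fine for a
    sketch but too slow for transcriptome-wide networks.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one true and one false edge")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def auprc(scores, labels):
    """Average precision: mean precision at the rank of each true edge."""
    ranked = sorted(zip(scores, labels), key=lambda t: t[0], reverse=True)
    hits, total = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank
    return total / hits if hits else 0.0

# Hypothetical inferred edge scores and ground-truth labels (1 = true edge).
scores = [0.9, 0.8, 0.7, 0.6]
labels = [1, 0, 1, 0]

print(f"AUROC = {auroc(scores, labels):.3f}, AUPRC = {auprc(scores, labels):.3f}")
```

Because true regulatory edges are rare relative to all possible TF-gene pairs, AUPRC is usually the more demanding of the two metrics, and its baseline for a random predictor equals the fraction of true edges rather than 0.5.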

Table 2: Comparison of Advanced GRN Inference Methods

| Method | Learning Type | Data Input | Key Innovation | Performance Advantage |
|---|---|---|---|---|
| LINGER [16] | Supervised | Single-cell multiome + external bulk | Lifelong learning with external data | 4-7x relative increase in accuracy |
| GRLGRN [10] | Semi-supervised | scRNA-seq + prior GRN | Graph transformer with implicit link extraction | 7.3% AUROC and 30.7% AUPRC improvement |
| DeepIMAGER [12] | Supervised | Single-cell | CNN architecture | High accuracy on image-like expression representations |
| GRN-VAE [12] | Unsupervised | Single-cell | Variational autoencoder | Effective capture of non-linear relationships |
| STGRNS [12] | Supervised | Single-cell | Transformer model | Transfer learning capability |

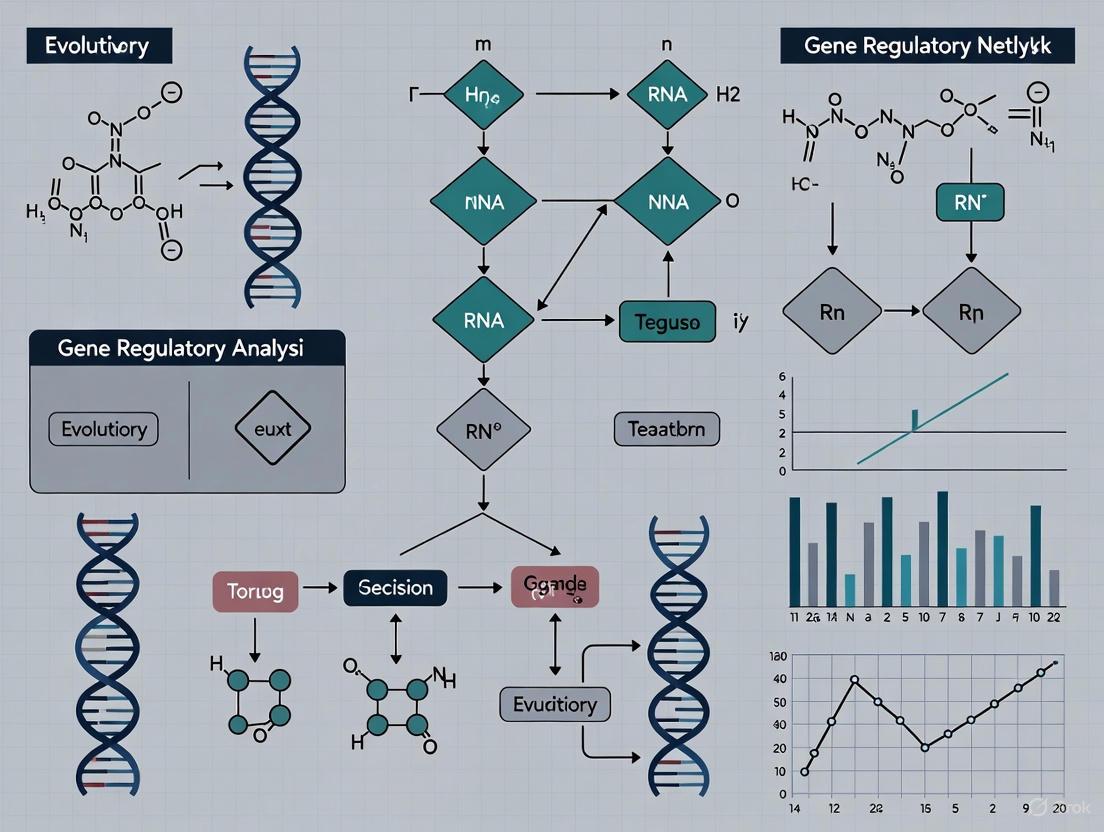

Visualization: GRN Architecture and Inference Workflows

GRN Topological Features and Regulatory Logic

Diagram 1: GRN Architecture and Key Components. This diagram illustrates fundamental GRN topological features including TF hubs (high out-degree), gene hubs (high in-degree), and different regulatory relationship types. The architecture demonstrates how combinatorial control and network hierarchy establish the information processing capacity of GRNs.

LINGER Workflow for GRN Inference from Multiome Data

Diagram 2: LINGER Workflow for Multiome Data Analysis. This diagram outlines the key steps in the LINGER framework, highlighting the integration of external bulk data through lifelong learning, refinement with single-cell data using elastic weight consolidation, and comprehensive GRN extraction incorporating multiple regulatory interaction types.

Table 3: Essential Research Reagents and Computational Tools for GRN Analysis

| Category | Resource/Reagent | Specification | Application in GRN Research |
|---|---|---|---|
| Experimental Methods | Chromatin Immunoprecipitation (ChIP) | Protein-specific antibodies | Mapping TF binding sites [11] |
| Experimental Methods | scRNA-seq | 10X Genomics, Smart-seq2 | Cell type-specific expression profiling [10] |
| Experimental Methods | scATAC-seq | 10X Multiome, SHARE-seq | Chromatin accessibility at single-cell resolution [16] |
| Experimental Methods | Yeast One-Hybrid (Y1H) | Gene-centered screening | Identification of TF-target interactions [11] |
| Computational Tools | LINGER | Python implementation | GRN inference from multiome data [16] |
| Computational Tools | GRLGRN | Graph transformer network | GRN inference from scRNA-seq data [10] |
| Computational Tools | GENIE3 | Random forest-based | Supervised GRN inference [12] |
| Computational Tools | Cytoscape | Network visualization platform | GRN visualization and analysis [11] |
| Reference Data | ENCODE | Bulk multiomics reference | External data for model pre-training [16] |
| Reference Data | BEELINE | Benchmarking platform | Standardized evaluation of GRN methods [10] |
| Reference Data | DREAM Challenges | Community benchmarking | GRN inference assessment [14] [12] |

Applications and Future Directions

The application of GRN analysis to developmental biology has yielded significant insights into the mechanisms governing cellular differentiation, tissue patterning, and phenotypic variation. The cichlid fish Astatoreochromis alluaudi provides a compelling example of how GRNs mediate diet-induced phenotypic plasticity, where alternative pharyngeal jaw morphologies emerge in response to different food sources through modifications in gene regulatory interactions [13]. Such studies demonstrate how environmentally sensitive GRNs can facilitate rapid phenotypic adaptation.

In medical research, GRN analysis has profound implications for understanding disease mechanisms and developing therapeutic interventions. Intra-tumor heterogeneity, a major challenge in cancer therapy, arises through evolutionary processes in cellular GRNs that increase phenotypic variability [15]. Reconstruction of GRNs from patient samples can identify master regulator TFs that drive disease progression, potentially revealing novel therapeutic targets [10] [16]. The LINGER framework has demonstrated particular utility in enhancing the interpretation of disease-associated variants from genome-wide association studies by placing them within a regulatory context [16].

Future methodological developments will likely focus on enhancing multi-omics integration, improving temporal resolution of regulatory dynamics, and incorporating spatial information into GRN models. The field will also benefit from standardized benchmarking resources like BEELINE [10] and community challenges that establish performance standards for GRN inference methods. As single-cell technologies continue to advance, the integration of epigenomic, proteomic, and spatial data will enable increasingly comprehensive models of gene regulation that more fully capture the complexity of developmental processes.

The conceptual framework of GRNs as phenotypic bottlenecks provides a powerful paradigm for understanding how biological information flows from genome to phenome. By occupying this strategic interface, GRNs transform linear genetic information into dynamic, multidimensional developmental programs. Their architectural properties—modularity, hierarchy, robustness, and adaptability—enable the precise execution of complex developmental processes while maintaining evolutionary flexibility. Continued refinement of methods for GRN reconstruction and analysis will undoubtedly yield deeper insights into developmental mechanisms and their dysregulation in disease.

Gene regulatory networks (GRNs) form the complex control system that directs development, cellular differentiation, and organismal response to environmental cues [12] [14]. At the heart of these networks lie two core components: cis-regulatory elements (CREs) and transcription factors (TFs). CREs are non-coding DNA sequences that regulate the transcription of neighboring genes, while TFs are proteins that bind to these elements to activate or repress gene expression [17]. The interaction between CREs and TFs establishes the regulatory logic that coordinates spatial and temporal gene expression patterns during embryonic development [18] [19]. Understanding this interplay is crucial for deciphering the molecular basis of development, disease mechanisms, and phenotypic diversity across species [20] [21].

Recent technological advances in high-throughput sequencing, single-cell genomics, and machine learning have revolutionized our ability to map and analyze GRNs at unprecedented resolution [20] [12]. This application note provides researchers with current methodologies and analytical frameworks for studying CREs and TFs in developmental contexts, with practical protocols and resources for implementing these approaches in experimental designs.

Core Concepts and Definitions

Cis-Regulatory Elements (CREs)

CREs are functional non-coding DNA regions, typically 100 to 1,000 base pairs long, located on the same DNA molecule as the genes they regulate [17]. They can be categorized into several functional classes:

- Promoters: Located proximal to the transcription start site (TSS), promoters contain core elements where the transcription machinery assembles [17].

- Enhancers: Distal regulatory elements that enhance transcription of target genes, functioning independently of orientation and position relative to the promoter [17].

- Silencers: Elements that repress transcription when bound by appropriate TF complexes [17].

- Insulators: Elements that block enhancer-promoter interactions or establish boundary domains between chromatin regions [17].

These elements frequently occur in clustered configurations termed "cis-regulatory modules" that integrate multiple TF inputs to produce specific transcriptional outputs [17]. During evolution, mutations in CRE sequences have profound effects on phenotypic diversity by altering spatiotemporal gene expression patterns without changing protein-coding sequences [18] [17].

Transcription Factors (TFs)

TFs are proteins with sequence-specific DNA-binding domains that recognize short, degenerate DNA motifs within CREs [20]. The human genome encodes over 1,000 TFs, which can be classified into families based on their DNA-binding domains, such as zinc finger (zf-C2H2), homeobox, and HLH domains [20] [19]. TFs exhibit combinatorial binding preferences, where complex interactions between multiple TFs at cis-regulatory modules determine the final transcriptional output [20] [17].

Table 1: Major Transcription Factor Families and Their Roles in Development

| TF Family | DNA-Binding Domain | Representative Members | Developmental Roles |

|---|---|---|---|

| zf-C2H2 | Zinc finger | ZNF480, ZNF581 | Early embryogenesis, stem cell maintenance [22] |

| Homeobox | Homeodomain | POU5F1 (OCT4), HOXD13 | Anterior-posterior patterning, cell fate specification [22] [23] |

| HLH | Helix-loop-helix | NHLH2, NEUROG1 | Neurogenesis, mesoderm formation [23] |

| HMG | High mobility group | SOX10, SOX2 | Neural crest development, pluripotency [22] [23] |

Experimental Methods for Mapping CRE-TF Interactions

Protocol: Mapping Genome-wide TF Binding with ChIP-Seq

Principle: Chromatin Immunoprecipitation followed by sequencing (ChIP-seq) identifies genome-wide binding sites for a specific transcription factor by crosslinking proteins to DNA, immunoprecipitating with TF-specific antibodies, and sequencing the bound DNA fragments [20].

Reagents and Equipment:

- Crosslinking solution (1% formaldehyde)

- Cell lysis buffer

- Sonication device (e.g., Bioruptor or Covaris)

- Antibody against target transcription factor

- Protein A/G magnetic beads

- DNA purification kit

- High-throughput sequencer

Procedure:

- Crosslinking: Treat cells with 1% formaldehyde for 10 minutes at room temperature to crosslink proteins to DNA.

- Cell Lysis: Lyse cells using ice-cold lysis buffer containing protease inhibitors.

- Chromatin Shearing: Sonicate chromatin to fragment DNA to 200-500 bp fragments.

- Immunoprecipitation: Incubate chromatin with TF-specific antibody overnight at 4°C, then add Protein A/G magnetic beads for 2 hours.

- Washing and Elution: Wash beads sequentially with low-salt, high-salt, and LiCl buffers, then elute crosslinked complexes.

- Reverse Crosslinks: Incubate eluates at 65°C overnight with NaCl to reverse crosslinks.

- DNA Purification: Treat with Proteinase K, then purify DNA using silica membrane columns.

- Library Preparation and Sequencing: Prepare sequencing libraries using commercial kits and sequence on appropriate platform.

Analysis: Align sequences to the reference genome, call peaks using MACS2 [18], and characterize binding motifs using tools such as MEME (de novo motif discovery) or FIMO [18] (scanning for occurrences of known motifs).
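To make the motif-scanning step concrete, the following is a minimal sketch of scoring sequence windows against a position weight matrix. It illustrates the general log-odds idea only and is not how FIMO or MEME are implemented. The count matrix, pseudocount, background frequency, threshold, and example sequence are all hypothetical values chosen for illustration.

```python
import math

# Hypothetical base counts per motif position (consensus "AGCA"); not a real TF motif.
COUNTS = {
    "A": [8, 0, 0, 9],
    "C": [1, 0, 10, 0],
    "G": [0, 10, 0, 0],
    "T": [1, 0, 0, 1],
}


def build_log_odds(counts, pseudocount=0.5, background=0.25):
    """Convert base counts into a log2-odds matrix against a uniform background."""
    width = len(counts["A"])
    matrix = []
    for pos in range(width):
        total = sum(counts[b][pos] for b in "ACGT") + 4 * pseudocount
        matrix.append({
            b: math.log2(((counts[b][pos] + pseudocount) / total) / background)
            for b in "ACGT"
        })
    return matrix


def scan(sequence, matrix, threshold):
    """Return (start, window, score) for every window scoring at or above threshold."""
    width = len(matrix)
    hits = []
    for start in range(len(sequence) - width + 1):
        window = sequence[start:start + width]
        if any(base not in "ACGT" for base in window):
            continue  # skip windows containing ambiguous bases
        score = sum(matrix[i][base] for i, base in enumerate(window))
        if score >= threshold:
            hits.append((start, window, round(score, 2)))
    return hits


pwm = build_log_odds(COUNTS)
hits = scan("TTAGCATTAGCAGG", pwm, threshold=5.0)  # threshold is an arbitrary choice
for start, window, score in hits:
    print(start, window, score)
```

In practice, candidate sequences would be taken from under called peaks, and significance would be assessed with a proper statistical model rather than a fixed score cutoff.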

Protocol: Profiling Chromatin Accessibility with ATAC-Seq

Principle: The Assay for Transposase-Accessible Chromatin using sequencing (ATAC-seq) identifies accessible genomic regions using a hyperactive Tn5 transposase that preferentially inserts sequencing adapters into open chromatin [18].

Reagents and Equipment:

- Nuclei isolation buffer

- Tn5 transposase (commercially available)

- DNA purification beads

- Library amplification reagents

- High-throughput sequencer

Procedure:

- Nuclei Isolation: Harvest cells and isolate nuclei using ice-cold lysis buffer.

- Tagmentation Reaction: Incubate nuclei with Tn5 transposase for 30 minutes at 37°C.

- DNA Purification: Purify tagmented DNA using SPRI beads.

- Library Amplification: Amplify libraries with barcoded primers for 10-12 cycles.

- Size Selection: Clean up libraries with SPRI beads to remove large fragments.

- Quality Control and Sequencing: Assess library quality and sequence on appropriate platform.

Analysis: Process data through alignment, peak calling, and motif analysis to identify putative CREs and bound TFs.

Protocol: Functional Screening with Massively Parallel Reporter Assays (MPRAs)

Principle: MPRAs enable high-throughput functional testing of thousands of candidate CRE sequences by cloning them into reporter constructs, introducing them into cells, and measuring their transcriptional activity via sequencing [20] [21].

Reagents and Equipment:

- Oligonucleotide library containing candidate CREs

- Reporter vector with minimal promoter and unique barcode

- Gibson Assembly or Golden Gate cloning reagents

- Mammalian cell line relevant to developmental process

- Transfection reagent

- RNA and DNA extraction kits

- High-throughput sequencer

Procedure:

- Library Design: Design oligonucleotides containing candidate CRE sequences with flanking homology arms for cloning.

- Library Cloning: Use Gibson Assembly to clone CRE library into reporter vector upstream of a minimal promoter and unique barcode.

- Transformation: Transform assembled library into competent E. coli and harvest plasmid DNA.

- Cell Transfection: Transfect reporter library into target cell type (e.g., stem cells or differentiated progenitors).

- RNA/DNA Harvest: Extract total RNA and genomic DNA 48 hours post-transfection.

- Library Preparation and Sequencing: Convert RNA to cDNA and amplify barcode regions from both cDNA and DNA samples for sequencing.

- Analysis: Calculate enrichment of barcodes in RNA compared to DNA to determine CRE activity.
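As a rough illustration of the final analysis step, the sketch below computes a per-CRE activity score as the median log2 ratio of depth-normalized RNA to DNA barcode counts. The barcode table, the minimum DNA count filter, and the pseudocount are hypothetical choices, not values from any published MPRA pipeline.

```python
import math
from collections import defaultdict
from statistics import median

# Hypothetical barcode table: (CRE identifier, DNA count, RNA count).
BARCODES = [
    ("enhancer_A", 120, 480),
    ("enhancer_A", 100, 390),
    ("enhancer_A", 3, 40),       # too few DNA reads; removed by the filter below
    ("negative_ctrl", 110, 105),
    ("negative_ctrl", 95, 100),
]


def cre_activity(barcodes, min_dna=10, pseudocount=1.0):
    """Median log2(RNA/DNA) per CRE after normalizing each library to its total depth."""
    dna_total = sum(dna for _, dna, _ in barcodes)
    rna_total = sum(rna for _, _, rna in barcodes)
    ratios = defaultdict(list)
    for cre, dna, rna in barcodes:
        if dna < min_dna:
            continue  # poorly represented barcodes give unstable ratios
        dna_norm = (dna + pseudocount) / dna_total
        rna_norm = (rna + pseudocount) / rna_total
        ratios[cre].append(math.log2(rna_norm / dna_norm))
    return {cre: median(values) for cre, values in ratios.items()}


activity = cre_activity(BARCODES)
for cre, score in activity.items():
    print(cre, round(score, 2))
```

Because the scores are relative to library depth, they are most informative when compared against negative-control sequences measured in the same experiment.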

Computational Analysis of GRNs in Development

Machine Learning Approaches for GRN Inference

Machine learning has become indispensable for reconstructing GRNs from omics data [12] [14]. These methods can be categorized into several paradigms:

Supervised Learning: Utilizes known TF-target interactions to train models that predict novel regulatory relationships. Methods include:

- Random Forest-based: GENIE3 and dynGENIE3 [12]

- Deep Learning-based: DeepSEM, GRNFormer, and STGRNS using transformer architectures [12]

Unsupervised Learning: Identifies regulatory relationships without prior knowledge using:

- Mutual Information: ARACNE and CLR algorithms [20] [12]

- Regression Methods: LASSO and linear regression [12] [23]

Single-Cell GRN Inference: Specialized methods like DeepIMAGER and RSNET leverage single-cell RNA-seq data to reconstruct cell-type-specific GRNs [12].
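The mutual-information approach mentioned above can be sketched in a few lines. The example below estimates pairwise mutual information on discretized expression states and then applies a data processing inequality (DPI) filter in the spirit of ARACNE, removing the weakest edge of each gene triplet. It is a simplified illustration, not the ARACNE implementation; the gene names and the binary expression states are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def mutual_information(x, y):
    """Plug-in estimate of mutual information (in bits) between two discrete vectors."""
    n = len(x)
    joint, px, py = Counter(zip(x, y)), Counter(x), Counter(y)
    return sum(
        (c / n) * math.log2(c * n / (px[a] * py[b]))
        for (a, b), c in joint.items()
    )


def dpi_filter(mi):
    """Drop the weakest edge of every triplet, treating it as a likely indirect link."""
    genes = sorted({g for pair in mi for g in pair})
    removed = set()
    for trio in combinations(genes, 3):
        pairs = list(combinations(trio, 2))
        weakest = min(pairs, key=lambda p: mi[p])
        if mi[weakest] < min(mi[p] for p in pairs if p != weakest):
            removed.add(weakest)
    return {pair: value for pair, value in mi.items() if pair not in removed}


# Hypothetical discretized expression (0 = low, 1 = high) across 16 cells.
# geneA follows tf with two mismatches; geneB follows geneA with two more,
# mimicking a regulatory chain tf -> geneA -> geneB.
STATES = {
    "tf":    [0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1],
    "geneA": [1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1],
    "geneB": [1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1],
}

mi = {
    (a, b): mutual_information(STATES[a], STATES[b])
    for a, b in combinations(sorted(STATES), 2)
}
network = dpi_filter(mi)
for pair, value in sorted(network.items()):
    print(pair, round(value, 3))
```

In this toy chain the tf-geneB association is the weakest of the three and is removed, leaving only the two direct links. Real data would need continuous-value estimators and significance testing before filtering.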

Table 2: Performance Comparison of GRN Inference Methods Across Developmental Systems

| Method | Learning Type | Data Input | Accuracy | Developmental Applications |

|---|---|---|---|---|

| GENIE3 | Supervised | Bulk RNA-seq | Moderate | Early embryonic patterning [12] |

| DeepSEM | Supervised (DL) | Single-cell RNA-seq | High | Cell fate transitions [12] |

| ARACNE | Unsupervised | Bulk RNA-seq | Moderate | Tissue-specific regulation [23] |

| GRN-VAE | Unsupervised (DL) | Single-cell RNA-seq | High | Neural development [12] |

| LASSO | Unsupervised | Bulk RNA-seq | Moderate | Glioma progression [23] |

Protocol: Constructing GRNs from Single-Cell RNA-seq Data

Principle: This protocol details GRN inference from single-cell transcriptomic data using the RTN package in R, which combines mutual information and bootstrap resampling to identify robust TF-target relationships [23].

Software Requirements:

- R programming environment

- RTN package from Bioconductor

- Single-cell RNA-seq data (count matrix)

Procedure:

- Data Preprocessing:

- Load single-cell RNA-seq count matrix

- Filter low-quality cells and genes

- Normalize counts using SCTransform or log-normalization

- Network Reconstruction:

- Create TNI object: `tni <- tni.constructor(exprData, regulatoryElements)`

- Compute mutual information: `tni <- tni.permutation(tni)`

- Bootstrap analysis: `tni <- tni.bootstrap(tni)`

- Apply ARACNE algorithm (DPI filter): `tni <- tni.dpi.filter(tni)`

- Regulon Analysis:

- Create TNA object: `tna <- tni2tna.preprocess(tni, phenotype = phenotype)`

- Compute regulon activity (two-tailed GSEA): `tna <- tna.gsea2(tna)`

- Perform survival association (if applicable): this step is provided by the companion RTNsurvival package (for example, `tnsKM` or `tnsCox`) rather than by RTN itself.

- Visualization and Interpretation:

- Generate hierarchical clustering of regulons

- Plot regulon activity across cell types or conditions

- Identify master regulator TFs driving developmental transitions

Application Note: This approach successfully identified SOX10 as a key regulator in glioma pathogenesis and revealed distinct regulatory networks associated with neural development [23].

Developmental Dynamics of CREs and TFs

Embryonic Expression Patterns

Systematic characterization of TF expression during embryogenesis reveals critical insights into developmental GRNs. A comprehensive study in Drosophila profiled 708 TFs across embryonic stages, finding that over 96% are expressed during embryogenesis, with more than half showing specific expression in the developing central nervous system [19]. TFs are enriched in early embryogenesis and exhibit dynamic spatiotemporal patterns, with many showing multi-organ expression while approximately 21% demonstrate single-organ specificity [19].

In mammalian development, studies of human biparental and uniparental embryos revealed distinct TF expression modules, including maternal RNA degradation, minor zygotic genome activation (ZGA), major ZGA, and mid-preimplantation genome activation patterns [22]. Key TFs such as POU5F1 (OCT4), ZNF480, and ZNF581 serve as hub regulators in early embryonic GRNs [22].

Evolutionary Conservation and Divergence

Comparative analysis of CREs and TF binding sites across species reveals both conserved and species-specific regulatory features. Cross-species studies of mammals, fish, and chicken demonstrated that the distance between TF binding site-clustered regions (TFCRs) and promoters decreases during embryonic development, while regulatory complexity increases from simpler to more complex organisms [18]. Machine learning models identified the TFCR-promoter distance as the most significant factor influencing gene expression regulation across species [18].

The Scientist's Toolkit: Essential Research Reagents

Table 3: Key Research Reagents for Studying CREs and TFs

| Reagent/Resource | Function | Example Applications | Key References |

|---|---|---|---|

| CIS-BP Database | Catalog of TF motif specificities | Identifying putative TF binding sites | [18] |

| JASPAR Database | Curated collection of TF binding profiles | Motif enrichment analysis | [18] |

| ATAC-seq Kit | Profiling chromatin accessibility | Mapping CREs in rare cell populations | [18] |

| ChIP-seq Grade Antibodies | Immunoprecipitation of specific TFs | Genome-wide TF binding mapping | [20] |

| CRISPR Activation/Inhibition | Perturbation of CRE function | Functional validation of enhancers | [20] |

| MPRA Library Platforms | High-throughput CRE screening | Testing thousands of sequences in parallel | [20] [21] |

Regulatory Logic and Grammar Visualization

Diagram 1: Combinatorial Logic of CRE-TF Interactions. Transcription factors integrate signaling inputs and bind cooperatively or competitively to cis-regulatory elements to control RNA polymerase recruitment and target gene transcription.

The integrated analysis of cis-regulatory elements and transcription factors provides fundamental insights into the regulatory code governing developmental processes. The experimental and computational approaches outlined in this application note enable researchers to systematically map GRN architecture and dynamics across diverse developmental contexts. As single-cell technologies and deep learning methods continue to advance, they promise to further unravel the complex regulatory logic that transforms genetic information into organized cellular systems and morphological structures. These advances have profound implications for understanding developmental disorders, evolutionary processes, and designing targeted therapeutic interventions.

The purple sea urchin, Strongylocentrotus purpuratus, has served as a foundational model organism in developmental biology for over 150 years, providing unique insights into the gene regulatory networks (GRNs) that control embryogenesis [24]. As echinoderms, sea urchins occupy a critical phylogenetic position as a sister group to chordates, having diverged from the lineage leading to humans before the Cambrian period over 500 million years ago [24]. This evolutionary relationship makes them exceptionally valuable for comparative studies aimed at understanding the evolution of developmental mechanisms. Gene regulatory networks represent complex systems of genes, transcription factors, and signaling molecules that interact to control gene expression during development, differentiation, and cellular responses to environmental cues [25] [12]. The sea urchin model has been instrumental in deciphering the structure, logic, and evolution of these networks, particularly through the detailed experimental analysis of its endomesoderm specification network [26].

The sea urchin genome, roughly a quarter the size of the human genome but with a comparable number of genes, reveals remarkable conservation of developmental pathways and gene families relevant to human biology [24]. For instance, the sea urchin genome contains orthologs of numerous human disease-associated genes, including 65 genes of the ATP-binding cassette transporter superfamily (compared to 48 in humans), mutations in which can cause degenerative, metabolic, and neurological disorders [24]. This conservation extends to core signaling pathways (Notch, Wnt, and Hedgehog) that control fundamental processes in development and are frequently dysregulated in human diseases, including cancer [24]. The experimental advantages of sea urchins, including ease of laboratory propagation, synchronous embryo cultures, transparent embryos, and rapid embryogenesis, have enabled the construction of detailed, experimentally validated GRN models that explain cell fate specification and differentiation at a system level [26] [24].

Table 1: Key Advantages of Sea Urchin Models for GRN Research

| Feature | Application in GRN Research |

|---|---|

| Transparent embryos | Enables real-time visualization of developmental processes and gene expression patterns. |

| Synchronous development | Facilitates precise temporal analysis of gene activation and regulatory cascades. |

| Experimental accessibility | Allows for microsurgical manipulations, micromere isolations, and perturbation experiments. |

| Sequenced genome | Permits cross-species comparative genomics and identification of conserved regulatory elements. |

| Deuterostome phylogeny | Provides evolutionary insights relevant to chordates and humans. |

Evolutionary Rearrangements in Genomic Architecture

Comparative analysis of mitochondrial DNA (mtDNA) between sea urchins and humans provides a clear example of how genomic architecture evolves over deep time. A foundational study comparing the mtDNA of Strongylocentrotus franciscanus (sea urchin) and Homo sapiens (human) revealed a significant evolutionary rearrangement in gene order [27]. Specifically, the genes encoding 16S rRNA and cytochrome oxidase subunit I are directly adjacent in sea urchin mtDNA, whereas in human and other mammalian mtDNAs, these two genes are separated by a region containing unidentified reading frames 1 and 2 [27]. Despite this difference in physical gene order, the study found that gene polarity—the direction of transcription—has been conserved.

This rearrangement is interpreted as an event that occurred in the sea urchin lineage after its last common ancestor with mammals [27]. This finding highlights a fundamental principle of GRN evolution: the regulatory logic and relationships (the "software") can be maintained even when the physical arrangement of genetic elements (the "hardware") changes. Such comparative genomic studies establish a baseline for understanding the rate and nature of genomic change and provide a critical context for interpreting differences in the structure of nuclear-encoded gene regulatory networks between species.

The Sea Urchin Endomesoderm GRN: A Model of Dynamic Control

The gene regulatory network controlling endomesoderm specification in the sea urchin embryo represents one of the most completely understood developmental GRNs, providing a system-level explanation of how dynamic spatial and temporal patterns of gene expression are controlled [26]. This network is encoded in the genomic DNA via cis-regulatory modules—clusters of transcription factor binding sites that control gene expression. These modules execute logical operations (AND, OR, NOT) on their inputs to determine when and where genes are activated [26].

Circuitry for Dynamic Patterning: The Wnt8-Delta Pathway

A prime example of the explanatory power of this GRN is the subcircuit that controls the dynamic, non-overlapping expression of the signaling ligands Wnt8 and Delta, which is crucial for segregating the mesodermal and endodermal territories [26]. The following diagram illustrates the core regulatory logic of this dynamic process:

Figure 1: GRN Circuit for Wnt8 and Delta Segregation. This subcircuit shows the regulatory interactions that lead to the exclusive expression of Wnt8 and Delta in different cell tiers. The dashed line represents intercellular signaling.

The execution of this regulatory program in space and time proceeds through several phases. Initially, at approximately 6 hours post-fertilization (hpf), both wnt8 and delta are co-expressed in the micromeres. The wnt8 expression expands vegetally due to a positive feedback loop with nuclear β-catenin, while blimp1 expression clears itself through auto-repression [26]. By 15 hpf, blimp1 represses hesc in the micromeres, allowing delta expression to persist there even after the initial activator pmar1 is turned off. Consequently, wnt8 and delta expression become segregated: delta remains in the skeletogenic micromere descendants, while wnt8 is active in the adjacent non-skeletogenic mesoderm (NSM) precursors [26]. This precise spatiotemporal patterning is fundamental for the correct specification of mesodermal and endodermal cell fates.
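The logic described above can be explored with a toy Boolean model. The sketch below is a deliberate simplification and not the published network: it covers only a single micromere-lineage cell, treats pmar1 and blimp1 as externally scheduled inputs, ignores wnt8, β-catenin feedback, and blimp1 auto-repression, and assumes that hesc is repressed by either pmar1 or blimp1 and that delta is expressed whenever hesc is absent. The update rules and time steps are assumptions made for illustration.

```python
def step(state, inputs, knockdown=frozenset()):
    """One synchronous update of the toy circuit (rules are simplifying assumptions)."""
    nxt = {
        "pmar1": inputs["pmar1"],                                 # external input
        "blimp1": inputs["blimp1"],                               # external input
        "hesc": not (state["pmar1"] or state["blimp1"]),          # repressed by either
        "delta": not state["hesc"],                               # on when hesc is off
    }
    for gene in knockdown:
        nxt[gene] = False  # a knockdown forces the gene product to be absent
    return nxt


def simulate(schedule, knockdown=frozenset()):
    """Run the circuit through a schedule of inputs and return the full trajectory."""
    state = {"pmar1": False, "blimp1": False, "hesc": True, "delta": False}
    for gene in knockdown:
        state[gene] = False
    trajectory = [state]
    for inputs in schedule:
        state = step(state, inputs, knockdown)
        trajectory.append(state)
    return trajectory


# Hypothetical input schedule: pmar1 is on early, then blimp1 takes over.
MICROMERE = [{"pmar1": True, "blimp1": False}] * 3 + [{"pmar1": False, "blimp1": True}] * 4
# A cell that never receives either input (outside the micromere lineage).
OTHER_CELL = [{"pmar1": False, "blimp1": False}] * 7

wild_type = simulate(MICROMERE)
blimp1_kd = simulate(MICROMERE, knockdown=frozenset({"blimp1"}))
hesc_kd_other = simulate(OTHER_CELL, knockdown=frozenset({"hesc"}))

print("wild type, final delta:", wild_type[-1]["delta"])
print("blimp1 knockdown, final delta:", blimp1_kd[-1]["delta"])
print("hesc knockdown outside micromeres, final delta:", hesc_kd_other[-1]["delta"])
```

Under these assumed rules, delta persists after pmar1 switches off only if blimp1 keeps hesc repressed, and removing hesc allows delta expression in a cell that would otherwise lack it.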

Protocol: Perturbation Analysis of the Wnt8/Delta Circuit

Objective: To experimentally validate the regulatory interactions within the Wnt8/Delta subcircuit by perturbing key nodes and observing the resulting expression patterns.

Materials:

- Sea urchin gametes (S. purpuratus)

- Morpholino oligonucleotides targeting blimp1, pmar1, and hesc mRNA for knockdown experiments.

- mRNA for microinjection for targeted gene overexpression.

- In situ hybridization reagents for visualizing wnt8 and delta mRNA spatial patterns.

- Antibodies for detecting Wnt8 and Delta proteins (if available).

Method:

- Embryo Preparation: Obtain gametes from adult sea urchins by KCl injection. Fertilize eggs in filtered seawater and culture at 15°C to obtain synchronized embryos [26].

- Experimental Perturbation: At the 1-cell stage, microinject fertilized eggs with one of the following:

- Knockdown group: Antisense morpholinos against blimp1, pmar1, or hesc.

- Overexpression group: Synthetic mRNA for blimp1.

- Control group: Standard control morpholino or mRNA.

- Fixation and Staining: At key developmental time points (e.g., 7 hpf, 12 hpf, 18 hpf), fix batches of embryos. Perform two-color fluorescent in situ hybridization to detect wnt8 and delta transcripts simultaneously.

- Imaging and Analysis: Capture high-resolution images of stained embryos using a confocal microscope. Analyze the expression domains of wnt8 and delta across the different experimental conditions compared to controls.

Expected Outcomes:

- blimp1 knockdown should result in the loss of wnt8 expression and a failure to activate delta in the micromeres.

- hesc knockdown should lead to ectopic delta expression outside the micromere lineage.

- blimp1 overexpression should prematurely repress wnt8 and expand the delta expression domain.

This protocol allows for a functional test of the GRN model, where the predicted changes in expression patterns upon node perturbation serve to validate the proposed regulatory linkages [26].

Computational Inference of GRNs: From Data to Models

The detailed, experimentally derived sea urchin GRN provides a biological benchmark for developing and validating computational methods that infer network structures from genomic data. Inferring GRNs computationally involves identifying regulatory interactions between transcription factors and their target genes from high-throughput data, such as transcriptomics (bulk or single-cell RNA-seq) and epigenomics (ChIP-seq, ATAC-seq) [12] [10].

Properties and Machine Learning Approaches

Biological GRNs exhibit specific structural properties that computational models aim to capture. They are sparse (each gene has few direct regulators), contain directed edges and feedback loops, have asymmetric, heavy-tailed distributions of in- and out-degree (reflecting the presence of "master regulators"), and are modular, with genes groupable into functional units [28]. Modern machine learning methods for GRN inference have evolved from classical algorithms (e.g., GENIE3, which uses Random Forests) to sophisticated deep learning models [12]. These can be categorized by their learning paradigm:

- Supervised Learning: Trained on datasets with known regulatory interactions to predict new targets (e.g., DeepSEM, STGRNS).

- Unsupervised Learning: Identify patterns and relationships without pre-labeled data (e.g., ARACNE, which uses information theory).

- Semi-Supervised and Contrastive Learning: Leverage both labeled and unlabeled data or use contrastive objectives to improve inference (e.g., GRGNN, GCLink) [12].
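The structural properties listed earlier in this section (sparsity, directed edges, and skewed in- and out-degree) are straightforward to check on any inferred network. The sketch below summarizes a directed edge list; the edges and gene names are hypothetical and serve only to show the calculation.

```python
from collections import Counter

# Hypothetical directed edges (regulator, target); not taken from any real network.
EDGES = [
    ("tfA", "g1"), ("tfA", "g2"), ("tfA", "g3"), ("tfA", "tfB"),
    ("tfB", "g3"), ("tfB", "g4"),
    ("g4", "tfA"),  # closes a feedback loop tfA -> tfB -> g4 -> tfA
]


def summarize(edges):
    """Compute edge density and degree statistics for a directed network."""
    genes = sorted({gene for edge in edges for gene in edge})
    out_degree = Counter(regulator for regulator, _ in edges)
    in_degree = Counter(target for _, target in edges)
    n = len(genes)
    top_regulator, top_out = out_degree.most_common(1)[0]
    return {
        "n_genes": n,
        "n_edges": len(edges),
        # fraction of all possible directed edges (self-loops excluded) that are present
        "density": len(edges) / (n * (n - 1)),
        "max_in_degree": max(in_degree.values()),
        "top_regulator": top_regulator,
        "top_out_degree": top_out,
    }


summary = summarize(EDGES)
for key, value in summary.items():
    print(key, value)
```

A low density together with a small maximum in-degree and a few regulators carrying most outgoing edges is the pattern the text describes; on genome-scale networks the full degree distributions would be examined rather than only their maxima.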

A state-of-the-art method, GRLGRN, exemplifies the deep learning approach. It uses a graph transformer network to extract implicit links from a prior GRN and a matrix of single-cell gene expression profiles. It then employs attention mechanisms to refine gene features (embeddings) and uses these to predict regulatory relationships with high accuracy, demonstrating superior performance on benchmark datasets [10].

Protocol: Inferring a GRN from scRNA-seq Data with GRLGRN

Objective: To reconstruct a gene regulatory network from a single-cell RNA-sequencing dataset using the GRLGRN model.

Materials:

- scRNA-seq Dataset: A gene expression matrix (rows: cells, columns: genes) in a standard format (e.g., CSV, H5AD).

- Prior GRN (optional): A graph of known regulatory interactions in a compatible format (e.g., edge list).

- Computational Environment: Python with libraries like PyTorch and PyTorch Geometric.

Method:

- Data Preprocessing:

- Filter the scRNA-seq matrix to include only highly variable genes.

- Normalize the expression data (e.g., library size normalization and log-transformation).

- If a prior GRN is available, format it as a directed adjacency matrix.

- Model Setup and Training:

- Install the GRLGRN package or implement the architecture as described [10].

- The model's gene embedding module uses a graph transformer to extract implicit links from the prior network and a Graph Convolutional Network (GCN) to generate gene embeddings.

- The feature enhancement module applies a Convolutional Block Attention Module (CBAM) to refine these embeddings.

- The output module scores potential regulatory edges between genes.

- Train the model using the preprocessed expression data and prior network, optimizing the loss function which includes a graph contrastive learning regularization term to prevent over-smoothing.

- Network Inference and Validation:

- Run the trained model to obtain a ranked list of potential regulatory edges.

- Compare the inferred network against a ground-truth network (if available) using metrics like Area Under the Receiver Operating Characteristic curve (AUROC) and Area Under the Precision-Recall Curve (AUPRC) [10].

Expected Outcomes: The output is a predicted GRN with weighted edges representing the confidence of each regulatory interaction. This network can be visualized and analyzed to identify hub genes and key regulatory modules.
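For the validation step, AUROC and AUPRC can be computed directly from the ranked edge scores. The sketch below is a plain-Python illustration: AUROC is computed as the fraction of (true edge, false edge) pairs ranked correctly, and AUPRC is approximated by average precision, which is one common estimator. The edge names, scores, and ground-truth set are hypothetical.

```python
def auroc(scored_edges, truth):
    """Probability that a randomly chosen true edge outscores a randomly chosen false edge."""
    pos = [score for edge, score in scored_edges if edge in truth]
    neg = [score for edge, score in scored_edges if edge not in truth]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0  # ties count as half
        for p in pos for n in neg
    )
    return wins / (len(pos) * len(neg))


def average_precision(scored_edges, truth):
    """Mean of the precision values at the rank of each true edge (an AUPRC estimator)."""
    ranked = sorted(scored_edges, key=lambda item: item[1], reverse=True)
    hits, total = 0, 0.0
    for rank, (edge, _) in enumerate(ranked, start=1):
        if edge in truth:
            hits += 1
            total += hits / rank
    return total / hits


# Hypothetical predictions: ((regulator, target), confidence score).
PREDICTIONS = [
    (("tf1", "g1"), 0.9),
    (("tf1", "g2"), 0.8),
    (("tf2", "g1"), 0.7),
    (("tf2", "g3"), 0.4),
    (("tf3", "g2"), 0.2),
    (("tf3", "g3"), 0.1),
]
# Hypothetical ground-truth edges.
TRUTH = {("tf1", "g1"), ("tf2", "g1"), ("tf3", "g3")}

auroc_value = auroc(PREDICTIONS, TRUTH)
auprc_value = average_precision(PREDICTIONS, TRUTH)
print("AUROC:", round(auroc_value, 3))
print("AUPRC (average precision):", round(auprc_value, 3))
```

Because true regulatory edges are rare relative to all possible gene pairs, AUPRC is usually the more informative of the two metrics on real benchmarks.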

Table 2: Selected Computational Tools for GRN Inference

| Tool | Learning Type | Key Technology | Input Data |

|---|---|---|---|

| GENIE3 | Supervised | Random Forest | Bulk RNA-seq |

| GRN-VAE | Unsupervised | Variational Autoencoder | Single-cell RNA-seq |

| STGRNS | Supervised | Transformer | Single-cell RNA-seq |

| GRLGRN | Supervised | Graph Transformer + GCN | scRNA-seq + Prior GRN |

| GCLink | Contrastive | Graph Contrastive Learning | Single-cell RNA-seq |

The following workflow diagram summarizes the computational inference process:

Figure 2: Computational GRN Inference Workflow. This diagram outlines the key steps in inferring a gene regulatory network from single-cell RNA-seq data using a deep learning model like GRLGRN.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Research Reagents for GRN Analysis

| Reagent / Material | Function in GRN Research |

|---|---|

| Morpholino Oligonucleotides | Gene-specific knockdown tools to inhibit mRNA translation or splicing, enabling functional perturbation of network nodes. |

| CRISPR/Cas9 Components | For targeted gene knockouts or edits in the genome to study the function of specific transcription factors or cis-regulatory modules. |

| cDNA/mRNA for Microinjection | Tools for gene overexpression to test for sufficiency in activating downstream network components. |

| In Situ Hybridization Kits | For spatial localization of mRNA transcripts, allowing visualization of gene expression patterns in wild-type and perturbed embryos. |

| ChIP-seq and ATAC-seq Kits | To map transcription factor binding sites (ChIP-seq) and open chromatin regions (ATAC-seq), identifying physical DNA-protein interactions. |

| scRNA-seq Library Prep Kits | To generate transcriptome-wide gene expression data from individual cells, providing the primary data for computational network inference. |

| Specific Antibodies | For protein detection and localization (immunohistochemistry) and for chromatin immunoprecipitation (ChIP). |

The comparative analysis of gene regulatory networks, from model organisms like the sea urchin to humans, provides a powerful framework for understanding the evolutionary principles of developmental programming. The sea urchin endomesoderm GRN demonstrates how the precise execution of logical operations encoded in the genome directs the formation of a complex organism. The evolutionary rearrangement of its mitochondrial genome alongside the conservation of core signaling pathways and network motifs highlights the dual processes of change and constraint that shape biological systems.

The integration of detailed experimental models, like the sea urchin GRN, with advanced computational inference methods creates a virtuous cycle. Biological discoveries provide ground-truthed benchmarks for validating and improving algorithms, while computational tools enable the exploration of network properties and the prediction of new interactions at scale. This synergistic approach, leveraging both established model organisms and cutting-edge technology, continues to shed light on the fundamental architecture of life, with profound implications for understanding human development, health, and disease.

From Data to Networks: Modern Computational Methods for GRN Inference in Developmental Biology

In developmental biology, a central goal is to understand the precise gene regulatory networks (GRNs) that dictate cell fate decisions, differentiation, and morphogenesis. Gene regulatory networks describe the complex interplay between transcription factors (TFs) and their target genes [29]. Traditional bulk sequencing methods average signals across thousands of cells, obscuring the cellular heterogeneity that is fundamental to developmental processes. The advent of single-cell sequencing technologies has revolutionized our capacity to deconstruct this heterogeneity, providing high-resolution maps of the transcriptome (scRNA-seq) and epigenome, notably chromatin accessibility (scATAC-seq), across individual cells within a tissue [30] [31].

While powerful alone, these modalities are most informative when integrated. scRNA-seq reveals the expression levels of genes, including potential TFs, while scATAC-seq identifies accessible chromatin regions, which often denote active regulatory elements like promoters and enhancers [29]. The integration of scRNA-seq and scATAC-seq enables the inference of context-specific GRNs by linking the activity of a regulatory element (from scATAC-seq) to the expression of a potential target gene (from scRNA-seq), thereby uncovering the mechanistic drivers of developmental pathways [29] [32]. This Application Note details the protocols and analytical frameworks for integrating single-cell multi-omics data to reconstruct predictive GRNs, with a specific focus on applications in developmental research.
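One simple way to link a regulatory element to a candidate target gene is to correlate the element's accessibility with the gene's expression across cells or cell groups. The sketch below does this with a Pearson correlation over a handful of hypothetical metacells; the values, peak names, and the 0.8 cutoff are illustrative assumptions, and real analyses would also restrict candidates by genomic distance and test significance.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient; returns 0.0 if either vector is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        return 0.0
    return cov / (sd_x * sd_y)


# Hypothetical normalized values across six metacells ordered along a trajectory.
GENE_EXPRESSION = [0.1, 0.4, 1.2, 2.0, 2.8, 3.1]
PEAK_ACCESSIBILITY = {
    "peak_1": [0.0, 0.2, 0.5, 0.9, 1.0, 1.0],   # opens as the gene switches on
    "peak_2": [0.8, 0.1, 0.7, 0.2, 0.6, 0.3],   # unrelated fluctuation
}

correlations = {
    peak: pearson(values, GENE_EXPRESSION)
    for peak, values in PEAK_ACCESSIBILITY.items()
}
linked_peaks = [peak for peak, r in correlations.items() if r >= 0.8]  # arbitrary cutoff

for peak, r in correlations.items():
    print(peak, round(r, 3))
print("candidate regulatory links:", linked_peaks)
```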

Computational Integration Strategies and Benchmarking

A significant challenge in single-cell multi-omics is the computational integration of data from different molecular layers, which inherently reside in distinct feature spaces (e.g., genomic regions for ATAC-seq vs. genes for RNA-seq) [32]. Several computational strategies have been developed to address this, which can be broadly categorized as follows.

- Feature Conversion Methods: This straightforward approach converts one modality into the feature space of another using prior biological knowledge. For example, scATAC-seq data is often linked to genes by associating accessible chromatin peaks with the promoters or gene bodies of nearby genes, after which single-omics integration tools can be applied [32]. While simple, this method can lead to information loss and is highly dependent on the accuracy of the prior knowledge [32].

- Manifold Alignment Methods: These methods aim to find a shared, low-dimensional representation (manifold) of cells from different omics layers without explicit feature conversion. They typically rely on the assumption that the underlying cellular state is consistent across modalities [32].

- Graph-Linked Unified Embedding: A more recent and powerful approach is exemplified by GLUE (Graph-Linked Unified Embedding), which uses a knowledge-based "guidance graph" to explicitly model regulatory interactions between different omics layers during the integration process [32]. For instance, vertices in the graph can represent genes (from scRNA-seq) and accessible chromatin regions (from scATAC-seq), with edges connecting regions to their putative target genes. This biologically intuitive framework has demonstrated superior performance in terms of accuracy, robustness, and scalability [32].
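As an illustration of the feature conversion strategy listed above, the following minimal sketch collapses a cell-by-peak matrix into a cell-by-gene "activity" matrix. The peak-to-gene mapping, the gene names, and the function name `gene_activity` are all hypothetical and chosen for the example; real pipelines derive the mapping from genome annotations and often weight peaks rather than summing them.

```python
# Toy feature conversion: express scATAC-seq peaks in gene space.
# All names and values here are illustrative assumptions, not real data.

def gene_activity(cell_by_peak, peak_to_genes, gene_names):
    """Sum the accessibility of every peak assigned to each gene, per cell."""
    column = {gene: k for k, gene in enumerate(gene_names)}
    converted = []
    for row in cell_by_peak:
        scores = [0] * len(gene_names)
        for peak_idx, value in enumerate(row):
            # A peak may map to zero, one, or several genes.
            for gene in peak_to_genes.get(peak_idx, []):
                scores[column[gene]] += value
        converted.append(scores)
    return converted

# Hypothetical prior knowledge: peaks 0 and 1 lie near GeneA, peak 2 near GeneB,
# and peak 3 has no nearby gene (its signal is lost in the conversion).
peak_to_genes = {0: ["GeneA"], 1: ["GeneA"], 2: ["GeneB"], 3: []}
gene_names = ["GeneA", "GeneB"]

# Three cells by four peaks, binary accessibility.
cell_by_peak = [
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [1, 1, 0, 0],
]

activity = gene_activity(cell_by_peak, peak_to_genes, gene_names)
print(activity)
```

Peak 3 contributes nothing to the converted matrix, which is a small instance of the information loss noted for this strategy.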

Systematic benchmarking is essential for choosing among these methods. A comprehensive evaluation using gold-standard datasets from technologies that profile scRNA-seq and scATAC-seq simultaneously (e.g., SNARE-seq, SHARE-seq) has shown that methods like GLUE achieve a high level of biological conservation and omics mixing while minimizing single-cell level alignment errors [32]. Furthermore, methods based on graph-linked embedding, or those that aggregate cells within biological replicates to form 'pseudobulks', have shown high concordance with ground truth data and robustness to inaccuracies in prior regulatory knowledge [32] [33].
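Single-cell alignment error on paired data is commonly summarized with FOSCTTM (fraction of samples closer than the true match). The sketch below is a minimal pure-Python version under the assumption that row i of each embedding comes from the same physical cell; the toy coordinates are invented for illustration, and a real evaluation would use the embeddings produced by the integration method.

```python
import math

def foscttm(x, y):
    """Mean fraction of cells closer than the true match (0 = perfect alignment).

    x and y are lists of equal length holding embedding coordinates for the
    same cells in two modalities, so x[i] and y[i] are a true pair.
    """
    n = len(x)
    total = 0.0
    for i in range(n):
        d_true = math.dist(x[i], y[i])
        # Cells of the other modality that sit closer to x[i] than its true partner.
        closer_in_y = sum(math.dist(x[i], y[j]) < d_true for j in range(n))
        # Same check in the opposite direction, anchored on y[i].
        closer_in_x = sum(math.dist(x[j], y[i]) < d_true for j in range(n))
        total += (closer_in_y + closer_in_x) / (2 * n)
    return total / n

# Toy 2-D embeddings (hypothetical values).
x = [(0.0, 0.0), (1.0, 0.0), (5.0, 5.0)]
y = [(0.1, 0.0), (0.9, 0.0), (5.0, 5.2)]

well_aligned = foscttm(x, y)
# Swapping the first two ATAC cells simulates a mismatched alignment.
misaligned = foscttm(x, [y[1], y[0], y[2]])
print(well_aligned, misaligned)
```

Lower values indicate that true partners are close to each other in the shared embedding. The double loop is quadratic in the number of cells, so this form is only suitable for small examples.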

Table 1: Benchmarking of Single-Cell Multi-omics Integration Methods

| Method | Underlying Principle | Key Advantage(s) | Reported Performance |
|---|---|---|---|
| GLUE [32] | Graph-linked unified embedding | Explicitly models regulatory interactions; highly accurate, robust, and scalable. | Highest overall score in benchmarking; lowest single-cell alignment error. |
| Seurat v3 [29] | Canonical Correlation Analysis (CCA) | Provides a framework for integrating different data types; output is an integrated matrix for downstream analysis. | Widely adopted; produces an integrated expression matrix usable by any GRN inference method. |
| Coupled NMF [29] | Coupled Matrix Factorization | Provides a framework for integrating different data types; assumes linear predictability. | Converges quickly in practice, but has no established convergence properties. |
| LinkedSOMs [29] | Self-Organizing Maps (SOM) | Provides a framework for integrating different data types. | SOMs may take a long time to converge. |

Detailed Protocol for GRN Inference via Multi-omics Integration

This protocol outlines the primary steps for inferring gene regulatory networks from unpaired scRNA-seq and scATAC-seq data using a graph-linked embedding approach, which performed strongly in the benchmarks summarized above.

Data Preprocessing and Feature Selection

- scRNA-seq Processing: Begin with standard processing of the scRNA-seq count matrix. This includes quality control (filtering cells by mitochondrial read percentage and unique gene counts), normalization, and identification of highly variable genes. Dimensionality reduction (e.g., PCA) is then performed.

- scATAC-seq Processing: Process the scATAC-seq fragment file or count matrix. Perform quality control based on metrics like transcription start site (TSS) enrichment and total fragments per cell. Call peaks using a method like MACS2 and create a cell-by-peak binary accessibility matrix. Term Frequency-Inverse Document Frequency (TF-IDF) normalization is commonly applied.

- Feature Selection: For scRNA-seq, retain the top highly variable genes. For scATAC-seq, retain the top accessible peaks, often filtering for those that occur in a minimum fraction of cells. These selected features form the basis for the subsequent integration.
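To make the TF-IDF step above concrete, here is a minimal sketch on a toy binary cell-by-peak matrix. Several TF-IDF variants are in use for scATAC-seq; this one (term frequency times log(1 + inverse document frequency)) is chosen only as an assumption for illustration, and the function name `tfidf` is hypothetical.

```python
import math

def tfidf(binary_matrix):
    """TF-IDF weighting of a cell-by-peak binary accessibility matrix.

    TF  = peak value divided by the number of accessible peaks in the cell.
    IDF = log(1 + n_cells / number of cells in which the peak is accessible).
    """
    n_cells = len(binary_matrix)
    n_peaks = len(binary_matrix[0])
    cells_per_peak = [sum(row[j] for row in binary_matrix) for j in range(n_peaks)]
    # Peaks never observed get an IDF of 0 to avoid division by zero.
    idf = [math.log(1 + n_cells / c) if c > 0 else 0.0 for c in cells_per_peak]

    weighted = []
    for row in binary_matrix:
        depth = sum(row)
        weighted.append(
            [(value / depth) * idf[j] if depth > 0 else 0.0
             for j, value in enumerate(row)]
        )
    return weighted

# Three cells by three peaks: peak 0 is open everywhere, peaks 1 and 2 are rare.
matrix = [
    [1, 1, 0],
    [1, 0, 0],
    [1, 0, 1],
]

weighted = tfidf(matrix)
for row in weighted:
    print([round(v, 3) for v in row])
```

In the first cell the rare peak receives a larger weight than the ubiquitous one, which is the intended effect: peaks open in every cell carry little information about cell identity.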

Construction of the Guidance Graph

The guidance graph formalizes prior knowledge of regulatory interactions and is a cornerstone of the GLUE methodology [32].

- Define Graph Vertices: Create two sets of vertices: one representing genes from the scRNA-seq data and another representing peaks from the scATAC-seq data.

- Define Graph Edges: Connect peaks to genes based on genomic proximity and other evidence. A standard schema is to link a peak to a gene if it overlaps the gene's promoter (e.g., ± 2 kb from the transcription start site) or is within the gene body. To increase biological accuracy, edges can be weighted or signed (e.g., positive for enhancer links, negative for repressive interactions like those from gene body DNA methylation) [32]. Motif information can also be incorporated to connect peaks containing a TF binding motif to the gene encoding that TF.
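The two steps above can be sketched as a small graph-building routine. This is an illustration under stated assumptions, not GLUE's own implementation: the coordinates and gene names are invented, every edge receives weight 1.0 and a positive sign, edges are stored in both directions, and the helper names are hypothetical. Specific tools may impose further requirements on the graph (for example self-loops), so their documentation should be consulted.

```python
def promoter_or_body(start, end, strand, flank=2000):
    """Interval covering the gene body plus +/- flank around the TSS."""
    tss = start if strand == "+" else end
    return min(start, tss - flank), max(end, tss + flank)

def build_guidance_graph(genes, peaks, flank=2000):
    """Return (vertices, edges) linking peaks to genes they overlap."""
    vertices = {name: "gene" for name in genes}
    vertices.update({name: "peak" for name in peaks})

    edges = {}
    for gene, (g_chrom, g_start, g_end, strand) in genes.items():
        lo, hi = promoter_or_body(g_start, g_end, strand, flank)
        for peak, (p_chrom, p_start, p_end) in peaks.items():
            # Half-open interval overlap on the same chromosome.
            if p_chrom == g_chrom and p_start < hi and p_end > lo:
                attrs = {"weight": 1.0, "sign": 1}
                edges[(peak, gene)] = attrs
                edges[(gene, peak)] = attrs  # keep the graph symmetric
    return vertices, edges

# Hypothetical annotations: (chromosome, start, end, strand) for genes,
# (chromosome, start, end) for peaks.
genes = {
    "GeneA": ("chr1", 3000, 6000, "+"),
    "GeneB": ("chr1", 18000, 21000, "-"),
}
peaks = {
    "chr1:900-1400": ("chr1", 900, 1400),
    "chr1:5000-5500": ("chr1", 5000, 5500),
    "chr1:20000-20500": ("chr1", 20000, 20500),
    "chr2:100-600": ("chr2", 100, 600),
}

vertices, edges = build_guidance_graph(genes, peaks)
for (source, target), attrs in sorted(edges.items()):
    print(source, "->", target, attrs)
```

Signed or distance-weighted edges would replace the constant `attrs` dictionary; the peak on chr2 stays in the vertex set but has no edges because it overlaps neither gene.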

Multi-omics Data Integration and Model Training

- Model Configuration: Configure the GLUE model (or a similar graph-based integration tool) using the preprocessed scRNA-seq and scATAC-seq data and the constructed guidance graph. Each omics layer is equipped with a separate variational autoencoder designed for its specific feature space.

- Iterative Alignment: Train the model using adversarial alignment. This iterative procedure aligns the cell embeddings from the different omics layers, guided by the feature embeddings derived from the guidance graph. The process converges when the model can no longer distinguish the omics layer of origin based on the cell embeddings, indicating successful integration [32].

- Batch Effect Correction: If batch effects are present within or between omics layers, include batch as a decoder covariate during model training to correct for these technical confounders [32].

Graphical Workflow for Multi-omics GRN Inference

Regulatory Inference and Network Analysis

- Refine Regulatory Interactions: Upon convergence, the guidance graph can be refined using the integrated data, enabling data-oriented regulatory inference. The model can prioritize regulatory links that are strongly supported by the coordinated patterns of accessibility and expression in the data.

- Define Regulatory Modules: Within the integrated low-dimensional space, identify clusters of cells representing distinct developmental states. For each state, extract the TF-peak-gene interactions that are most active, thereby defining context-specific GRNs.

- Validation: Experimentally validate key inferred regulatory interactions using techniques like Perturb-seq (CRISPR-based knockout combined with scRNA-seq) [29] or through functional assays in model systems.
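One simple way to see what "coordinated patterns of accessibility and expression" means in practice is to correlate each candidate peak's accessibility with its linked gene's expression across cell states and keep the strongest links. The sketch below uses a plain Pearson correlation filter; it is a swapped-in simplification, not the scoring procedure GLUE itself uses, and the threshold, profile values, and function names are assumptions made for the example.

```python
import math

def pearson(a, b):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant profile carries no co-variation signal
    return cov / math.sqrt(var_a * var_b)

def rank_links(candidate_links, accessibility, expression, min_corr=0.5):
    """Score peak-gene links and keep those at or above min_corr, best first."""
    scored = [
        (peak, gene, pearson(accessibility[peak], expression[gene]))
        for peak, gene in candidate_links
    ]
    kept = [link for link in scored if link[2] >= min_corr]
    return sorted(kept, key=lambda link: link[2], reverse=True)

# Hypothetical mean profiles across four cell states (e.g. clusters on a trajectory).
accessibility = {"peak1": [0, 1, 2, 3], "peak2": [3, 1, 2, 0]}
expression = {"GeneA": [1, 2, 3, 4]}
candidate_links = [("peak1", "GeneA"), ("peak2", "GeneA")]

ranked = rank_links(candidate_links, accessibility, expression)
print(ranked)
```

Here only the link whose accessibility rises together with expression survives the filter. A correlation-based ranking of this kind yields candidates for the experimental validation step rather than evidence of regulation on its own.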

The Scientist's Toolkit: Essential Reagents and Computational Tools

Table 2: Key Research Reagent Solutions for Single-Cell Multi-omics

| Item/Category | Function/Purpose | Examples / Notes |
|---|---|---|
| 10X Genomics Multiome Kit | Enables simultaneous scRNA-seq and scATAC-seq profiling from the same single cell. | Provides paired data from the same cell, simplifying integration but requiring specialized library preparation [32]. |
| SNARE-seq / SHARE-seq | Alternative methods for simultaneous profiling of the epigenome and transcriptome. | Used to generate gold-standard benchmarking datasets for integration algorithms [32]. |
| Perturb-seq | Combines CRISPR-mediated gene inactivation with scRNA-seq. | Essential for reverse genetics and functional validation of inferred GRNs by perturbing selected TFs [29]. |
| Cell Barcoding | Labels DNA/RNA molecules from single cells with unique barcodes to track cell-of-origin after pooling. | A crucial step in all high-throughput single-cell workflows (e.g., 10X Chromium) [31]. |
| Motif Databases | Collections of transcription factor binding motifs. | Used to connect accessible chromatin regions (from scATAC-seq) to potential regulating TFs (from scRNA-seq) [34]. |

Table 3: Essential Computational Tools and Packages

| Tool/Package | Primary Function | Application in Protocol |
|---|---|---|
| GLUE [32] | Unpaired multi-omics data integration and regulatory inference. | Core algorithm for integrating scRNA-seq and scATAC-seq data using a guidance graph (see "Construction of the Guidance Graph" and "Multi-omics Data Integration and Model Training"). |
| FigR [34] | Functional inference of gene regulation using single-cell multi-omics. | Used for linking TFs to target genes via dynamic open chromatin regions (OCRs) to map GRNs in a cell-type-specific manner. |
| Seurat [29] | A comprehensive toolkit for single-cell genomics. | Often used for preprocessing, analysis, and visualization of scRNA-seq data; includes some multi-omics integration functions. |
| Signac | An extension of Seurat for the analysis of single-cell epigenomic data. | Used for processing and analyzing scATAC-seq data, including peak calling, quantification, and motif analysis. |
| SCENIC [29] | GRN inference from scRNA-seq data. | Can be applied post-integration to the imputed or integrated expression matrix to infer GRNs and identify regulons. |

Concluding Remarks

The integration of scRNA-seq and scATAC-seq represents a paradigm shift in our ability to infer the context-specific gene regulatory networks that orchestrate development. By moving beyond correlative observations to mechanistic, multi-layered models, researchers can now pinpoint the key transcriptional regulators and cis-regulatory elements active in specific cell states along a developmental trajectory. The protocols and tools outlined here provide a robust framework for conducting such analyses. As the field progresses, the incorporation of additional omics layers, such as DNA methylation and proteomics, alongside spatial information, will further refine our understanding of the regulatory logic governing development and disease, opening new avenues for therapeutic intervention.

Gene Regulatory Networks (GRNs) are intricate biological systems that control gene expression and regulation in response to environmental and developmental cues [35]. Representing the complex web of interactions between transcription factors (TFs) and their target genes, GRNs encode the logical framework of cellular behavior, development, and pathological states [36]. The ultimate goal of gene network inference is to uncover the regulatory biology of a particular system, often as it relates to developmental processes or pathological phenotypes, enabling researchers to distill relatively simple insights from the immense complexity of biological systems [37].

Advancements in computational biology, coupled with high-throughput sequencing technologies, have significantly improved the accuracy of GRN inference and modeling [35]. Modern approaches increasingly leverage artificial intelligence (AI), particularly machine learning techniques (including supervised, unsupervised, semi-supervised, and contrastive learning), to analyze large-scale omics data and uncover regulatory gene interactions [35]. Machine learning provides a robust framework for addressing research questions with complex biological data, and its algorithms are now standard tools across the biological sciences [38]. These computational methodologies have become particularly crucial as new datasets emerge, existing datasets increase in size, and computational technologies improve [38].

Table 1: Key Categories of Machine Learning Methods for GRN Inference

| Method Category | Key Algorithms | Primary Applications in GRN Inference |
|---|---|---|
| Tree-Based Methods | GENIE3, GRNBoost2, Random Forests | Initial co-expression module identification, feature importance ranking |
| Deep Learning Architectures | DeepSEM, DAZZLE, EnsembleRegNet, CNN-LSTM hybrids | Modeling non-linear relationships, handling single-cell data sparsity |
| Hybrid Approaches | CNN + Machine Learning integrations | Combining feature learning capabilities with classification strength |
| Network Inference Frameworks | SCENIC, PIDC, ARACNE | Regulatory network reconstruction from expression data |

Evolution of Machine Learning Approaches for GRN Analysis

Traditional Machine Learning Foundations