Advanced Statistical Tests for Rare Variants: Mastering Mixed Models for Powerful Genetic Association Studies

Abstract

This article provides a comprehensive guide to advanced statistical methods for rare variant association studies, with a focus on mixed-effects models that control for complex sample relatedness and case-control imbalance. We explore the foundational principles of rare variant tests, detail cutting-edge methodologies like Meta-SAIGE for scalable meta-analysis, and address critical troubleshooting areas such as type I error inflation and effect size estimation bias. Through validation and comparative analysis, we benchmark the performance of different tests against real-world data from biobanks. This resource is tailored for researchers, scientists, and drug development professionals seeking to enhance the power and accuracy of their genomic discoveries.

The Landscape of Rare Variant Analysis: From Single-Variant Tests to Advanced Aggregation

Statistical Methodology FAQs

What are the main classes of rare variant association tests and when should I use them?

The two primary classes are burden tests and variance-component tests, each with distinct strengths and assumptions; combined tests draw on both.

- Burden Tests: These tests collapse rare variants within a region (e.g., a gene) into a single aggregate score or "burden" for each individual. They operate on the assumption that most rare variants in the set are causal and influence the trait in the same direction (e.g., all deleterious). They are powerful when this assumption holds true but can lose power substantially if both risk-increasing and protective variants are present in the same gene or if a large proportion of the variants are non-causal [1] [2].

- Variance-Component Tests (e.g., SKAT): Instead of collapsing, these tests model the variant effects as random, allowing for different magnitudes and directions of effect. The Sequence Kernel Association Test (SKAT) is a popular variance-component test that is robust to the presence of non-causal variants and mixed effect directions, making it powerful in scenarios of heterogeneity [1] [3] [2].

- Combined Tests (e.g., SKAT-O): This approach is a weighted combination of burden and SKAT tests, aiming to be robust across a wider range of scenarios. It adaptively selects the best weighting between the two strategies [1] [4].
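To make the contrast between the three test classes concrete, here is a minimal sketch of the test statistics computed from per-variant score statistics. The score values, unit weights, and function names are illustrative assumptions, not output from any real dataset or package.

```python
def burden_stat(scores, weights):
    """Burden statistic: square of the weighted sum of per-variant scores."""
    return sum(w * s for w, s in zip(weights, scores)) ** 2


def skat_stat(scores, weights):
    """SKAT statistic: sum of squared weighted per-variant scores."""
    return sum((w * s) ** 2 for w, s in zip(weights, scores))


def skato_stat(scores, weights, rho):
    """SKAT-O family: rho = 0 gives SKAT, rho = 1 gives the burden statistic."""
    return (1 - rho) * skat_stat(scores, weights) + rho * burden_stat(scores, weights)


# Hypothetical per-variant score statistics for one gene (illustrative values).
same_direction = [2.0, 1.5, 1.8, 2.2]
mixed_direction = [2.0, -1.5, 1.8, -2.2]
weights = [1.0, 1.0, 1.0, 1.0]

for label, scores in [("same", same_direction), ("mixed", mixed_direction)]:
    print(label, burden_stat(scores, weights), skat_stat(scores, weights))
```

With opposing signs the scores cancel inside the burden sum, while the SKAT statistic is unchanged because each score is squared first. The two statistics have different null distributions, so they are compared through their P values rather than their raw magnitudes.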

Table 1: Comparison of Primary Rare Variant Association Tests

| Test Type | Core Principle | Optimal Use Case | Key Advantage | Key Limitation |

|---|---|---|---|---|

| Burden Test [1] [2] | Collapses variants into a single burden score | High proportion of causal variants with homogeneous effects | High power when its directional assumption is met | Power loss with effect heterogeneity or non-causal variants |

| Variance-Component (SKAT) [1] [3] | Models variant effects as random from a distribution | Presence of non-causal variants or mixed effect directions | Robust to heterogeneity and mixed effect signs | Less powerful than burden tests under homogeneous effects |

| Combined (SKAT-O) [1] [4] | Optimally combines burden and SKAT | Unknown or mixed genetic architecture | Robust across diverse scenarios | Slightly less powerful than the "correct" pure test in clear scenarios |

How can hierarchical modeling improve my rare variant analysis?

Hierarchical modeling offers a powerful and flexible framework that can incorporate variant-level functional annotations to boost power and provide deeper biological insights. In this model, the effect of each variant is not estimated independently but is treated as a random variable drawn from a common distribution. The mean of this distribution can be modeled as a function of known variant characteristics (e.g., whether it is missense, nonsense, or resides in a specific functional domain), while the variance component accounts for residual heterogeneity not explained by these characteristics [1].

This approach provides a unified testing framework where you can simultaneously test:

- The Group Effect: Whether the variant characteristics (e.g., a specific functional impact) are associated with the phenotype.

- The Heterogeneity Effect: Whether there is significant residual variance in variant effects, indicating effects not captured by the included annotations [1].

This method not only enhances power by leveraging prior biological knowledge but also helps identify which aspects of variant functionality contribute to the association, moving beyond mere detection towards interpretation [1].
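One way to write the hierarchical model described above is sketched here. The notation is introduced for illustration and is an assumption of this sketch: $G_{ij}$ is the genotype of individual $i$ at variant $j$, $x_i$ the covariates, $z_j$ the annotation vector of variant $j$, $\pi$ the annotation (group) effects, and $\tau^2$ the residual heterogeneity variance.

```latex
\begin{aligned}
g\big(E[y_i]\big) &= x_i^\top \alpha + \sum_{j=1}^{m} G_{ij}\,\beta_j, \\
\beta_j &= z_j^\top \pi + \delta_j, \qquad \delta_j \sim (0,\ \tau^2), \\
H_0 &: \ \pi = 0 \ \ \text{and} \ \ \tau^2 = 0 .
\end{aligned}
```

Under this formulation the group effect corresponds to $\pi \neq 0$ and the heterogeneity effect to $\tau^2 > 0$, which is why the two can be tested jointly or separately.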

Experimental Design & Workflow Troubleshooting

What is a standard workflow for a rare variant association study?

A robust rare variant analysis involves a multi-step process from study design through to interpretation. The following workflow outlines the key stages and decision points.

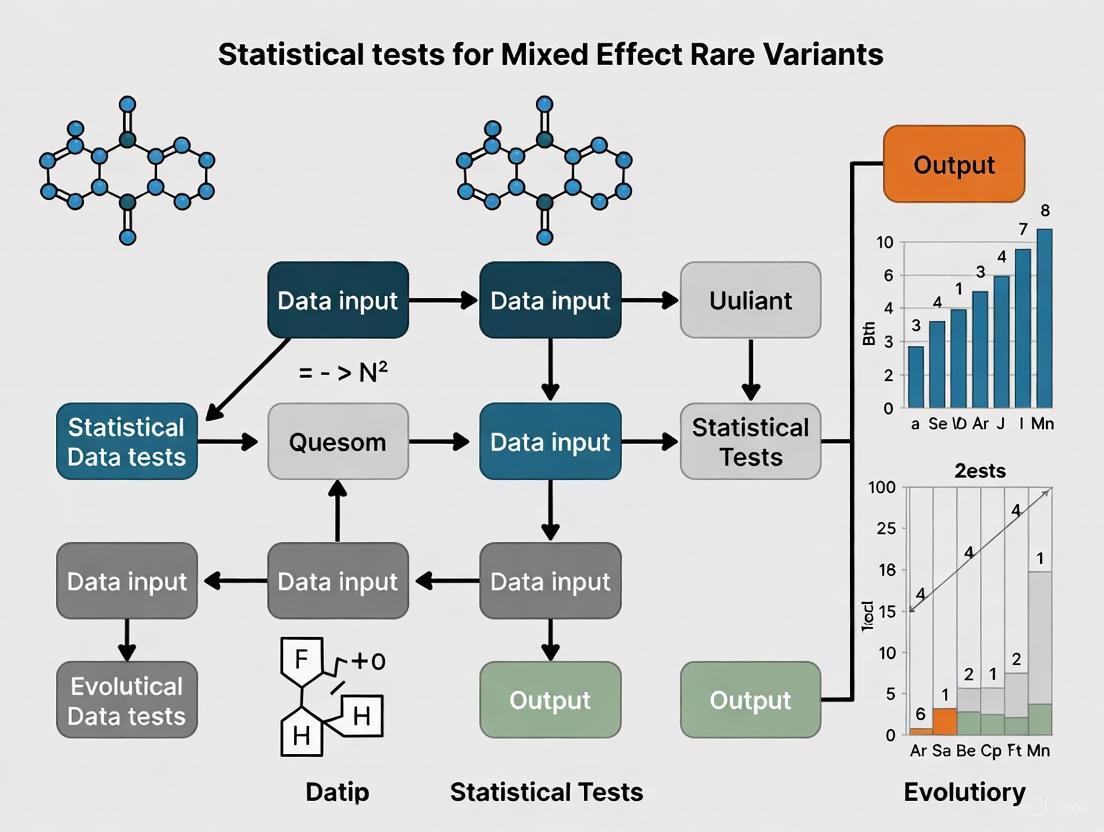

Figure 1: Rare Variant Analysis Workflow

How do I choose the right sequencing design for my study budget and goals?

The choice of sequencing strategy is a critical initial decision that balances cost, scope, and data quality. The table below summarizes the most common designs.

Table 2: Comparison of Sequencing Study Designs for Rare Variants

| Design | Key Advantage | Key Disadvantage | Ideal Use Case |

|---|---|---|---|

| High-depth WGS [5] | Identifies nearly all variants genome-wide with high confidence | Very expensive; generates massive data | Ultimate variant discovery; large, well-funded projects |

| Whole-Exome Sequencing (WES) [5] [2] | Focuses on protein-coding regions; cost-effective vs. WGS | Limited to exome; misses non-coding variants | Agnostically screening coding regions for disease links |

| Low-depth WGS [5] | Cost-effective for covering a larger sample size | Lower accuracy for rare variant calling; relies on imputation | Large-scale association mapping where sample size > depth |

| Targeted Sequencing [5] | Very cost-effective; ultra-deep coverage of specific regions | Limited to pre-specified genomic regions | Deep sequencing of candidate genes or pathways |

| Exome Chip (Array) [5] | Very cheap for large samples; pre-designed content | Limited to previously identified variants; poor for very rare variants | High-throughput genotyping in very large biobanks |

My analysis has insufficient power. What are my options?

Low power is a fundamental challenge in rare variant studies. Here are several strategies to address it:

- Increase Sample Size via Meta-Analysis: Combining summary statistics from multiple cohorts through meta-analysis is one of the most effective ways to boost power. Methods like Meta-SAIGE are specifically designed for this purpose, controlling for type I error even in low-prevalence traits and allowing the discovery of associations not significant in any single cohort [4].

- Utilize External Controls: In case-control studies, supplementing your control group with pre-existing, carefully matched sequencing data from public resources can increase sample size and power, though it requires rigorous handling of population stratification [2].

- Employ Extreme Phenotype Sampling: Enriching your study sample with individuals at the extreme ends of a phenotypic distribution (e.g., the most severe cases and the healthiest controls) can be a cost-effective way to increase the relative frequency of causal rare variants in your case group [5].

- Leverage Functional Annotations: Use prior biological knowledge to up-weight variants more likely to be functional (e.g., missense or loss-of-function variants) and down-weight or filter out others. This increases the signal-to-noise ratio in your variant set [1] [2] [6].

- Explore Advanced Methods: Consider newer, data-driven methods like DeepRVAT, which uses deep learning to learn a nonlinear variant aggregation function from functional annotations, potentially offering power gains over traditional methods with pre-specified weights [6].

Technical & Computational Troubleshooting

My rare variant meta-analysis shows inflated type I error. How can I fix this?

Type I error inflation is a known issue in rare variant meta-analysis, especially for binary traits with unbalanced case-control ratios. Standard meta-analysis methods can produce highly inflated type I error rates in this setting. The solution is to use methods that implement advanced statistical corrections:

- Use Saddlepoint Approximation (SPA): Methods like Meta-SAIGE apply a two-level SPA to accurately estimate the tails of the null distribution. This includes SPA on per-cohort score statistics and a genotype-count-based SPA on the combined meta-analysis statistics, which has been shown to effectively control type I error even for traits with prevalence as low as 1% [4].

- Collapse Ultra-Rare Variants: For variants with extremely low minor allele count (e.g., MAC < 10), collapsing them into a single group within a gene before testing can improve both type I error control and computational efficiency [4].
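The collapsing step can be sketched as follows. This is a simplified illustration, assuming genotypes are coded as minor-allele counts and that the collapsed pseudo-variant takes each individual's maximum count across the ultra-rare variants; the function name and the small threshold used in the example are hypothetical choices for this sketch.

```python
def collapse_ultra_rare(genotypes, mac_threshold=10):
    """Collapse variants with minor allele count below the threshold.

    genotypes: list of per-variant lists of minor-allele counts (0/1/2),
               one entry per individual.
    Returns (kept_variants, collapsed), where collapsed is one pseudo-variant
    holding each individual's maximum count across the ultra-rare variants,
    or None if no variant fell below the threshold.
    """
    kept, ultra_rare = [], []
    for variant in genotypes:
        (ultra_rare if sum(variant) < mac_threshold else kept).append(variant)
    if not ultra_rare:
        return kept, None
    collapsed = [max(column) for column in zip(*ultra_rare)]
    return kept, collapsed


# Toy data: 4 variants x 6 individuals, with a small threshold for readability.
genotypes = [
    [1, 0, 0, 0, 0, 0],  # MAC 1
    [0, 1, 0, 0, 0, 0],  # MAC 1
    [1, 1, 1, 0, 1, 0],  # MAC 4
    [0, 0, 0, 0, 0, 2],  # MAC 2
]
kept, collapsed = collapse_ultra_rare(genotypes, mac_threshold=3)
print(kept)
print(collapsed)
```

After collapsing, the gene is tested on the retained variants plus the single pseudo-variant, which reduces the number of near-empty columns entering the test.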

My gene-based test yielded a significant result, but I need to identify the driving variants. What's next?

A significant gene-based test is a starting point, not an endpoint. Follow-up should include:

- Inspect Single-Variant Associations: Examine the individual variant test statistics and p-values within the significant gene. While they may not survive multiple testing correction on their own, variants with consistently low p-values are strong candidates.

- Review Functional Annotation: Prioritize variants with high-impact predictions (e.g., nonsense, splice-site, missense with a high CADD score). The hierarchical modeling framework is particularly useful here as it can directly incorporate this information [1] [6].

- Replicate in an Independent Cohort: The strongest evidence for a specific variant is independent replication of its effect in a separate dataset.

- Conduct Functional Validation: In a laboratory setting, use techniques like genome editing (e.g., CRISPR) in model systems to directly test the phenotypic impact of the candidate variant.

The Scientist's Toolkit: Key Research Reagents & Solutions

Table 3: Essential Resources for Rare Variant Analysis

| Tool / Resource | Category | Primary Function | Example / Note |

|---|---|---|---|

| SAIGE/SAIGE-GENE+ [4] | Software | Association testing for binary traits & rare variants | Accounts for case-control imbalance & sample relatedness. |

| SKAT/SKAT-O [1] [3] [2] | Software | Variance-component & omnibus rare variant tests | Robust to mixed effect directions; widely used. |

| Meta-SAIGE [4] | Software | Rare variant meta-analysis | Controls type I error; reuses LD matrices for efficiency. |

| DeepRVAT [6] | Software | Deep learning-based burden score | Learns data-driven variant aggregation; models interactions. |

| ANNOVAR | Bioinformatics | Functional variant annotation | Critical for assigning consequence (missense, LoF, etc.). |

| UK Biobank [4] [6] | Data Resource | Large-scale cohort with WES/WGS & phenotypes | Provides massive sample sizes for powerful discovery. |

| All of Us [4] | Data Resource | Diverse cohort with genomic & health data | Enables meta-analysis and diverse population studies. |

| Beta Density Weights [1] [3] | Statistical Method | Weighting variants by MAF | Up-weights rarer variants (e.g., Beta(1,25) density). |

| Saddlepoint Approximation (SPA) [4] | Statistical Method | Accurate p-value calculation | Corrects for inflation in rare variant & binary trait tests. |
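As a small illustration of the Beta density weights listed in the table, the sketch below evaluates the Beta(1, 25) density at a few minor allele frequencies. The function name and the example MAF values are assumptions made for this example.

```python
import math


def beta_density_weight(maf, a=1.0, b=25.0):
    """Beta(a, b) density evaluated at the minor allele frequency."""
    norm = math.gamma(a + b) / (math.gamma(a) * math.gamma(b))
    return norm * maf ** (a - 1) * (1 - maf) ** (b - 1)


for maf in (0.0005, 0.005, 0.01, 0.05):
    print(f"MAF={maf:<7} weight={beta_density_weight(maf):.2f}")
```

With these parameters the weight decreases monotonically as MAF increases, so rarer variants contribute more to the aggregate statistic.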

FAQ: Core Concepts and Application

Q1: What is the fundamental difference between Burden tests and SKAT?

Burden tests and SKAT represent two different philosophical approaches to rare variant aggregation. Burden tests operate on the principle that multiple rare variants within a gene collectively impact a trait, assuming all variants have the same direction of effect (all harmful or all protective) and similar effect sizes. They collapse variant information into a single burden score for each individual, which is then tested for association. In contrast, SKAT (Sequence Kernel Association Test) is a variance-component test that models the effects of individual variants as random, allowing for both positive and negative effects within the same gene region. It aggregates score statistics across variants without requiring a consistent direction of effect, making it more robust when variants have bidirectional influences on the trait [7] [8].

Q2: When should I use SKAT-O over Burden or SKAT?

SKAT-O (Optimal SKAT) is a hybrid test that optimally combines the Burden test and SKAT to provide a robust approach regardless of the underlying genetic architecture. You should prefer SKAT-O when you lack prior knowledge about the proportion of causal variants in your gene set and their direction of effects. If the gene contains a high proportion of causal variants with effects in the same direction, SKAT-O will perform similarly to the Burden test. If the causal variants are sparse or have mixed effects, it will perform like SKAT. This adaptability makes SKAT-O a powerful default choice for gene-based association testing [7] [4].

Q3: How do I handle relatedness and case-control imbalance in these tests?

Sample relatedness and unbalanced case-control ratios are common challenges that can inflate Type I error if not properly addressed. SAIGE-GENE and similar advanced frameworks utilize Generalized Linear Mixed Models (GLMMs) to account for sample relatedness by incorporating a genetic relationship matrix (GRM). For case-control imbalance, particularly with low-prevalence binary traits, saddlepoint approximation (SPA) methods are employed to accurately calibrate P-values. For example, the Meta-SAIGE method applies a two-level SPA, including a genotype-count-based SPA for combined score statistics in meta-analysis, to effectively control Type I error rates even for traits with prevalence as low as 1% [4] [9].
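To show why a saddlepoint approximation matters under case-control imbalance, here is a simplified one-sided sketch for a score statistic with independent binary outcomes. It ignores relatedness and is not the SAIGE or Meta-SAIGE implementation; the function name and the toy numbers (50 carriers, 1% prevalence, 4 cases among carriers) are assumptions for illustration.

```python
import math
from statistics import NormalDist


def spa_upper_tail(g, mu, s):
    """Saddlepoint approximation to P(S >= s), with S = sum g_i * (Y_i - mu_i).

    g: genotype values, mu: fitted null case probabilities, s: observed score.
    Y_i are treated as independent Bernoulli(mu_i); s must lie above 0 and
    below the largest attainable score.
    """
    def k0(t):  # cumulant generating function of S
        return sum(math.log(1 - m + m * math.exp(gi * t)) - t * gi * m
                   for gi, m in zip(g, mu))

    def k1(t):  # first derivative of k0
        return sum(gi * m * math.exp(gi * t) / (1 - m + m * math.exp(gi * t)) - gi * m
                   for gi, m in zip(g, mu))

    def k2(t):  # second derivative of k0
        return sum(gi ** 2 * m * (1 - m) * math.exp(gi * t)
                   / (1 - m + m * math.exp(gi * t)) ** 2
                   for gi, m in zip(g, mu))

    # Solve k1(t) = s by bisection; k1 is increasing and k1(0) = 0 < s.
    lo, hi = 0.0, 1.0
    while k1(hi) < s:
        hi *= 2
    for _ in range(200):
        mid = (lo + hi) / 2
        if k1(mid) < s:
            lo = mid
        else:
            hi = mid
    t = (lo + hi) / 2

    w = math.sqrt(2 * (t * s - k0(t)))
    v = t * math.sqrt(k2(t))
    return NormalDist().cdf(-(w + math.log(v / w) / w))


# Toy setting: 50 carriers of a rare allele, 1% prevalence, 4 carriers are cases.
n, prevalence, cases_among_carriers = 50, 0.01, 4
g, mu = [1] * n, [prevalence] * n
s = cases_among_carriers - n * prevalence  # centered score statistic

p_spa = spa_upper_tail(g, mu, s)
p_normal = NormalDist().cdf(-s / math.sqrt(n * prevalence * (1 - prevalence)))
p_exact = sum(math.comb(n, k) * prevalence ** k * (1 - prevalence) ** (n - k)
              for k in range(cases_among_carriers, n + 1))
print(p_spa, p_normal, p_exact)
```

In this toy case the normal approximation understates the tail probability by several orders of magnitude relative to the exact binomial value, while the saddlepoint value stays within the same order of magnitude. That gap is the source of the inflation the text describes.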

Q4: What are the key considerations for preparing genetic data for aggregation tests?

Proper data preparation is crucial for valid results. Key steps include:

- Variant Filtering: Define appropriate minor allele frequency (MAF) cutoffs (e.g., <0.01 for rare variants) and consider functional annotations.

- Quality Control: Remove variants with differential missingness between cases and controls, as this can introduce bias.

- Annotation: Utilize functional predictions (e.g., CADD scores) to prioritize potentially deleterious variants.

- Gene/Mask Definition: Clearly define the genetic regions (genes, pathways) or variant masks (e.g., loss-of-function only) to be tested.

- Covariate Adjustment: Include relevant covariates such as principal components to account for population stratification [8] [9].
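The filtering and mask-definition steps above can be sketched as a small function. The record fields, consequence labels, variant IDs, gene names, and the differential-missingness cutoff of 0.02 are all hypothetical choices for this example rather than recommended values.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    variant_id: str
    gene: str
    maf: float
    consequence: str
    case_missing: float     # missing-genotype rate among cases
    control_missing: float  # missing-genotype rate among controls


def build_masks(variants, max_maf=0.01, consequences=("loss_of_function",),
                max_missing_diff=0.02):
    """Group variants passing MAF, annotation, and missingness filters by gene."""
    masks = {}
    for v in variants:
        if v.maf >= max_maf:
            continue  # not rare enough
        if v.consequence not in consequences:
            continue  # outside the requested functional mask
        if abs(v.case_missing - v.control_missing) > max_missing_diff:
            continue  # differential missingness between cases and controls
        masks.setdefault(v.gene, []).append(v.variant_id)
    return masks


variants = [
    Variant("v1", "GENE_A", 0.0004, "loss_of_function", 0.010, 0.012),
    Variant("v2", "GENE_A", 0.0300, "loss_of_function", 0.010, 0.010),  # too common
    Variant("v3", "GENE_A", 0.0010, "synonymous", 0.000, 0.000),        # wrong class
    Variant("v4", "GENE_B", 0.0020, "loss_of_function", 0.080, 0.010),  # biased QC
    Variant("v5", "GENE_B", 0.0008, "loss_of_function", 0.005, 0.004),
]
masks = build_masks(variants)
print(masks)
```

Running several masks (for example loss-of-function only, then loss-of-function plus missense) amounts to calling the function with different `consequences` and `max_maf` settings.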

Troubleshooting Common Experimental Issues

Problem: Inflated Type I Error in Binary Traits with Low Prevalence

- Symptoms: P-value distributions show inflation (genomic inflation factor λ > 1), leading to false positive associations.

- Solution: Implement methods with enhanced statistical calibration. Use SAIGE-GENE or Meta-SAIGE, which apply saddlepoint approximation (SPA) to accurately estimate the null distribution. For meta-analyses, ensure the method uses GC-based SPA (Genotype-Count SPA) to control error rates effectively [4].

Problem: Computational Bottlenecks in Large-Scale Biobank Analysis

- Symptoms: Analysis runs are prohibitively slow or fail due to memory constraints.

- Solution:

  - Utilize efficient LD matrix handling. Meta-SAIGE allows reuse of a single sparse LD matrix across all phenotypes, significantly reducing computational load.

  - Employ ultrarare variant collapsing (e.g., for MAC < 10) to reduce dimensionality without sacrificing power.

  - Leverage pre-computed genetic relationship matrices (GRMs) and null model fitting that can be reused for multiple gene tests [4] [9].

Problem: Interpretation of "Significance" for Genes with Mixed Effect Directions

- Symptoms: A gene shows a significant association with SKAT-O, but individual variant effects appear to go in both directions.

- Solution: This is an expected scenario where SKAT-O excels. The significance indicates that the collective set of variants in the gene is associated with the trait, even without uniform effect direction. Investigate further by examining variant-level functional annotations and consulting databases of known gene function to understand potential mechanisms [8].

Statistical Test Comparison Tables

Table 1: Comparison of Core Aggregate Test Methods

| Feature | Burden Test | SKAT | SKAT-O |

|---|---|---|---|

| Core Assumption | All variants have same effect direction | Variant effects can be bidirectional | Adapts to the underlying architecture |

| Effect Modeling | Fixed effects model | Random effects model | Combined fixed and random effects |

| Power Strength | High when most variants are causal with same effect direction | High when variants have mixed or bidirectional effects | Robust across various scenarios |

| Key Limitation | Power loss with non-causal variants or mixed effects | May lose power when all effects are in same direction | Computationally more intensive than individual tests |

| Handles Relatedness | Yes (via SAIGE-GENE, REGENIE) | Yes (via SAIGE-GENE) | Yes (via SAIGE-GENE) [7] [4] [9] |

Table 2: Software Implementation and Data Requirements

| Tool / Package | Implemented Tests | Handles Relatedness? | Handles Case-Control Imbalance? | Key Application Context |

|---|---|---|---|---|

| SKAT R Package | Burden, SKAT, SKAT-O | Yes (via kinship matrix) | Yes (for binary traits) | General rare variant association studies [7] |

| SAIGE-GENE | SKAT-O, Burden, SKAT | Yes (via GRM) | Yes (uses SPA) | Large biobanks with related individuals [4] [9] |

| REGENIE | Burden test | Yes | Yes | Genome-wide analyses in large cohorts [9] |

| Meta-SAIGE | Burden, SKAT, SKAT-O | Yes (summary-level) | Yes (SPA-GC adjustment) | Cross-cohort rare variant meta-analysis [4] |

| Rvtests (in AVT) | Fisher's test (collapsing) | No (requires unrelated samples) | Yes | Coherent ancestral backgrounds, quick collapsing [9] |

Methodologies and Workflows

Experimental Protocol: Gene-Based Association Analysis Using SAIGE-GENE

The following workflow is adapted from the Aggregate Variant Testing (AVT) pipeline and SAIGE-GENE documentation [9]:

Input Preparation:

- Genotype Data: Convert to BGEN format and index files.

- Phenotype and Covariate Files: Prepare files in tab-delimited format.

- Genetic Relationship Matrix (GRM): Calculate GRM from high-quality, common autosomal SNPs to account for population structure and relatedness.
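For intuition about what the GRM contains, here is a minimal sketch that computes it from standardized allele counts. Real pipelines use many thousands of pruned common SNPs and optimized or sparse implementations; the function name and toy genotypes below are assumptions for illustration.

```python
def genetic_relationship_matrix(genotypes):
    """genotypes[i][j]: allele count (0/1/2) of individual i at SNP j."""
    n = len(genotypes)
    standardized = [[] for _ in range(n)]
    for snp in zip(*genotypes):
        p = sum(snp) / (2 * n)  # sample allele frequency
        if p <= 0 or p >= 1:
            continue            # monomorphic SNPs carry no information
        scale = (2 * p * (1 - p)) ** 0.5
        for i, count in enumerate(snp):
            standardized[i].append((count - 2 * p) / scale)
    m = len(standardized[0])
    if m == 0:
        raise ValueError("no polymorphic SNPs available")
    # Entry (i, k) is the average product of standardized genotypes.
    return [[sum(a * b for a, b in zip(standardized[i], standardized[k])) / m
             for k in range(n)] for i in range(n)]


# Toy data: individuals 0 and 1 carry identical genotypes.
genotypes = [
    [0, 1, 2, 0],
    [0, 1, 2, 0],
    [2, 1, 0, 1],
    [1, 0, 1, 0],
]
grm = genetic_relationship_matrix(genotypes)
for row in grm:
    print([round(x, 2) for x in row])
```

Identical individuals share an off-diagonal entry equal to their diagonal entries, while genetically dissimilar pairs receive values near or below zero.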

Null Model Fitting:

- Run `SAIGE_FIT_NULLGLMM` to fit the null generalized linear mixed model (GLMM) including covariates (e.g., age, sex, principal components). This step does not use the gene-based grouping and is performed once per phenotype. The resulting null model is used in the subsequent association testing.

Variant Annotation and Grouping:

- Annotation: Use tools like VEP or ANNOVAR to functionally annotate all variants.

- Group File Creation: Define groups of variants for testing (e.g., by gene). The `CREATE_GROUP_FILES` step generates files specifying which variants belong to which gene, potentially incorporating functional filters (e.g., MAF < 0.01, missense only).

Association Testing:

- Execute `SAIGE_RUN_SPA_TESTS` for each gene/region. This step performs:

  - Burden Test: Aggregates qualified variants into a single score.

  - SKAT: Tests for variance components across variants.

  - SKAT-O: Calculates the optimal linear combination of Burden and SKAT statistics.

Result Aggregation and Multiple Testing Correction:

- Aggregate summary statistics (`SAIGE_AGGREGATE_SUMMARY_STATISTICS`) across all tested genes.

- Apply an exome-wide significance threshold (e.g., P < 2.5 × 10⁻⁶) to account for multiple testing.
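The exome-wide threshold quoted in this step is consistent with a Bonferroni correction over roughly 20,000 genes. The sketch below shows that arithmetic; the gene count, gene names, and P values are hypothetical and serve only to illustrate the filter.

```python
def bonferroni_threshold(n_tests, family_alpha=0.05):
    """Per-test significance threshold controlling the family-wise error rate."""
    return family_alpha / n_tests


n_genes = 20_000  # assumed approximate number of genes tested
threshold = bonferroni_threshold(n_genes)
print(f"{threshold:.1e}")

# Hypothetical gene-level P values from the aggregated results.
results = {"GENE_A": 3.1e-8, "GENE_B": 4.0e-5, "GENE_C": 0.27}
significant = [gene for gene, p in results.items() if p < threshold]
print(significant)
```

If several masks or MAF cutoffs are tested per gene, the number of tests grows accordingly unless the per-gene P values are first combined into one.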

SAIGE-GENE Analysis Workflow

Meta-Analysis Protocol for Rare Variants with Meta-SAIGE

For combining results across multiple cohorts, Meta-SAIGE provides a scalable approach [4]:

Per-Cohort Summary Statistics:

- Each participating cohort runs SAIGE to generate per-variant score statistics (S), their variances, and accurate P-values (using SPA for binary traits). Each cohort also calculates a sparse LD matrix (Ω) for the genetic regions tested.

Summary Statistics Consolidation:

- Score statistics from all cohorts are combined into a single superset. For binary traits, the variance of each score statistic is recalculated by inverting the SPA-corrected P-value to improve error control.

Gene-Based Meta-Analysis:

- Meta-SAIGE performs Burden, SKAT, and SKAT-O tests on the combined summary statistics, using the combined covariance matrix. It can handle different functional annotations and MAF cutoffs.

P-Value Combination:

- The Cauchy combination method is applied to combine P-values from tests with different functional annotations and MAF cutoffs for each gene, producing a final meta-analysis P-value.
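The Cauchy combination step can be sketched in a few lines. This is a minimal version for P values strictly between 0 and 1; the function name and example values are assumptions for illustration, and production code typically handles extremely small P values with a separate tail approximation.

```python
import math


def cauchy_combination(pvalues, weights=None):
    """Combine P values by averaging their Cauchy-transformed values."""
    if weights is None:
        weights = [1.0] * len(pvalues)
    total = sum(weights)
    # Each P value maps to a standard Cauchy variate under the null.
    stat = sum(w / total * math.tan((0.5 - p) * math.pi)
               for w, p in zip(weights, pvalues))
    # Convert the averaged statistic back to a P value.
    return 0.5 - math.atan(stat) / math.pi


# Hypothetical P values for one gene across three annotation/MAF masks.
mask_pvalues = [1e-4, 0.5, 0.8]
combined = cauchy_combination(mask_pvalues)
print(combined)
```

Because the transform is monotone and the statistic is a weighted average, the combined P value always lies between the smallest and largest input, and it is dominated by the smallest ones.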

Rare Variant Meta-Analysis Workflow

Table 3: Key Software and Data Resources for Aggregate Testing

| Resource Name | Type | Primary Function | Application Note |

|---|---|---|---|

| SKAT R Package [7] | Software | Performs Burden, SKAT, and SKAT-O tests. | Core tool for general rare variant association studies; allows kinship adjustment. |

| SAIGE / SAIGE-GENE [4] [9] | Software | Scalable implementation of mixed model-based tests. | Essential for large biobanks with related individuals and unbalanced case-control ratios. |

| Meta-SAIGE [4] | Software | Rare variant meta-analysis method. | Extends SAIGE for cross-cohort analysis; reuses LD matrices for computational efficiency. |

| Genetic Relationship Matrix (GRM) [4] [9] | Data/Matrix | Quantifies genetic relatedness between samples. | Defines the random-effect covariance in mixed models, which controls for population stratification and relatedness. |

| Variant Functional Annotations [8] [9] | Data | Predicts functional impact of variants (e.g., CADD, LOFTEE). | Used to create biologically informed variant masks for burden tests. |

| Pre-computed LD Matrices [4] | Data/Matrix | Stores linkage disequilibrium (correlation) between variants. | Used by meta-analysis tools like Meta-SAIGE to avoid re-computation for each phenotype. |

Frequently Asked Questions

1. What is the primary purpose of aggregating rare variants in genetic association studies? Because rare variants have extremely low frequencies, aggregating them into a predefined set (e.g., a gene or pathway) is necessary to achieve adequate statistical power for detecting associations with phenotypes. Single-variant tests are typically underpowered for rare variants because very few individuals carry the variant alleles [1].

2. What are the main types of tests for aggregated rare variants, and when should I use each? The two principal approaches are burden tests and variance component tests (like SKAT). The choice depends on the underlying genetic architecture:

- Burden Tests: Use these when a large proportion of the rare variants in your set are causal and their effects are predominantly in the same direction (e.g., all deleterious). They collapse variants into a single score and are powerful when this assumption holds [1] [4].

- Variance Component Tests (e.g., SKAT): Use these when there is heterogeneity in the variant effects, such as when only a small subset of variants is causal, or when both risk-increasing and protective variants are present in the same set. These tests are typically more powerful than burden tests in these scenarios [1] [4].

3. My meta-analysis of binary traits with low prevalence shows inflated type I error. What is the cause and solution? Inflation of type I error is a known challenge in meta-analysis of rare variants for low-prevalence binary traits, often due to case-control imbalance. Traditional methods can have markedly inflated error rates. A solution is to use methods that employ saddlepoint approximations (SPA), such as Meta-SAIGE, which applies a two-level SPA (including a genotype-count-based SPA for combined statistics) to accurately estimate the null distribution and effectively control type I error [4].

4. Are there methods that combine the advantages of both burden and variance component tests? Yes, unified or hybrid methods have been developed. For example, the SKAT-O test is a weighted linear combination of a burden test and the SKAT variance component test. Furthermore, hierarchical model-based tests jointly evaluate group-level effects (similar to burden tests) and variant-specific heterogeneity effects (similar to SKAT), providing a robust test across a wider range of scenarios [1].

5. How can I improve computational efficiency in a phenome-wide rare variant meta-analysis? A significant computational bottleneck is the handling of linkage disequilibrium (LD) matrices. To boost efficiency, you can use methods that reuse a single, sparse LD matrix across all phenotypes. This strategy avoids the need to construct separate, phenotype-specific LD matrices for each trait, drastically reducing computational load and storage requirements [4].

Troubleshooting Guides

Problem: Low Statistical Power in Rare Variant Association Analysis

Issue: Your analysis fails to identify significant associations, potentially due to a suboptimal choice of test for your dataset's genetic architecture.

Investigation and Resolution Protocol:

Diagnose the Genetic Architecture:

- Action: Prior to selecting an aggregation test, conduct exploratory analyses.

- Methodology: Examine the distribution and directions of effect sizes for individual rare variants within your set (if calculable). Check for the presence of both positive and negative effects, and estimate the proportion of variants that appear to be causal.

- Expected Outcome: This step informs whether your variant set is more suited to a burden test (high proportion of causal variants with homogeneous effects) or a variance component test like SKAT (heterogeneous effects) [1].

Select and Execute an Appropriate Test:

- Action: Based on your diagnosis, apply the most powerful test.

- Methodology:

  - If variants have homogeneous effects, apply a burden test (e.g., a weighted count of minor alleles) [1].

  - If variants have heterogeneous effects, apply a variance component test like SKAT [1] [4].

  - If the architecture is unknown, use a robust hybrid method such as SKAT-O or a hierarchical model-based test that combines both approaches [1] [4].

Validate and Meta-Analyze:

- Action: If power remains low in a single cohort, consider a meta-analysis.

- Methodology: Use a meta-analysis method like Meta-SAIGE that combines summary statistics from multiple cohorts. This method controls type I error for binary traits and has power comparable to analyzing pooled individual-level data, thus enhancing discovery potential [4].

The following workflow outlines this diagnostic and resolution process:

Problem: Inflated Type I Error in Meta-Analysis of Binary Traits

Issue: When meta-analyzing rare variant associations for a binary trait with low prevalence (e.g., 1%), the quantile-quantile plot shows genomic control lambda (λ) > 1, indicating inflation of test statistics and false positives.

Investigation and Resolution Protocol:

Confirm Case-Control Imbalance:

- Action: Calculate the case-control ratio in each cohort included in the meta-analysis.

- Methodology: For a disease with 1% prevalence, a cohort of 100,000 individuals will have only ~1,000 cases. This severe imbalance is a primary cause of inflation in score-based tests [4].

Apply Saddlepoint Approximation (SPA):

- Action: Ensure that the per-cohort summary statistics were calculated using methods that control for imbalance.

- Methodology: Use software like SAIGE that employs SPA to compute accurate per-variant P values, correcting for the skewness in the distribution of test statistics caused by case-control imbalance [4].

Implement Genotype-Count (GC)-based SPA in Meta-Analysis:

- Action: Use a meta-analysis method that applies a second layer of correction.

- Methodology: Employ Meta-SAIGE, which uses a GC-based SPA when combining score statistics from multiple cohorts. Simulations show this two-level SPA (per-cohort and meta-analysis) effectively controls type I error rates, even for traits with 1% prevalence [4].

The following table summarizes the quantitative evidence from simulations comparing meta-analysis methods:

Table 1: Empirical Type I Error Rates for Binary Traits (α = 2.5×10⁻⁶) [4]

| Method | Prevalence | Cohort Size Ratio | Type I Error Rate | Inflation Factor |

|---|---|---|---|---|

| No Adjustment | 1% | 1:1:1 | 2.12 × 10⁻⁴ | ~85x |

| SPA Adjustment Only | 1% | 1:1:1 | 5.20 × 10⁻⁶ | ~2x |

| Meta-SAIGE (SPA+GC) | 1% | 1:1:1 | 2.70 × 10⁻⁶ | ~1.1x |

| No Adjustment | 5% | 4:3:2 | 1.21 × 10⁻⁴ | ~48x |

| Meta-SAIGE (SPA+GC) | 5% | 4:3:2 | 2.90 × 10⁻⁶ | ~1.2x |
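The inflation factors in the table are simply the empirical type I error rate divided by the nominal α. The short sketch below reproduces that arithmetic from the tabulated rates; the variable names are chosen for this example.

```python
alpha = 2.5e-6

# Empirical type I error rates copied from the table above.
empirical = {
    ("No Adjustment", "1%"): 2.12e-4,
    ("SPA Adjustment Only", "1%"): 5.20e-6,
    ("Meta-SAIGE (SPA+GC)", "1%"): 2.70e-6,
    ("No Adjustment", "5%"): 1.21e-4,
    ("Meta-SAIGE (SPA+GC)", "5%"): 2.90e-6,
}

inflation = {key: rate / alpha for key, rate in empirical.items()}
for (method, prevalence), factor in inflation.items():
    print(f"{method:<22} {prevalence:>3}  {factor:5.1f}x")
```

A factor close to 1 means the empirical rate matches the nominal level.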

Experimental Protocols for Key Analyses

Protocol 1: Conducting a Rare Variant Meta-Analysis with Meta-SAIGE

Objective: To perform a scalable and accurate gene-based rare variant meta-analysis across multiple cohorts for multiple phenotypes, controlling for type I error.

Materials and Software:

- Cohort Datasets: K independent cohorts with individual-level genotype (e.g., WES) and phenotype data.

- Software: Meta-SAIGE.

- Computing Resources: High-performance computing cluster recommended for large datasets.

Procedure:

- Per-Cohort Preparation: Run SAIGE on each cohort to obtain per-variant score statistics (S), their variances, and association P values. Simultaneously, generate a sparse LD matrix (Ω) for the genetic regions of interest. This LD matrix is not phenotype-specific and can be reused [4].

- Combine Summary Statistics: Consolidate the per-variant score statistics from all K cohorts into a single superset. For binary traits, recalculate the variance of each score statistic by inverting the SPA-adjusted P value from the per-cohort step. Apply the genotype-count-based SPA to the combined statistics to ensure proper type I error control [4].

- Gene-Based Testing: With the combined statistics and covariance matrix, perform gene-based rare variant tests (Burden, SKAT, SKAT-O). Collapse ultrarare variants (MAC < 10) to improve error control and power. Combine P values from different functional annotations and MAF cutoffs using the Cauchy combination method [4].
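The variance recalculation in the "Combine Summary Statistics" step can be illustrated for a single variant. This sketch assumes a two-sided P value and a normal reference distribution, and it omits the genotype-count-based SPA applied afterwards. The function names and toy numbers are assumptions, not the Meta-SAIGE interface.

```python
from statistics import NormalDist


def variance_from_pvalue(score, pvalue):
    """Variance that reproduces a two-sided P value under a normal reference.

    Requires a nonzero score and 0 < pvalue < 1.
    """
    z = -NormalDist().inv_cdf(pvalue / 2)  # |z| implied by the P value
    return (score / z) ** 2


def combine_cohorts(scores, pvalues):
    """Sum per-cohort scores and P-value-implied variances for one variant."""
    variances = [variance_from_pvalue(s, p) for s, p in zip(scores, pvalues)]
    s_meta, v_meta = sum(scores), sum(variances)
    z_meta = s_meta / v_meta ** 0.5
    p_meta = 2 * NormalDist().cdf(-abs(z_meta))
    return s_meta, v_meta, p_meta


# Two hypothetical cohorts with scores 3.0 and 2.0 and |z| of 1.5 and 2.0.
scores = [3.0, 2.0]
pvalues = [2 * NormalDist().cdf(-z) for z in (1.5, 2.0)]
s_meta, v_meta, p_meta = combine_cohorts(scores, pvalues)
print(s_meta, v_meta, p_meta)
```

Using the P-value-implied variance rather than the model-based one carries the per-cohort SPA correction into the combined statistic, which is the motivation given for this step.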

Protocol 2: Power Simulation for Testing Method Selection

Objective: To compare the statistical power of different rare variant tests (Burden, SKAT, Hybrid) under a specific genetic model before analyzing real data.

Materials and Software:

- Genotype Data: Real genotype data from a reference panel (e.g., UK Biobank).

- Simulation Software: Custom R or Python scripts, or built-in functions in tools like SAIGE/SAIGE-GENE+.

- Genetic Model Parameters: Define the proportion of causal variants, their effect sizes, and the direction of effects (all deleterious vs. mixed).

Procedure:

- Generate Null Phenotypes: Simulate phenotype data under the null hypothesis (no genetic effect) to confirm type I error is controlled.

- Generate Alternative Phenotypes: Simulate phenotype data under the alternative hypothesis, imposing your chosen genetic model onto the real genotype data.

- Apply Multiple Tests: Run the burden test, SKAT, and a hybrid method (e.g., SKAT-O, hierarchical model) on the simulated data.

- Calculate Power: For each test, calculate power as the proportion of simulation replicates in which the P value falls below the significance threshold (e.g., α = 2.5 × 10⁻⁶). The test with the highest power under your model is the best choice [1] [4].
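The final power calculation can be sketched as below, with a binomial standard error added so that differences between tests can be judged against simulation noise. The function name and replicate P values are hypothetical.

```python
import math


def empirical_power(pvalues, alpha=2.5e-6):
    """Share of replicates with P below alpha, plus its binomial standard error."""
    n = len(pvalues)
    power = sum(p < alpha for p in pvalues) / n
    se = math.sqrt(power * (1 - power) / n)
    return power, se


# Hypothetical P values from four simulation replicates of one test.
replicate_pvalues = [1e-7, 1e-3, 1e-8, 0.2]
power, se = empirical_power(replicate_pvalues)
print(power, se)
```

In practice thousands of replicates are needed for the standard error to be small enough to separate tests with similar power.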

Table 2: Statistical Power Comparison of Meta-Analysis Methods (Simulation Data) [4]

| Scenario (Effect Size) | Joint Analysis with SAIGE-GENE+ | Meta-SAIGE | Weighted Fisher's Method |

|---|---|---|---|

| Scenario A (Small) | 0.30 | 0.29 | 0.11 |

| Scenario B (Medium) | 0.65 | 0.64 | 0.28 |

| Scenario C (Large) | 0.90 | 0.90 | 0.52 |

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Software and Statistical Tools for Rare Variant Aggregation Analysis

| Tool / Reagent | Function / Purpose | Key Application Note |

|---|---|---|

| Burden Test | Aggregates rare variants into a single score. | Most powerful when a large proportion of variants are causal with effects in the same direction [1]. |

| SKAT | A variance component test for heterogeneous variant effects. | Preferred when effects are mixed or only a small subset of variants is causal [1] [4]. |

| SKAT-O | An optimized hybrid of burden and SKAT tests. | A robust default choice when the genetic architecture is unknown [1] [4]. |

| SAIGE-GENE+ | Software for gene-based rare variant tests using individual-level data. | Adjusts for sample relatedness and case-control imbalance using SPA [4]. |

| Meta-SAIGE | Software for rare variant meta-analysis. | Controls type I error for low-prevalence traits and reuses LD matrices for computational efficiency [4]. |

| Hierarchical Models | Models variant effects as a function of characteristics and residual heterogeneity. | Provides a unified testing framework that can identify the source of association [1]. |

The Role of Functional Annotation and Masking in Refining Variant Selection

Frequently Asked Questions (FAQs)

Q1: What is the fundamental difference between variant calling and functional annotation?

Variant calling identifies genetic variants from sequencing data, producing an unannotated file (typically in Variant Call Format, VCF) containing raw variant positions and allele changes. In contrast, functional annotation predicts the potential impact of these variants on protein structure, gene expression, cellular functions, and biological processes. This critical step translates sequencing data into meaningful biological insights by mapping variants to genomic features using tools like Ensembl Variant Effect Predictor (VEP) and ANNOVAR [10].

Q2: Why is functional annotation particularly challenging for non-coding variants, and what resources can help?

The majority of human genetic variation resides in non-protein-coding regions, making functional interpretation difficult because these regions lack the clear amino acid impact framework of coding variants. However, non-coding regions contain critical regulatory elements including promoters, enhancers, transcription factor binding sites, non-coding RNAs, and transposable elements [10]. Advanced resources now leverage WGS and GWAS-based analyses to annotate these regions, with regulatory element databases and techniques like Hi-C providing insights into three-dimensional genome organization and long-range interactions [10].

Q3: How dramatically can different masking strategies affect association study results?

Masking strategies vary widely, and so do their results. A systematic review of 234 studies catalogued 664 masks, with 78.2% of masks and 92.2% of masking strategies used in only one publication [11]. In an analysis of 54 traits in 189,947 UK Biobank exomes, the number of significant associations ranged from 58 to 2,523 depending solely on the masking strategy employed [11]. Three high-profile studies analyzing the same UK Biobank exome dataset reported minimally overlapping associations (<30% shared findings) because of different masking approaches [11].

Q4: What are the key statistical considerations when choosing between single-variant and aggregation tests for rare variants?

The choice depends heavily on the underlying genetic architecture. Aggregation tests (like burden tests and SKAT) are more powerful than single-variant tests only when a substantial proportion of variants are causal [12]. Analytical calculations and simulations illustrate this with one scenario: PTVs, deleterious missense variants, and other missense variants have 80%, 50%, and 1% probabilities of being causal, respectively, with n = 100,000 and a heritability of 0.1%. In that scenario, aggregating all rare protein-truncating variants (PTVs) and deleterious missense variants makes aggregation tests more powerful than single-variant tests for >55% of genes [12]. Performance depends strongly on the specific genetic model and on the set of rare variants aggregated.

Q5: How can researchers address type I error inflation in rare variant meta-analyses for binary traits with case-control imbalance?

Meta-SAIGE addresses this challenge through a two-level saddlepoint approximation (SPA). This includes SPA on score statistics of each cohort and a genotype-count-based SPA for combined score statistics from multiple cohorts [4]. This approach effectively controls type I error rates even for low-prevalence binary traits (tested at 1% and 5% prevalence), whereas methods without proper adjustment can exhibit type I error rates nearly 100 times higher than the nominal level [4].

Troubleshooting Guides

Problem: Inconsistent Results Across Masking Strategies

Symptoms: Different masking strategies applied to the same dataset yield minimally overlapping significant associations.

Solution:

- Systematic Mask Evaluation: Empirically test multiple mask combinations to identify strategies that maximize significant associations for your specific trait type. Research indicates that strategies optimizing for low-frequency and rare variant detection can identify twice as many significant associations as "average" strategies [11].

- Standardized Mask Definitions: Implement harmonized mask definitions using Variant Effect Predictor (VEP) and dbNSFP to ensure consistency across analyses [11].

- Functional Annotation Integration: Prioritize masks that incorporate multiple functional annotation sources rather than relying on single annotations.

Table: Performance Comparison of Mask Categories

| Mask Category | Key Characteristics | Number of Significant Associations Range | Recommended Use Cases |

|---|---|---|---|

| pLoF-only | Protein-truncating variants only | Moderate | Initial screening for high-impact variants |

| pLoF + damaging missense | Combines pLoF with predicted damaging missense | High (up to 2,706 associations) | Comprehensive gene disruption studies |

| Rare variant masks (MAF < 0.1%) | Focuses on low-frequency variants | Variable | Population-specific association studies |

| Common variant masks (MAF > 1%) | Includes more frequent variants | High (but may tag causal variants via LD) | Initial discovery phases |

Problem: Suboptimal Variant Calling in Non-Human Primate Genomes

Symptoms: Increased false positive variant calls due to limited genomic resources and incomplete alignment postprocessing.

Solution:

- Implement Refinement Models: Apply decision tree-based refinement models (like the Genome Variant Refinement Pipeline - GVRP) that integrate alignment quality metrics and DeepVariant confidence scores [13].

- Alignment Quality Metrics: Monitor specific alignment metrics including read depth, soft clipping ratio, and low mapping quality read ratio to identify potential false positives [13].

- Machine Learning Filtering: Utilize Light Gradient Boosting Machine (LightGBM) approaches to filter false positive variants, which have demonstrated a 76.20% reduction in the miscalling ratio in rhesus macaque genomes [13].


Problem: Computational Challenges in Rare Variant Meta-Analysis

Symptoms: Excessive computational time and memory usage when performing phenome-wide rare variant meta-analyses.

Solution:

- LD Matrix Reuse: Implement Meta-SAIGE's approach of using a single sparse linkage disequilibrium (LD) matrix across all phenotypes rather than constructing phenotype-specific LD matrices [4].

- Storage Optimization: Leverage efficient storage formats requiring O(MFK + MKP) storage instead of O(MFKP + MKP), where M is the number of variants, F the average number of variants with nonzero cross-products, K the number of cohorts, and P the number of phenotypes [4].

- Ultra-rare Variant Collapsing: Identify and collapse ultra-rare variants (MAC < 10) to enhance type I error control while reducing computational burden [4].
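To make the collapsing step concrete, here is a small sketch, assuming a genotype matrix with individuals in rows and variants in columns coded as minor-allele counts. The collapsing rule used here (a single carrier indicator for "has at least one ultra-rare allele") is an assumption for illustration; the source only states that variants with MAC < 10 are collapsed, and `collapse_ultra_rare` is a hypothetical helper name.

```python
import numpy as np


def collapse_ultra_rare(G, mac_threshold=10):
    """Replace all variants with MAC < mac_threshold by one pseudo-variant.

    G: (n_individuals, n_variants) array of minor-allele counts (0, 1, 2).
    Returns the retained variants followed by one carrier-indicator column.
    """
    G = np.asarray(G)
    mac = G.sum(axis=0)                # minor allele count per variant
    ultra_rare = mac < mac_threshold
    kept = G[:, ~ultra_rare]
    if not ultra_rare.any():
        return kept
    # Assumed rule: 1 if the individual carries any ultra-rare allele, else 0.
    carrier = (G[:, ultra_rare].sum(axis=1) > 0).astype(G.dtype)
    return np.column_stack([kept, carrier])


if __name__ == "__main__":
    G = np.zeros((100, 3), dtype=int)
    G[:50, 0] = 1      # MAC 50: kept as its own column
    G[0:2, 1] = 1      # MAC 2: ultra-rare
    G[1:4, 2] = 1      # MAC 3: ultra-rare
    print(collapse_ultra_rare(G).shape)
```

Collapsing reduces the number of columns entering the gene-based test, which is where the reduction in sparsity and computation comes from.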

Table: Computational Efficiency Comparison for Meta-Analysis Methods

| Method | LD Matrix Handling | Storage Complexity | Type I Error Control | Best For |

|---|---|---|---|---|

| Meta-SAIGE | Single matrix reused across phenotypes | O(MFK + MKP) | Excellent with SPA-GC adjustment | Large-scale phenome-wide studies |

| MetaSTAAR | Phenotype-specific matrices | O(MFKP + MKP) | Inflated for binary traits | Studies with limited phenotypes |

| Weighted Fisher's Method | No LD matrix required | O(MKP) | Well-controlled | Smaller datasets with simple traits |

Experimental Protocols

Protocol 1: Comprehensive Functional Annotation Workflow

Purpose: To systematically annotate both coding and non-coding variants from whole genome or exome sequencing data.

Materials:

- Input Data: VCF files from variant calling

- Annotation Tools: Ensembl VEP or ANNOVAR

- Functional Databases: dbNSFP, regulatory element databases

- Computational Resources: High-performance computing cluster

Procedure:

- Primary Annotation: Run VCF files through Ensembl VEP with basic parameters to map variants to genomic features (genes, transcripts) [10].

- Regulatory Element Integration: Annotate non-coding variants with regulatory information using specialized databases for promoters, enhancers, and transcription factor binding sites [10].

- Impact Prediction: Incorporate multiple functional prediction scores (CADD, PolyPhen-2, SIFT, REVEL) for missense variants [11].

- Conservation Analysis: Add evolutionary conservation scores to identify evolutionarily constrained elements [10].

- 3D Genome Context: Optional: Integrate Hi-C data for long-range regulatory interactions when available [10].

Protocol 2: Optimized Masking Strategy Selection

Purpose: To identify the most effective variant masking strategy for gene-level burden tests.

Materials:

- Genetic Data: Whole exome or genome sequencing data

- Phenotype Data: Well-characterized quantitative or binary traits

- Software: GeneMasker script or similar implementation [11]

Procedure:

- Mask Definition: Define multiple masks based on combinations of:

- Functional categories (pLoF, damaging missense, synonymous)

- MAF thresholds (ultra-rare <0.01%, rare <0.1%, low-frequency <1%)

- Prediction algorithms (combinations of CADD, PolyPhen-2, SIFT, REVEL) [11]

- Burden Testing: Perform gene-level burden tests for each mask across target phenotypes.

- Association Counting: Count significant associations (after multiple testing correction) for each mask.

- Strategy Optimization: Identify mask combinations that maximize significant associations while maintaining biological interpretability.

- Validation: Apply optimized masking strategy to independent validation cohort.
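The mask definition step can be illustrated with a short sketch. The `Variant` record, the `damaging` flag (standing in for whatever combination of CADD, PolyPhen-2, SIFT, or REVEL scores is chosen), the mask names, and `apply_masks` are all hypothetical; only the functional categories and the MAF thresholds (<0.01%, <0.1%, <1%) come from the protocol above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    gene: str
    consequence: str        # e.g. "pLoF", "missense", "synonymous"
    maf: float              # minor allele frequency as a fraction
    damaging: bool = False  # hypothetical summary of prediction scores


# Each mask is a predicate; thresholds follow the protocol's MAF bins.
MASKS = {
    "pLoF_ultra_rare": lambda v: v.consequence == "pLoF" and v.maf < 1e-4,
    "pLoF_damaging_missense_rare": lambda v: (
        v.consequence == "pLoF"
        or (v.consequence == "missense" and v.damaging)
    ) and v.maf < 1e-3,
    "synonymous_low_freq": lambda v: v.consequence == "synonymous" and v.maf < 1e-2,
}


def apply_masks(variants, masks=MASKS):
    """Return {mask name: {gene: [variants passing that mask]}}."""
    selected = {}
    for name, keep in masks.items():
        per_gene = {}
        for v in variants:
            if keep(v):
                per_gene.setdefault(v.gene, []).append(v)
        selected[name] = per_gene
    return selected


if __name__ == "__main__":
    demo = [
        Variant("GENE1", "pLoF", 0.00005),
        Variant("GENE1", "missense", 0.0005, damaging=True),
        Variant("GENE2", "synonymous", 0.005),
    ]
    for mask, genes in apply_masks(demo).items():
        print(mask, {g: len(vs) for g, vs in genes.items()})
```

Each per-gene variant list would then feed one burden test, and the number of significant genes per mask is what the association-counting step compares.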


Protocol 3: Rare Variant Meta-Analysis with Type I Error Control

Purpose: To conduct powerful rare variant meta-analysis while controlling type I error, especially for binary traits with case-control imbalance.

Materials:

- Cohort Data: Multiple cohorts with genetic and phenotype data

- Software: Meta-SAIGE pipeline [4]

- Computational Resources: Sufficient storage for summary statistics and LD matrices

Procedure:

- Per-Cohort Preparation:

- Calculate per-variant score statistics (S) using SAIGE for each cohort

- Generate sparse LD matrix (Ω) for each cohort [4]

- Summary Statistics Combination:

- Combine score statistics from all cohorts into a single superset

- Apply genotype-count-based saddlepoint approximation (SPA) for binary traits [4]

- Gene-Based Tests:

- Conduct Burden, SKAT, and SKAT-O set-based tests

- Incorporate functional annotations and MAF cutoffs [4]

- P-Value Combination:

- Use Cauchy combination method to combine P values across different functional annotations and MAF cutoffs [4]

- Result Interpretation:

- Apply exome-wide significance threshold (typically P < 2.5 × 10⁻⁶)
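The P-value combination step relies on the Cauchy combination method, which can be sketched as follows. This is a generic version of the published formula (a weighted sum of tan((0.5 − p)π) terms referred to a standard Cauchy distribution), not code taken from Meta-SAIGE. The function name, the equal default weights, and the small-p and large-statistic shortcuts are choices made for this example.

```python
import math


def cauchy_combination(pvalues, weights=None):
    """Combine P values (each strictly between 0 and 1) with the Cauchy method."""
    if weights is None:
        weights = [1.0] * len(pvalues)
    total = float(sum(weights))
    stat = 0.0
    for w, p in zip(weights, pvalues):
        if p < 1e-15:
            # tan((0.5 - p) * pi) is approximately 1 / (p * pi) for tiny p.
            term = 1.0 / (p * math.pi)
        else:
            term = math.tan((0.5 - p) * math.pi)
        stat += (w / total) * term
    if stat > 1e15:
        # Tail approximation avoids cancellation in 0.5 - atan(stat) / pi.
        return 1.0 / (stat * math.pi)
    return 0.5 - math.atan(stat) / math.pi


if __name__ == "__main__":
    # Hypothetical P values from three annotation/MAF-cutoff combinations.
    print(cauchy_combination([1e-8, 0.5, 0.9]))
```

A single very small P value dominates the combined result, so one well-chosen annotation and MAF cutoff can carry a gene even when the other combinations show nothing.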

Research Reagent Solutions

Table: Essential Tools for Functional Annotation and Variant Analysis

| Tool/Resource | Type | Primary Function | Application Context |

|---|---|---|---|

| Ensembl VEP | Software tool | Functional annotation of variants | Mapping variants to genes, predicting regulatory consequences [10] |

| ANNOVAR | Software tool | Variant annotation | Comprehensive functional annotation including coding and non-coding regions [10] |

| dbNSFP | Database | Functional prediction scores | Aggregating multiple functional prediction algorithms for missense variants [11] |

| DeepVariant | Variant caller | Deep learning-based variant calling | Accurate SNV and indel calling using convolutional neural networks [13] |

| SAIGE-GENE+ | Statistical software | Rare variant association tests | Gene-based tests accounting for sample relatedness and case-control imbalance [4] |

| Meta-SAIGE | Meta-analysis tool | Rare variant meta-analysis | Scalable meta-analysis with accurate type I error control [4] |

| GVRP | Pipeline | Variant refinement | Machine learning-based false positive filtering in suboptimal alignment conditions [13] |

Frequently Asked Questions (FAQs)

FAQ 1: What is the core difference between the "Infinitesimal Model" and the "Rare Allele Model" of complex trait architecture?

The two models propose different explanations for the "missing heritability" of complex traits. The table below summarizes their distinct characteristics.

Table 1: Key Models in Complex Trait Genetics

| Feature | Infinitesimal Model (Common Variants) | Rare Allele Model (Rare Variants) |

|---|---|---|

| Core Proposition | Many common variants, each with a very small effect, collectively explain genetic variance [14]. | Many rare, recently derived alleles, often with larger individual effects, explain genetic variance [14]. |

| Variant Frequency | Common (MAF > 5%) [14] | Rare (MAF < 1%) [15] [14] |

| Expected Effect Size | Small to very small per variant [14] | Can be large (e.g., odds ratio > 2) per variant [14] |

| Key Supporting Evidence | GWAS has identified thousands of common variants; collective common variants capture much genetic variance in large studies [14]. | Evolutionary theory predicts deleterious disease alleles should be kept at low frequency; empirical data shows deleterious variants are rare [14]. |

FAQ 2: When should I use a burden test versus a variance-component test like SKAT for gene-based rare variant association analysis?

The choice depends on the assumed genetic architecture of the rare variants within the gene or region of interest.

Table 2: Choosing a Gene-Based Rare Variant Association Test

| Test Type | Underlying Assumption | Optimal Use Case | Potential Pitfall |

|---|---|---|---|

| Burden Test | All or most rare variants in the set are causal and influence the trait in the same direction [1] [15]. | Analyzing a set of likely deleterious protein-truncating variants [15]. | Loss of power if both risk and protective variants are present in the set (effect cancellation) [1] [15]. |

| Variance Component Test (e.g., SKAT) | Variant effects are heterogeneous—meaning they vary in effect size and/or direction [1]. | Analyzing a mixed set of variant types (e.g., missense, regulatory) or when effect directions are unknown [1]. | Less powerful than burden tests when most variants are causal and have effects in the same direction [1]. |

| Combined Test (e.g., SKAT-O) | A weighted combination that does not assume a purely burden or heterogeneous architecture [1]. | A robust default choice when the true genetic model is unknown [1]. | The specific weighting (ρ) may only cover a limited set of combinations [1]. |

FAQ 3: My mixed-effects model for genetic association fails to converge or gives a convergence warning. What steps can I take?

Model convergence issues are common in mixed-model analysis. The following troubleshooting guide outlines a systematic approach to resolve them.

Table 3: Troubleshooting Guide for Mixed-Effects Model Convergence

| Step | Action | Rationale & Notes |

|---|---|---|

| 1 | Try fitting the model with all available optimizers [16]. | Different optimization algorithms may succeed where others fail. This is a standard and recommended practice [16]. |

| 2 | Increase the maximum number of iterations allowed for the optimizer [16]. | The default number of iterations may be insufficient for complex models. There are no side-effects to increasing this number [16]. |

| 3 | Simplify the random effects structure, but only as a last resort and with caution. | Overly complex random-effects structures (e.g., many random slopes) can prevent convergence. However, removing them can inflate type I error, so this should be done judiciously [16]. |

| 4 | For extremely large models, utilize High-Performance Computing (HPC) clusters. | While this doesn't reduce computation time per model, it frees local resources and allows multiple models to be run simultaneously [16]. |

FAQ 4: How can meta-analysis improve the power to detect rare variant associations, and what methods are available?

Meta-analysis combines summary statistics from multiple cohorts, increasing the total sample size to detect associations that are underpowered in individual studies [4]. Newer methods like Meta-SAIGE are designed to address specific challenges of rare variant meta-analysis:

- Controls Type I Error Inflation: Uses a two-level saddlepoint approximation to accurately control for case-control imbalance, a common issue in biobank studies of low-prevalence diseases [4].

- Computational Efficiency: Reuses a single linkage disequilibrium (LD) matrix across all phenotypes, drastically reducing computational costs for phenome-wide analyses [4].

- Power: Achieves statistical power comparable to pooled analysis of individual-level data and is more powerful than simpler methods like the weighted Fisher's method [4].

Experimental Protocols

Protocol 1: Hierarchical Modeling for Rare Variant Association

This protocol provides a framework for relating a set of rare variants to a phenotype while incorporating variant characteristics and accounting for heterogeneity [1].

1. Model Specification

The hierarchical model can be specified as follows:

- Level 1 (Phenotype Model): $g\{E(Y_i)\} = X_i^T \alpha + G_i^T \beta$

- Where $Y_i$ is the trait for individual $i$, $X_i$ are covariates (e.g., age, sex, principal components), $G_i$ is a vector of genotypes, and $\beta$ is a vector of variant effects [1].

- Level 2 (Variant Effect Model): The effects $\beta$ are modeled as random: $\beta \sim N(\mu Z, \tau^2 I)$.

- Here, $\mu$ represents the group effect of variant characteristics $Z$ (e.g., functional annotation scores), and $\tau^2$ represents the heterogeneity effect, or residual variant-specific effects not explained by $Z$ [1].

2. Testing Procedure

The test for association involves deriving two independent score statistics:

- Score Statistic $S_\mu$: Tests the null hypothesis that the group effect is zero ($H_0: \mu = 0$).

- Score Statistic $S_{\tau^2}$: Tests the null hypothesis that the heterogeneity effect is zero ($H_0: \tau^2 = 0$) [1].

These independent statistics can be combined using methods like Fisher's combination to create a robust omnibus test that is powerful across a wide range of scenarios [1].
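As an illustration of the final combination step, the sketch below applies Fisher's method to the P values of two independent tests, such as the tests for the group effect and for the heterogeneity effect. The input P values are hypothetical, and the function is the generic Fisher combination rather than code from the cited framework.

```python
import math

from scipy import stats


def fisher_combination(pvalues):
    """Fisher's method: -2 * sum(log p) follows chi-square with 2k df under the null."""
    statistic = -2.0 * sum(math.log(p) for p in pvalues)
    return stats.chi2.sf(statistic, df=2 * len(pvalues))


if __name__ == "__main__":
    p_group = 0.05          # hypothetical P value for H0: mu = 0
    p_heterogeneity = 0.05  # hypothetical P value for H0: tau^2 = 0
    print(fisher_combination([p_group, p_heterogeneity]))
```

The method is valid only when the component tests are independent, which is why the independence of the two score statistics matters for the omnibus test.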

Protocol 2: Meta-Analysis of Rare Variant Associations Using Meta-SAIGE

This protocol details the steps for a large-scale, cross-cohort meta-analysis of gene-based rare variant tests [4].

1. Per-Cohort Preparation

For each participating cohort k:

- Use SAIGE to perform single-variant score tests, generating:

- Per-variant score statistics ($S$).

- Their estimated variances.

- Accurate P-values adjusted for case-control imbalance and sample relatedness using SPA [4].

- Generate a sparse LD matrix ($\Omega$) for all variants in the regions of interest. This matrix is not phenotype-specific and can be reused across different traits [4].

2. Summary Statistics Combination

- Combine score statistics from all $K$ cohorts into a single superset.

- For binary traits, apply genotype-count-based SPA to the combined statistics to ensure proper type I error control in the meta-analysis [4].

- Recalculate the covariance matrix of the combined score statistics using the sandwich form $\mathrm{Cov}(S) = V^{1/2}\,\mathrm{Cor}(G)\,V^{1/2}$, where $\mathrm{Cor}(G)$ is derived from the sparse LD matrix [4].

3. Gene-Based Testing and Aggregation

- With the combined statistics, perform Burden, SKAT, and SKAT-O tests for each gene or region.

- Collapse ultrarare variants (MAC < 10) to improve error control and power [4].

- Use the Cauchy combination method to combine P-values from tests with different functional annotations and MAF cutoffs into a final gene-based P-value [4].
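The combination and testing steps can be sketched with summary statistics alone. The code below is a simplified illustration, not the Meta-SAIGE implementation. It assumes that V is the diagonal matrix of per-variant score variances, that score vectors and covariance matrices add across independent cohorts, and that no SPA adjustment or ultra-rare collapsing is applied. The SKAT P value uses a Satterthwaite moment-matching approximation, whereas production software uses more accurate methods. All function names are hypothetical.

```python
import numpy as np
from scipy import stats


def score_covariance(variances, cor):
    """Cov(S) = V^(1/2) Cor(G) V^(1/2), with V = diag(variances)."""
    sd = np.sqrt(np.asarray(variances, dtype=float))
    return np.asarray(cor, dtype=float) * np.outer(sd, sd)


def combine_cohorts(scores, variances, cors):
    """Add score vectors and covariance matrices over independent cohorts."""
    S = np.sum(np.asarray(scores, dtype=float), axis=0)
    cov = sum(score_covariance(v, c) for v, c in zip(variances, cors))
    return S, cov


def burden_pvalue(S, cov, weights):
    """Burden test: (w'S)^2 / (w' Cov w) is chi-square with 1 df under the null."""
    stat = (weights @ S) ** 2 / (weights @ cov @ weights)
    return stats.chi2.sf(stat, df=1)


def skat_pvalue_satterthwaite(S, cov, weights):
    """SKAT statistic Q = sum (w_j S_j)^2 with a moment-matched chi-square null."""
    Q = np.sum((weights * S) ** 2)
    lam = np.linalg.eigvalsh(cov * np.outer(weights, weights))
    lam = lam[lam > 0]                      # drop numerically non-positive eigenvalues
    scale = np.sum(lam**2) / np.sum(lam)    # match mean and variance of Q
    df = np.sum(lam) ** 2 / np.sum(lam**2)
    return stats.chi2.sf(Q / scale, df=df)


if __name__ == "__main__":
    cor = np.array([[1.0, 0.2], [0.2, 1.0]])   # hypothetical LD correlation
    S, cov = combine_cohorts(
        scores=[[2.0, 1.5], [1.0, 2.5]],       # two cohorts, two variants
        variances=[[1.0, 0.8], [1.2, 1.0]],
        cors=[cor, cor],
    )
    w = np.ones(2)
    print(burden_pvalue(S, cov, w), skat_pvalue_satterthwaite(S, cov, w))
```

The per-mask P values produced this way are the inputs that the Cauchy combination method merges into a final gene-based P value.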

The Scientist's Toolkit

Table 4: Essential Research Reagents and Computational Tools

| Tool / Resource | Type | Primary Function | Key Application in Research |

|---|---|---|---|

| SAIGE / SAIGE-GENE+ [4] [17] | Software | Fits mixed models for genetic association. | Accounts for sample relatedness and severe case-control imbalance in single-variant and gene-based tests [4]. |

| Meta-SAIGE [4] | Software | Performs rare variant meta-analysis. | Combines summary statistics from multiple cohorts, controlling type I error and boosting power for rare variants [4]. |

| Hierarchical Modeling [1] | Statistical Framework | Models variant effects as a function of characteristics. | Tests for group-level effects of variant annotations (Z) and residual heterogeneity (τ²), providing insight into association sources [1]. |

| Polygenic Risk Score (PRS) [18] | Risk Metric | Aggregates effects of many common variants. | Stratifies disease risk in the population; can be combined with monogenic risk for improved stratification [18]. |

| Whole Genome/Exome Sequencing (GS/ES) [15] [19] | Data | Captures rare and common variants across the genome. | Primary technology for discovering rare coding and non-coding variants associated with complex traits and rare diseases [15] [19]. |

| Saddlepoint Approximation (SPA) [4] | Statistical Method | Approximates distribution tails. | Used in SAIGE and Meta-SAIGE to calculate accurate p-values for rare variants under imbalance, preventing false positives [4]. |

Scalable Methods and Practical Applications: Implementing Mixed-Effect Models and Meta-Analysis

Frequently Asked Questions (FAQs)

What is SAIGE and what are its primary applications? SAIGE (Scalable and Accurate Implementation of Generalized mixed model) is an R package designed for genetic association tests in large cohorts. It performs single-variant tests for binary and quantitative traits, and its extension, SAIGE-GENE, conducts gene- or region-based tests (Burden, SKAT, SKAT-O). It is particularly useful for accounting for sample relatedness and case-control imbalance in biobank-scale data [20] [21].

Why is it important to account for population structure and relatedness? Population structure (differences in allele frequencies between subgroups) and cryptic relatedness (unknown familial relationships) can cause spurious associations in genetic studies, leading to an inflated false positive rate (type I error). Mixed-effects models control for these confounding factors by including a genetic relatedness matrix (GRM) as a random effect [22].
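For readers unfamiliar with the GRM, the sketch below computes one common version of it from a genotype matrix: each variant is centered and scaled by its allele frequency, and relatedness is the average cross-product over variants. This is a generic textbook definition on simulated data. SAIGE's own GRM computation (including its sparse variant) may differ in detail, and the function name is an assumption.

```python
import numpy as np


def genetic_relatedness_matrix(G):
    """GRM = Z Z^T / M, where Z holds standardized genotypes.

    G: (n_individuals, n_variants) array of allele counts (0, 1, 2).
    """
    G = np.asarray(G, dtype=float)
    p = G.mean(axis=0) / 2.0                  # sample allele frequency per variant
    polymorphic = (p > 0) & (p < 1)           # monomorphic variants carry no information
    G, p = G[:, polymorphic], p[polymorphic]
    Z = (G - 2 * p) / np.sqrt(2 * p * (1 - p))
    return Z @ Z.T / Z.shape[1]


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    maf = rng.uniform(0.05, 0.5, size=2000)
    G = rng.binomial(2, maf, size=(50, 2000))  # simulated unrelated individuals
    K = genetic_relatedness_matrix(G)
    print(K.shape, round(float(np.diag(K).mean()), 3))
```

For unrelated individuals the diagonal is close to 1 and the off-diagonal entries are close to 0. Relatives and shared ancestry show up as larger off-diagonal values, which is the structure the random effect absorbs.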

My analysis has a highly unbalanced case-control ratio (e.g., 1:1000). Can SAIGE handle this? Yes. For binary traits with unbalanced case-control ratios, SAIGE uses the saddlepoint approximation (SPA) instead of the standard normal approximation to calculate accurate p-values, which is crucial for controlling type I error [4] [21].
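To show why the saddlepoint approximation matters under imbalance, the sketch below compares a normal approximation and a saddlepoint approximation against an exact tail probability in a toy setting. It assumes independent individuals, no covariates, a constant case probability of 1%, and a score statistic S = Σ gᵢ(Yᵢ − μᵢ), so the exact answer is a binomial tail. The function `spa_upper_tail` is an illustrative one-sided implementation of the standard Barndorff-Nielsen-type formula, not SAIGE's code.

```python
import numpy as np
from scipy import optimize, stats


def spa_upper_tail(g, mu, s):
    """Approximate P(S >= s) for S = sum_i g_i (Y_i - mu_i), Y_i ~ Bernoulli(mu_i).

    Assumes g_i >= 0 and 0 < s < sum_i g_i (1 - mu_i).
    """
    g = np.asarray(g, dtype=float)
    mu = np.asarray(mu, dtype=float)

    def K(t):   # cumulant generating function of S
        return np.sum(np.log(1 - mu + mu * np.exp(g * t))) - t * np.sum(g * mu)

    def K1(t):  # first derivative
        e = mu * np.exp(g * t)
        return np.sum(g * e / (1 - mu + e)) - np.sum(g * mu)

    def K2(t):  # second derivative
        e = mu * np.exp(g * t)
        return np.sum(g**2 * (1 - mu) * e / (1 - mu + e) ** 2)

    t_hat = optimize.brentq(lambda t: K1(t) - s, 0.0, 50.0)  # saddlepoint
    w = np.sign(t_hat) * np.sqrt(2 * (t_hat * s - K(t_hat)))
    v = t_hat * np.sqrt(K2(t_hat))
    return stats.norm.sf(w + np.log(v / w) / w)


if __name__ == "__main__":
    n, prevalence, observed_cases = 1000, 0.01, 25   # carriers and cases among them
    g, mu = np.ones(n), np.full(n, prevalence)
    s = observed_cases - n * prevalence
    exact = stats.binom.sf(observed_cases - 1, n, prevalence)
    normal = stats.norm.sf(s / np.sqrt(n * prevalence * (1 - prevalence)))
    print(f"exact={exact:.2e} normal={normal:.2e} spa={spa_upper_tail(g, mu, s):.2e}")
```

In this toy setting the normal approximation should understate the tail probability by a wide margin, which is the mechanism behind inflated type I error for unbalanced binary traits, while the saddlepoint value stays much closer to the exact one.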

What is the difference between a burden test and a variance-component test like SKAT? Burden tests assume all variants in a gene-set have effects in the same direction on the trait and collapse them into a single score. They are more powerful when a large proportion of variants are causal with similar effect directions. In contrast, variance-component tests (SKAT) allow variants to have effects in different directions and with heterogeneity. They are more powerful when only a small subset of variants are causal or both risk and protective variants are present [1] [4].

What are the key input files needed to run a SAIGE analysis? The essential inputs are:

- A phenotype file (space/tab-delimited) containing sample IDs, the phenotype, and covariates [23].

- Genotype files in PLINK binary format (.bed, .bim, .fam) for model fitting and variance ratio estimation, or in BGEN format for single-variant tests [20] [23].

- (Optional) A sparse GRM file and its sample ID file if using a sparse GRM to fit the null model [23].

Troubleshooting Common Issues

Problem: SAIGE installation fails due to missing dependencies.

- Solution: Ensure all system and R dependencies are installed. SAIGE requires specific versions of R, gcc, cmake, and R packages (e.g., Rcpp, data.table, SPAtest, SKAT). You can use the provided conda environment file (`environment-RSAIGE.yml`) or the Docker image (`wzhou88/saige:0.45`) for a pre-configured environment [21].

Problem: The error "`vector::_M_range_check`" occurs when reading BGEN files.

- Solution: This error is often related to memory. Try using a smaller `memoryChunk` value (e.g., 2) when running the analysis [21].

Problem: P-values for variants with very low frequency (MAC < 3) are unrealistic.

- Solution: This is a known behavior of the SPA test with very low counts. Use a filter (e.g., `minMAC = 3`) to exclude these variants from the results [21].

Problem: Type I error inflation in rare variant association tests for binary traits with low prevalence.

- Solution: Ensure you are using the latest version of SAIGE and its meta-analysis extension, Meta-SAIGE, which applies a genotype-count-based SPA adjustment to control type I error rates effectively in such scenarios [4].

Problem: Long computation time for Step 1 (fitting the null model).

- Solution: You can speed up the process by using a sparse GRM. Additionally, for variance ratio estimation, you can use a PLINK file containing only a small subset of randomly selected markers (e.g., 1000) instead of the full set to reduce memory usage [23].

Experimental Protocol: SAIGE Workflow for Single-Variant Analysis

The following workflow is adapted from the SAIGE documentation for performing a genome-wide association study (GWAS) on a binary trait, accounting for sample relatedness [20] [23].

Workflow Overview: SAIGE Single-Variant Analysis

Step 1: Fitting the Null Generalized Linear Mixed Model (GLMM)

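This step fits the null model, estimating the non-genetic (covariate) effects and the variance component associated with the genetic relatedness matrix, and produces the model and variance ratio files used in Step 2.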

- Command: Execute the `step1_fitNULLGLMM.R` script.

- Key Input Parameters:

- `--plinkFile`: Path to the PLINK binary files.

- `--phenoFile`: Path to the phenotype file.

- `--phenoCol`: Name of the phenotype column in the phenotype file.

- `--covarColList`: Names of covariate columns (e.g., age, sex).

- `--traitType`: Set to `binary` or `quantitative`.

- `--outputPrefix`: Prefix for output files.

- Output:

- A model file (`outputPrefix.rda`) containing the fitted null model.

- A variance ratio file (`outputPrefix.varianceRatio.txt`) used for calibrating test statistics in Step 2 [23].
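When Step 1 is launched from a pipeline, it can help to assemble the command programmatically. The sketch below only builds and prints the command line and does not run SAIGE. The script name and flags are the ones listed above. The file paths and column names are hypothetical, and passing covariates as a comma-separated list and using the `--flag=value` form are assumptions that should be checked against the help output of the installed version.

```python
import shlex


def build_step1_command(plink_prefix, pheno_file, pheno_col, covariates,
                        trait_type, output_prefix):
    """Assemble the argument list for the Step 1 null-model script."""
    if trait_type not in ("binary", "quantitative"):
        raise ValueError("trait_type must be 'binary' or 'quantitative'")
    return [
        "Rscript", "step1_fitNULLGLMM.R",
        f"--plinkFile={plink_prefix}",
        f"--phenoFile={pheno_file}",
        f"--phenoCol={pheno_col}",
        # Assumption: covariate names are given as one comma-separated value.
        f"--covarColList={','.join(covariates)}",
        f"--traitType={trait_type}",
        f"--outputPrefix={output_prefix}",
    ]


if __name__ == "__main__":
    cmd = build_step1_command(
        plink_prefix="data/genotypes_autosomes",   # hypothetical paths and names
        pheno_file="data/phenotypes.txt",
        pheno_col="disease_status",
        covariates=["age", "sex"],
        trait_type="binary",
        output_prefix="results/step1_disease_status",
    )
    print(shlex.join(cmd))  # pass cmd to subprocess.run(cmd, check=True) to execute
```

Keeping the arguments in a list rather than a single string avoids shell-quoting problems when paths contain spaces.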

Step 2: Performing Single-Variant Association Tests

This step tests each genetic variant for association with the trait, using the null model from Step 1.

- Command: Execute the `step2_SPAtests.R` script.

- Key Input Parameters:

- `--modelFile`: The `.rda` file from Step 1.

- `--varianceRatioFile`: The `.txt` file from Step 1.

- `--bgenFile`: Path to the genotype data in BGEN format (with `--bgenFileIndex` for the index).

- `--sampleFile`: Sample file for the BGEN.

- `--chrom`: Chromosome to analyze.

- `--minMAC`: Minimum minor allele count to test (recommended ≥ 3).

- `--outputPrefix`: Prefix for output files.

- Output:

The Scientist's Toolkit: Essential Research Reagents

Table 1: Key Software and Data Components for a SAIGE Analysis

| Item | Function in the Workflow | Key Notes |

|---|---|---|

| SAIGE R Package | Core software for performing mixed-model association tests. | Requires R and specific dependencies. A Docker image is available for easier deployment [21]. |

| PLINK Binary Files | Used in Step 1 to calculate the Genetic Relatedness Matrix (GRM) and estimate the variance ratio. | A merged set of files across all autosomes is typically used [20]. |

| BGEN Genotype Files | Format for the imputed genotype dosage data used in Step 2 for association testing. | Must be 8-bit encoded. An index file (.bgen.bgi) is required [20] [21]. |

| Phenotype File | A tab/space-delimited file containing the trait and covariate data for all samples. | Must include a column for sample IDs that matches the genotype data [23]. |

| Genetic Relatedness Matrix (GRM) | A matrix quantifying the genetic similarity between all pairs of individuals, included as a random effect. | Can be a "full" (dense) matrix or a "sparse" matrix to improve computational efficiency [23] [4]. |

| Conda Environment / Docker | Pre-configured environments (a conda environment or a Docker container) that package SAIGE with all its dependencies. | Simplifies installation and ensures reproducibility across different systems [21]. |

Meta-SAIGE represents a significant methodological advancement in the field of rare variant association meta-analysis. It addresses two critical challenges that have plagued existing methods: inadequate type I error control for low-prevalence binary traits and substantial computational burdens in large-scale analyses. By combining summary statistics from multiple cohorts, meta-analysis enhances the power to detect associations that may not reach significance in individual studies, which is particularly valuable for rare variants where low minor allele frequencies often limit statistical power in single cohorts [4].

The method extends SAIGE-GENE+, a robust framework for set-based rare variant association tests that accommodates sample relatedness and case-control imbalance. Meta-SAIGE builds upon this foundation to enable scalable cross-cohort analysis while maintaining statistical rigor. Empirical validation using UK Biobank whole-exome sequencing data demonstrates that Meta-SAIGE effectively controls type I error rates while achieving power comparable to pooled individual-level analysis with SAIGE-GENE+ [4] [24].

Technical Architecture & Methodological Innovations

Core Analytical Framework

Meta-SAIGE employs a sophisticated three-step analytical pipeline that ensures both computational efficiency and statistical accuracy:

Step 1: Cohort-Level Preparation - Each participating cohort generates per-variant score statistics (S) using SAIGE, which employs generalized linear mixed models to account for sample relatedness. Crucially, this step also produces a sparse linkage disequilibrium (LD) matrix (Ω) that captures pairwise correlations between genetic variants within tested regions [4].

Step 2: Summary Statistics Integration - Score statistics from multiple cohorts are consolidated into a single superset. For binary traits, Meta-SAIGE applies a two-level saddlepoint approximation approach: first at the individual cohort level, then using a genotype-count-based SPA for the combined statistics across cohorts [4].

Step 3: Gene-Based Association Testing - With integrated summary statistics, Meta-SAIGE performs comprehensive rare variant association tests including Burden, SKAT, and SKAT-O. The method incorporates multiple functional annotations and MAF cutoffs, then combines resulting p-values using the Cauchy combination method [4] [25].

Key Methodological Advancements

Meta-SAIGE introduces several innovations that distinguish it from existing approaches:

Reusable LD Matrices: Unlike methods such as MetaSTAAR that require phenotype-specific LD matrices, Meta-SAIGE employs LD matrices that are independent of phenotype, dramatically reducing computational overhead when analyzing multiple phenotypes [4].

Enhanced Type I Error Control: Through its dual saddlepoint approximation approach, Meta-SAIGE effectively addresses the type I error inflation that plagues other methods when analyzing binary traits with unbalanced case-control ratios, particularly for low-prevalence diseases [4] [24].

Ultra-rare Variant Collapsing: To improve power and computational efficiency, Meta-SAIGE collapses ultra-rare variants (those with minor allele count < 10) before testing, reducing data sparsity while maintaining statistical integrity [4] [25].

The following workflow diagram illustrates the complete Meta-SAIGE analytical process:

Computational Efficiency

The computational advantages of Meta-SAIGE are substantial, particularly for large-scale phenome-wide analyses:

Table: Computational Efficiency Comparison

| Metric | Meta-SAIGE | MetaSTAAR | Improvement |
|---|---|---|---|
| LD Matrix Storage | O(MFK + MKP) | O(MFKP + MKP) | Significant reduction by reusing LD matrices across phenotypes |
| Type I Error Control | Well-controlled for low-prevalence traits | Inflated for binary traits with case-control imbalance | Substantial improvement for unbalanced studies |
| Key Innovation | Reusable LD matrices across phenotypes | Phenotype-specific LD matrices | Eliminates redundant computations |

This efficiency stems primarily from Meta-SAIGE's ability to reuse LD matrices across different phenotypes, unlike MetaSTAAR which requires constructing separate LD matrices for each phenotype. When analyzing P different phenotypes across K cohorts with M variants, Meta-SAIGE requires O(MFK + MKP) storage compared to MetaSTAAR's O(MFKP + MKP) requirement, where F represents the number of variants with nonzero cross-products on average [4].
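To make the two storage expressions concrete, the sketch below evaluates them for one set of values. The numbers for M, F, K, and P are hypothetical and chosen only for illustration, and the results are counts of stored terms in the big-O expressions, not bytes.

```python
def storage_terms(M, F, K, P, reuse_ld):
    """Count stored terms: LD cross-products plus per-phenotype summary statistics."""
    # LD matrices are stored once per cohort if reusable, or once per cohort and phenotype.
    ld_terms = M * F * K if reuse_ld else M * F * K * P
    # Summary statistics are needed per variant, cohort, and phenotype in both cases.
    summary_terms = M * K * P
    return ld_terms + summary_terms

# Hypothetical scenario: 1 million variants, 100 nonzero cross-products per variant,
# 3 cohorts, 100 phenotypes.
M, F, K, P = 1_000_000, 100, 3, 100
reusable = storage_terms(M, F, K, P, reuse_ld=True)
per_phenotype = storage_terms(M, F, K, P, reuse_ld=False)
print(f"reusable LD:        {reusable:.3e} terms")
print(f"phenotype-specific: {per_phenotype:.3e} terms")
print(f"ratio:              {per_phenotype / reusable:.1f}x")
```

Under these assumed values the phenotype-specific layout stores about 50 times more terms, and the gap widens as the number of phenotypes grows.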

Performance Benchmarks & Experimental Validation

Type I Error Control

Rigorous simulation studies using UK Biobank whole-exome sequencing data demonstrate Meta-SAIGE's superior performance in maintaining appropriate type I error rates:

Table: Type I Error Rates for Binary Traits (α = 2.5×10⁻⁶)

| Method | Prevalence 5% | Prevalence 1% | Sample Ratio |
|---|---|---|---|
| No Adjustment | 4.21×10⁻⁵ | 2.12×10⁻⁴ | 1:1:1 |
| SPA Adjustment Only | 8.75×10⁻⁶ | 1.04×10⁻⁵ | 1:1:1 |
| Meta-SAIGE (Full) | 2.82×10⁻⁶ | 3.15×10⁻⁶ | 1:1:1 |
| No Adjustment | 5.88×10⁻⁵ | 3.01×10⁻⁴ | 4:3:2 |
| SPA Adjustment Only | 1.12×10⁻⁵ | 1.87×10⁻⁵ | 4:3:2 |
| Meta-SAIGE (Full) | 3.04×10⁻⁶ | 3.33×10⁻⁶ | 4:3:2 |

These results highlight Meta-SAIGE's robust type I error control across different disease prevalences and sample size distributions. Methods without proper adjustment, similar to MetaSTAAR's approach, exhibit severe inflation—nearly 100-fold higher than the nominal level for 1% prevalence traits. Meta-SAIGE's application of two-level saddlepoint approximation effectively addresses this inflation [4].
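The degree of inflation can be read off the table by dividing each empirical type I error rate by the nominal α. The short sketch below does this for the 1% prevalence column, using only the values reported in the table; the variable names are illustrative.

```python
ALPHA = 2.5e-6  # nominal significance level from the table title

# Empirical type I error rates at 1% prevalence, copied from the table.
rates_1pct = {
    ("No Adjustment", "1:1:1"): 2.12e-4,
    ("SPA Adjustment Only", "1:1:1"): 1.04e-5,
    ("Meta-SAIGE (Full)", "1:1:1"): 3.15e-6,
    ("No Adjustment", "4:3:2"): 3.01e-4,
    ("SPA Adjustment Only", "4:3:2"): 1.87e-5,
    ("Meta-SAIGE (Full)", "4:3:2"): 3.33e-6,
}

# Fold inflation relative to the nominal level.
fold = {key: rate / ALPHA for key, rate in rates_1pct.items()}
for (method, ratio), value in fold.items():
    print(f"{method:20s} {ratio}  {value:6.1f}x nominal")
```

Without adjustment the empirical rate is roughly 85 to 120 times the nominal level, while the full Meta-SAIGE procedure stays within about 1.3 times nominal.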

Statistical Power Assessment

Power simulations demonstrate that Meta-SAIGE achieves statistical power nearly identical to joint analysis of individual-level data using SAIGE-GENE+, while significantly outperforming alternative meta-analysis approaches:

- Across various effect sizes and genetic architectures, Meta-SAIGE maintained power equivalent to pooled analysis of individual-level data [4]

- The weighted Fisher's method, which aggregates SAIGE-GENE+ p-values weighted by sample size, showed substantially lower power across all simulation scenarios [4]

- Meta-SAIGE's power advantage is particularly pronounced for very rare variants (MAF < 0.1%) and low-prevalence binary traits where traditional methods struggle [4] [25]

Real Data Application

In a large-scale application to 83 low-prevalence disease phenotypes using UK Biobank and All of Us whole-exome sequencing data, Meta-SAIGE identified 237 gene-trait associations at exome-wide significance. Notably, 80 associations (33.8%) were not significant in either dataset alone, demonstrating the enhanced discovery power afforded by Meta-SAIGE's meta-analysis approach [4] [24].

Technical Support Center

Troubleshooting Guides

Issue 1: Chromosome Range Error in Association Testing

Problem Description

Users encounter the error: "chromosome 0 is out of the range of null model LOCO results" when running group-based tests [26].

Diagnosis Steps

- Verify that the LOCO (Leave-One-Chromosome-Out) option is consistently enabled or disabled across all analysis steps

- Check that chromosome specifications in the input files match those in the null model

- Confirm that the genomic coordinates in all input files (VCF, BIM, etc.) use the same reference genome build

Resolution Protocol

- Ensure the --LOCO=TRUE parameter is included in both Step 1 (null model fitting) and Step 2 (association testing)

- Validate chromosome formatting in all input files: chromosomes must be labeled consistently (e.g., "1" vs "chr1")

- For targeted analyses, specify the correct chromosome using the --chrom parameter in Step 2

- Regenerate the null model if chromosome information was modified after initial model fitting
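The chromosome-formatting check can be automated before rerunning the analysis. The following is a small sketch, not part of SAIGE or Meta-SAIGE: the function names are hypothetical, and it assumes the chromosome labels have already been read from the null model and from the input files into plain lists.

```python
def normalize_chrom(label):
    """Strip an optional 'chr' prefix and upper-case, so 'chr1' and '1' compare equal."""
    s = str(label).strip()
    if s.lower().startswith("chr"):
        s = s[3:]
    return s.upper()

def compare_chrom_labels(model_chroms, input_chroms):
    """Report input labels that do not literally match any null-model label."""
    model_raw = {str(c) for c in model_chroms}
    model_norm = {normalize_chrom(c) for c in model_chroms}
    report = {"format_mismatch": [], "missing": []}
    for label in sorted({str(c) for c in input_chroms}):
        if label in model_raw:
            continue  # exact match, nothing to report
        # Same chromosome under a different naming style vs. genuinely absent.
        key = "format_mismatch" if normalize_chrom(label) in model_norm else "missing"
        report[key].append(label)
    return report

# Hypothetical labels: the null model uses "1", "2", "22"; the input file uses "chr" prefixes
# and contains one variant assigned to chromosome "0".
report = compare_chrom_labels(["1", "2", "22"], ["chr1", "chr2", "0"])
print(report)
```

Labels listed under "format_mismatch" point to a naming inconsistency that can be fixed by relabeling, whereas labels under "missing" have no counterpart in the null model at all.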

Issue 2: Inflation of Type I Error for Very Rare Variants

Problem Description

Inflation of test statistics when analyzing very rare variants (MAF ≤ 0.1% or 0.01%), particularly for binary traits with unbalanced case-control ratios.

Diagnosis Steps

- Examine quantile-quantile plots for deviation from the null distribution

- Calculate genomic control inflation factors (λ) for different MAF strata

- Check case-control ratios for extreme imbalance (>1:20)
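The genomic control step above can be sketched as follows. This is a generic calculation rather than a SAIGE utility: each p-value is converted to a 1-degree-of-freedom chi-square statistic and the median is compared with its expected value under the null. The stratum labels and p-values are hypothetical.

```python
from statistics import NormalDist, median

CHI2_1_MEDIAN = 0.4549364  # median of a chi-square distribution with 1 df

def genomic_lambda(pvalues):
    """Genomic control inflation factor from two-sided p-values in (0, 1]."""
    nd = NormalDist()
    # Squared normal quantile at p/2 equals the 1-df chi-square quantile at 1 - p.
    chisq = [nd.inv_cdf(p / 2.0) ** 2 for p in pvalues]
    return median(chisq) / CHI2_1_MEDIAN

def lambda_by_stratum(pvalues_by_stratum):
    """Compute lambda separately for each MAF stratum."""
    return {name: genomic_lambda(p) for name, p in pvalues_by_stratum.items()}

# Hypothetical p-values: one well-calibrated stratum and one artificially inflated stratum.
n = 1001
uniform = [(i + 0.5) / n for i in range(n)]
strata = {
    "MAF <= 0.1%": uniform,
    "MAF <= 0.01%": [p ** 2 for p in uniform],
}
lambdas = lambda_by_stratum(strata)
for name, lam in lambdas.items():
    print(f"{name:14s} lambda = {lam:.2f}")
```

A value near 1 indicates calibrated test statistics; values well above 1 in the rarest stratum suggest that the adjustments listed under the resolution protocol are needed.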

Resolution Protocol

- Enable ultra-rare variant collapsing by setting the --col_co parameter to 10 (default in Meta-SAIGE), which collapses variants with MAC < 10

- Apply saddlepoint approximation by ensuring --is_output_moreDetails=TRUE in Step 2 to generate statistics necessary for SPA adjustment

- Utilize the genotype-count-based SPA implemented in Meta-SAIGE for combined statistics across cohorts

- For single-cohort analyses using SAIGE-GENE+, employ the efficient resampling option for variants with low MAC (--max_MAC_for_ER=10) [25]

Frequently Asked Questions (FAQs)

Q1: What are the key advantages of Meta-SAIGE over MetaSTAAR and other existing methods?

Meta-SAIGE offers three primary advantages: (1) Superior type I error control for low-prevalence binary traits through its two-level saddlepoint approximation approach; (2) Significantly reduced computational burden via reusable LD matrices across phenotypes; and (3) Enhanced power for detecting associations with very rare variants through ultra-rare variant collapsing. Empirical studies show Meta-SAIGE effectively controls type I error while MetaSTAAR can exhibit nearly 100-fold inflation at α = 2.5×10⁻⁶ for 1% prevalence traits [4].

Q2: How does Meta-SAIGE handle sample relatedness and population stratification?

Meta-SAIGE accounts for sample relatedness through generalized linear mixed models (GLMMs) in the cohort-level analysis. Each cohort employs SAIGE to fit null models that incorporate a genetic relationship matrix (GRM). For computational efficiency with large samples, Meta-SAIGE uses a sparse GRM approximation that preserves close family relationships while enabling scalable analysis of hundreds of thousands of samples [27].

Q3: What input data preparations are required from each participating cohort?

Each cohort must provide:

- Per-variant score statistics and their variances from SAIGE analysis

- Sparse LD matrices (Ω) containing pairwise cross-products of dosages for variants in tested regions

- Variant annotation files (marker_info.txt) with functional information

The sparse LD matrices are not phenotype-specific and can be reused across different phenotype analyses [28].
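To illustrate how these inputs fit together, the sketch below combines per-cohort score statistics into a simple meta-analytic burden test. It is a simplified illustration under stated assumptions, not the Meta-SAIGE implementation: it assumes a variant correlation matrix has already been derived from each cohort's LD matrix, rebuilds the score covariance by scaling that correlation with the reported variances, and uses a plain chi-square p-value without any saddlepoint adjustment. All function names and numbers are hypothetical.

```python
import math

def cohort_covariance(variances, corr):
    """Rebuild a score covariance matrix from variances and a variant correlation matrix."""
    sd = [math.sqrt(v) for v in variances]
    m = len(sd)
    return [[sd[i] * corr[i][j] * sd[j] for j in range(m)] for i in range(m)]

def meta_burden_pvalue(cohorts, weights):
    """Burden test on score statistics summed across cohorts (normal approximation)."""
    m = len(weights)
    S = [0.0] * m
    V = [[0.0] * m for _ in range(m)]
    for scores, variances, corr in cohorts:
        cov = cohort_covariance(variances, corr)
        for i in range(m):
            S[i] += scores[i]              # score statistics add across cohorts
            for j in range(m):
                V[i][j] += cov[i][j]       # so do their covariances
    burden = sum(w * s for w, s in zip(weights, S))
    var = sum(weights[i] * V[i][j] * weights[j] for i in range(m) for j in range(m))
    q = burden * burden / var
    # Upper tail of a chi-square distribution with 1 degree of freedom.
    return math.erfc(math.sqrt(q / 2.0))

# Hypothetical summary statistics for two variants in two cohorts.
corr = [[1.0, 0.1], [0.1, 1.0]]
cohort_a = ([2.1, 1.4], [1.5, 0.9], corr)
cohort_b = ([1.8, 0.7], [1.2, 0.8], corr)
p_single = meta_burden_pvalue([cohort_a], weights=[1.0, 1.0])
p_meta = meta_burden_pvalue([cohort_a, cohort_b], weights=[1.0, 1.0])
print(f"cohort A only: p = {p_single:.3g}")
print(f"A + B:         p = {p_meta:.3g}")
```

Because the correlation structure enters only through the LD matrices, the same matrices can serve every phenotype, with only the score statistics and variances changing from one analysis to the next.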

Q4: How does Meta-SAIGE improve power for detecting associations with very rare variants?

Meta-SAIGE incorporates several features to enhance power: (1) Collapsing of ultra-rare variants (MAC < 10) to reduce data sparsity; (2) Integration of multiple functional annotations (e.g., LoF, missense) and MAF cutoffs; (3) Combination of Burden, SKAT, and SKAT-O tests; and (4) Cauchy combination of p-values across different annotations and MAF cutoffs. These approaches collectively improve power while maintaining type I error control [4] [25].

Q5: What are the computational requirements for large-scale phenome-wide analyses?

Meta-SAIGE significantly reduces computational burdens through: (1) Reusable LD matrices across phenotypes (storage complexity O(MFK + MKP) vs O(MFKP + MKP) for MetaSTAAR); (2) Efficient C++ implementation with sparse matrix libraries; (3) Ultra-rare variant collapsing to reduce problem dimensionality. For example, analyzing the TTN gene (16,227 variants) required only 7 minutes and 2.1 GB memory with SAIGE-GENE+ compared to 164 CPU hours and 65 GB with SAIGE-GENE [4] [25].

Research Reagent Solutions

Table: Essential Software Tools for Meta-SAIGE Implementation

| Resource | Function | Source |
|---|---|---|
| SAIGE/SAIGE-GENE+ | Generates per-variant score statistics and sparse LD matrices for individual cohorts | GitHub: saigegit/SAIGE [28] |
| Meta-SAIGE R Package | Performs cross-cohort meta-analysis using summary statistics and LD matrices | GitHub: leelabsg/META_SAIGE [28] |
| PLINK Files | Standard format for genotype data input (.bed, .bim, .fam) | Required for SAIGE Step 2 [28] |
| Sparse GRM | Genetic relatedness matrix for accounting sample structure | Generated from genotype data in SAIGE Step 1 [27] |
| Functional Annotations | Variant effect predictions (e.g., LoF, missense, synonymous) | Incorporated in gene-based tests [25] |

Implementation Protocol

The following diagram illustrates the logical relationships between different software components and data types in a complete Meta-SAIGE analysis:

Advanced Applications & Research Implications

Multi-Ancestry Meta-Analysis

Meta-SAIGE supports cross-ancestry analyses through its optional ancestry indicator parameter. Researchers can specify ancestry codes for each cohort (e.g., "1 1 1 2" for three European and one East Asian cohort), enabling the investigation of rare variant associations across diverse populations while accounting for population-specific LD patterns [28].

Conditional Analysis for Independent Signals

To distinguish primary rare variant signals from associations driven by common variants in linkage disequilibrium, Meta-SAIGE incorporates conditional analysis functionality. This feature enables identification of independent rare variant associations by conditioning on specific variants or sets of variants, clarifying the genetic architecture of complex traits [27].

PheWAS-Scale Exploratory Analysis

The computational efficiency of Meta-SAIGE makes it particularly suitable for phenome-wide association studies (PheWAS) involving thousands of phenotypes. The reusable LD matrix approach dramatically reduces computational overhead, enabling comprehensive scans of gene-phenotype relationships across the medical phenome [4] [29].

The methodology presented establishes Meta-SAIGE as a robust, scalable solution for rare variant meta-analysis that effectively addresses key limitations of existing approaches while maintaining statistical rigor and computational practicality for large-scale biobank studies.

Frequently Asked Questions (FAQs)

Q1: What is Saddlepoint Approximation and why is it crucial for genetic association studies with binary traits?

Saddlepoint Approximation (SPA) is a powerful technique in statistics used to approximate probability distributions with a high degree of accuracy, particularly in the tail regions of the distribution. It uses the entire cumulant-generating function of a statistic, leading to an error bound of \(O(n^{-3/2})\), a significant improvement over the \(O(n^{-1/2})\) error of the normal approximation [30] [31]. This is crucial in genome-wide (GWAS) and phenome-wide (PheWAS) association studies because these analyses involve testing millions of genetic variants, and binary disease traits (cases vs. controls) are often highly imbalanced (e.g., 1 case for every 600 controls) [30]. In such situations, the normal approximation, used in standard score tests, fails to accurately capture the skewness of the test statistic's distribution, leading to severely inflated Type I error rates (false positives), especially for low-frequency and rare variants [32] [30] [33]. SPA controls these error rates effectively, ensuring the reliability of association signals.
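A toy example makes the contrast with the normal approximation concrete. The sketch below applies a standard saddlepoint tail formula (the Barndorff-Nielsen form) to a score statistic S = Σ gᵢ(Yᵢ − μᵢ) with independent Bernoulli outcomes, and compares it with the normal approximation and the exact binomial tail. It is a minimal illustration, not the SAIGE implementation; the function name, the bisection bounds, and the scenario (20 carriers, 1% prevalence, 3 carrier cases) are assumptions chosen for illustration.

```python
import math

def spa_upper_tail(s, g, mu, lo=-30.0, hi=30.0):
    """Saddlepoint approximation to P(S >= s), S = sum_i g_i * (Y_i - mu_i), Y_i ~ Bernoulli(mu_i).

    Assumes s > 0 lies strictly inside the support of S.
    """
    def K(t):   # cumulant-generating function of S
        return sum(math.log(1 - m + m * math.exp(gi * t)) - t * gi * m for gi, m in zip(g, mu))
    def K1(t):  # first derivative of K
        return sum(gi * m * math.exp(gi * t) / (1 - m + m * math.exp(gi * t)) - gi * m
                   for gi, m in zip(g, mu))
    def K2(t):  # second derivative of K
        return sum(gi * gi * m * (1 - m) * math.exp(gi * t) / (1 - m + m * math.exp(gi * t)) ** 2
                   for gi, m in zip(g, mu))
    # K1 is increasing in t, so bisection finds the saddlepoint solving K1(t) = s.
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if K1(mid) < s:
            lo = mid
        else:
            hi = mid
    t = 0.5 * (lo + hi)
    w = math.copysign(math.sqrt(2.0 * (t * s - K(t))), t)
    v = t * math.sqrt(K2(t))
    z = w + math.log(v / w) / w
    return 0.5 * math.erfc(z / math.sqrt(2.0))

# Hypothetical scenario: 20 heterozygous carriers, disease prevalence 1%, 3 carriers are cases.
n, prev, cases = 20, 0.01, 3
g, mu = [1.0] * n, [prev] * n
s = cases - n * prev                      # observed score statistic
var = n * prev * (1 - prev)               # variance of S under the null

p_spa = spa_upper_tail(s, g, mu)
p_norm = 0.5 * math.erfc(s / math.sqrt(2.0 * var))
p_exact = 1.0 - sum(math.comb(n, k) * prev ** k * (1 - prev) ** (n - k) for k in range(cases))

print(f"normal approximation: {p_norm:.2e}")
print(f"saddlepoint:          {p_spa:.2e}")
print(f"exact binomial tail:  {p_exact:.2e}")
```

In this setting the normal approximation understates the tail probability by several orders of magnitude, which is exactly the behavior that produces false positives, while the saddlepoint value stays within the same order of magnitude as the exact result. Because the statistic here is discrete, the continuous saddlepoint formula is not expected to match the exact tail precisely.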